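Introduction

- Spark SQL operation examples

- Spark SQL with Elasticsearch (ES)

- Lucene query syntax: ES is built on Lucene, so Lucene query syntax can be used for simple queries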
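One way to use Lucene syntax from Spark is the elasticsearch-hadoop `es.query` setting, which accepts either a URI query (`?q=...`) or a query DSL body. The sketch below assumes the DSL form and wraps the Lucene string in a `query_string` query, which parses standard Lucene syntax. The helper names and the `status`/`age` fields are hypothetical.

```python
import json


def lucene_to_es_query(lucene_query: str) -> str:
    """Wrap a Lucene query string in a query_string DSL body, usable as the es.query value."""
    # query_string understands Lucene syntax: field:value, AND/OR/NOT, [a TO b] ranges, wildcards
    return json.dumps({"query": {"query_string": {"query": lucene_query}}})


def with_lucene_query(reader, lucene_query: str):
    """Attach the query to an existing DataFrameReader (hypothetical helper)."""
    return reader.option("es.query", lucene_to_es_query(lucene_query))


# Hypothetical fields: active documents whose age is between 20 and 30 inclusive
es_query = lucene_to_es_query("status:active AND age:[20 TO 30]")
print(es_query)
```

Because the query travels to Elasticsearch, filtering happens on the ES side and only matching documents reach Spark.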

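Prefer Dataset/DataFrame over RDD. They still run on RDDs underneath, but Spark optimizes them (the Catalyst query optimizer and Tungsten's compact binary format). Also avoid ES mapping types (`index/type`): Elasticsearch is phasing that concept out.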

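- Flexible API

- Efficient serialization/deserialization and compression, which reduces GC pressure

- Well suited to structured data: data can be processed or accessed by column name or field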

code

Connection methods


This approach is more flexible.

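As a hedged sketch, assuming the elasticsearch-hadoop connector (`org.elasticsearch.spark.sql`) is on the classpath, two common ways to read an index are a DataFrameReader call and a SQL temporary view declared with `USING ... OPTIONS`. Host, index and view names are placeholders, and the resource is a bare index name with no mapping type.

```python
def es_options(nodes: str, port: int = 9200, wan_only: bool = False) -> dict:
    """Build elasticsearch-hadoop connection settings as string values."""
    return {
        "es.nodes": nodes,
        "es.port": str(port),
        # "true" when only the listed nodes are reachable (cloud, proxy, Docker)
        "es.nodes.wan.only": str(wan_only).lower(),
    }


def load_index(spark, index: str, opts: dict):
    """Way 1: DataFrameReader; the index name is passed to load()."""
    return spark.read.format("org.elasticsearch.spark.sql").options(**opts).load(index)


def view_ddl(view: str, index: str, opts: dict) -> str:
    """Way 2: SQL DDL that exposes the index as a temporary view."""
    settings = {**opts, "es.resource": index}
    props = ", ".join(f"'{key}' '{value}'" for key, value in settings.items())
    return f"CREATE TEMPORARY VIEW {view} USING org.elasticsearch.spark.sql OPTIONS ({props})"


def register_view(spark, view: str, index: str, opts: dict) -> None:
    # After this, spark.sql(f"SELECT ... FROM {view}") queries the index
    spark.sql(view_ddl(view, index, opts))


opts = es_options("es-host", wan_only=True)  # "es-host" and "my_index" are placeholders
print(view_ddl("my_index_view", "my_index", opts))
```

The DataFrameReader form suits programmatic jobs; the view form lets the rest of the pipeline be written in plain SQL.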

SQL operation example

schema


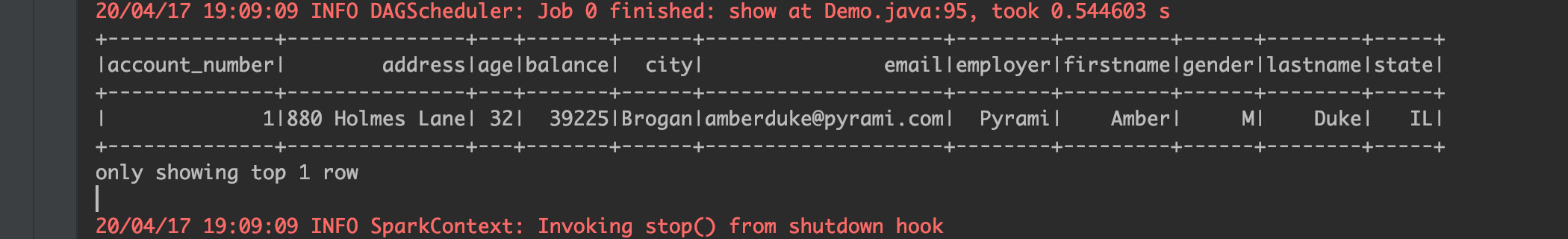

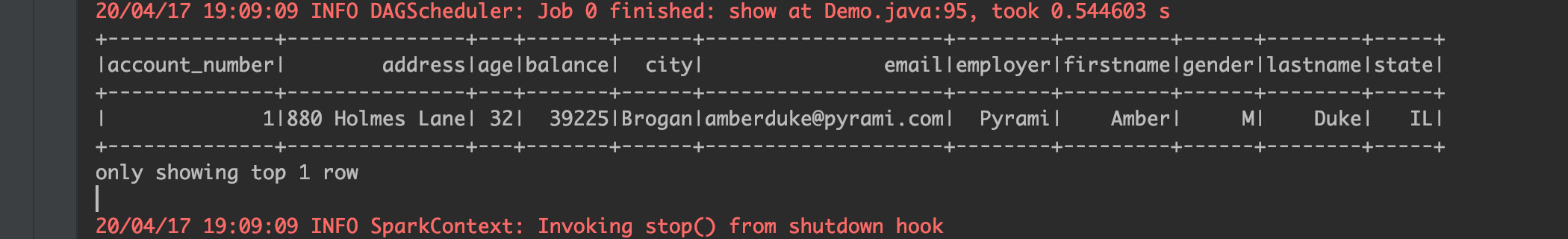

You can print the results.

