ECK

https://www.elastic.co/guide/en/cloud-on-k8s/2.0/k8s-install-helm.html


values.yaml


image


Create the Elasticsearch and Kibana instances

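As a minimal sketch of what such instances can look like once the ECK operator is running, the manifest below declares one single-node Elasticsearch cluster and one Kibana that points at it. The name `quickstart`, the version `8.0.0`, and the node count are assumptions chosen for illustration, not values taken from these notes.

```yaml
# Sketch only: the name, version, and sizing are assumptions.
apiVersion: elasticsearch.k8s.elastic.co/v1
kind: Elasticsearch
metadata:
  name: quickstart            # hypothetical cluster name
spec:
  version: 8.0.0              # assumed version; use one your ECK release supports
  nodeSets:
    - name: default
      count: 1
      config:
        # Avoids having to raise vm.max_map_count on the nodes (fine for testing).
        node.store.allow_mmap: false
---
apiVersion: kibana.k8s.elastic.co/v1
kind: Kibana
metadata:
  name: quickstart
spec:
  version: 8.0.0              # should match the Elasticsearch version
  count: 1
  elasticsearchRef:
    name: quickstart          # refers to the Elasticsearch resource above
```

ECK stores the password of the built-in `elastic` user in a Secret named after the cluster (here `quickstart-es-elastic-user`).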

kafka


values.yaml


minio

Modify the cluster configuration. Without this change the operator reports the error: operator TLS secret not found: secrets "operator-tls" not found


According to the official documentation, the operator cannot start properly unless this is configured.

Images


Check the deployment


Create a NodePort Service for the console

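A NodePort Service for the operator console could look roughly like the sketch below. The namespace `minio-operator`, the selector label `app: console`, the console port `9090`, and the node port `30090` are all assumptions; check the actual labels and ports of the console pods (for example with `kubectl get pods --show-labels`) before applying anything like this.

```yaml
# Sketch only: namespace, selector, and ports are assumptions to verify against your install.
apiVersion: v1
kind: Service
metadata:
  name: console-nodeport        # hypothetical name
  namespace: minio-operator     # assumed operator namespace
spec:
  type: NodePort
  selector:
    app: console                # assumed label on the console pods
  ports:
    - name: http
      port: 9090                # assumed console HTTP port
      targetPort: 9090
      nodePort: 30090           # hypothetical; must fall in the cluster's NodePort range
```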

Open the MinIO operator console through the NodePort. The login token is obtained with the following command:


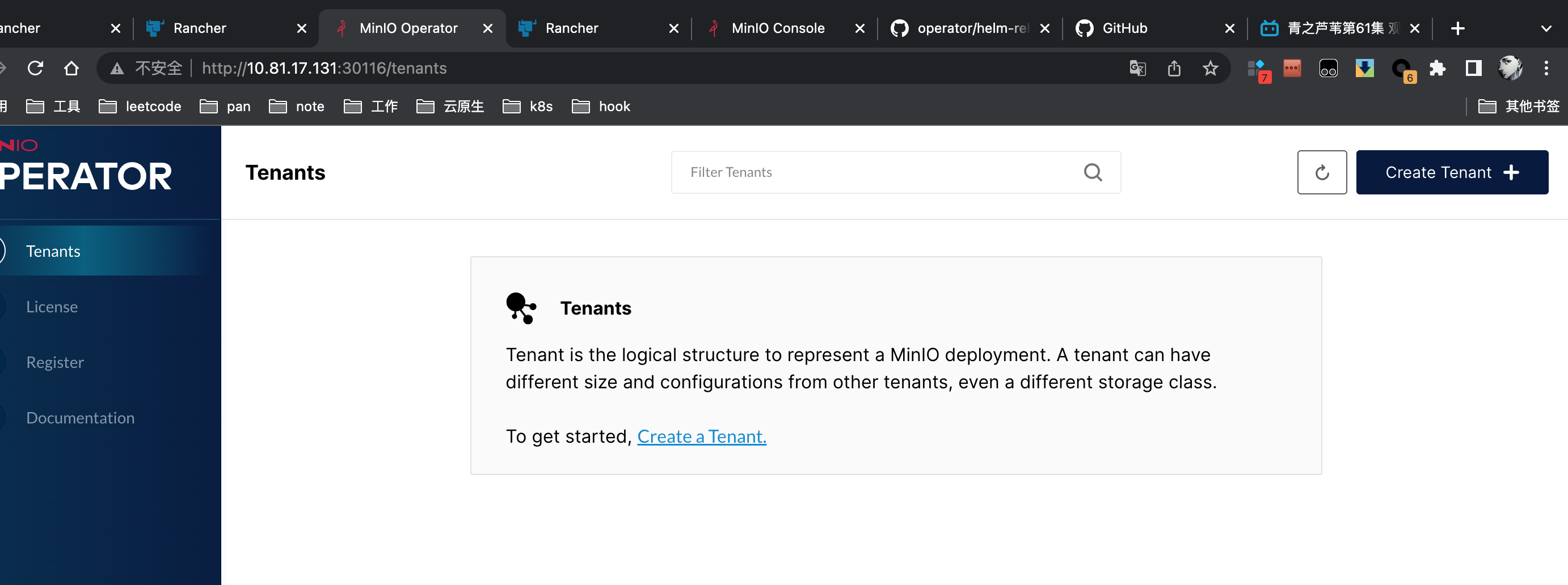

After logging in

Install a tenant. Each tenant occupies its own namespace exclusively.


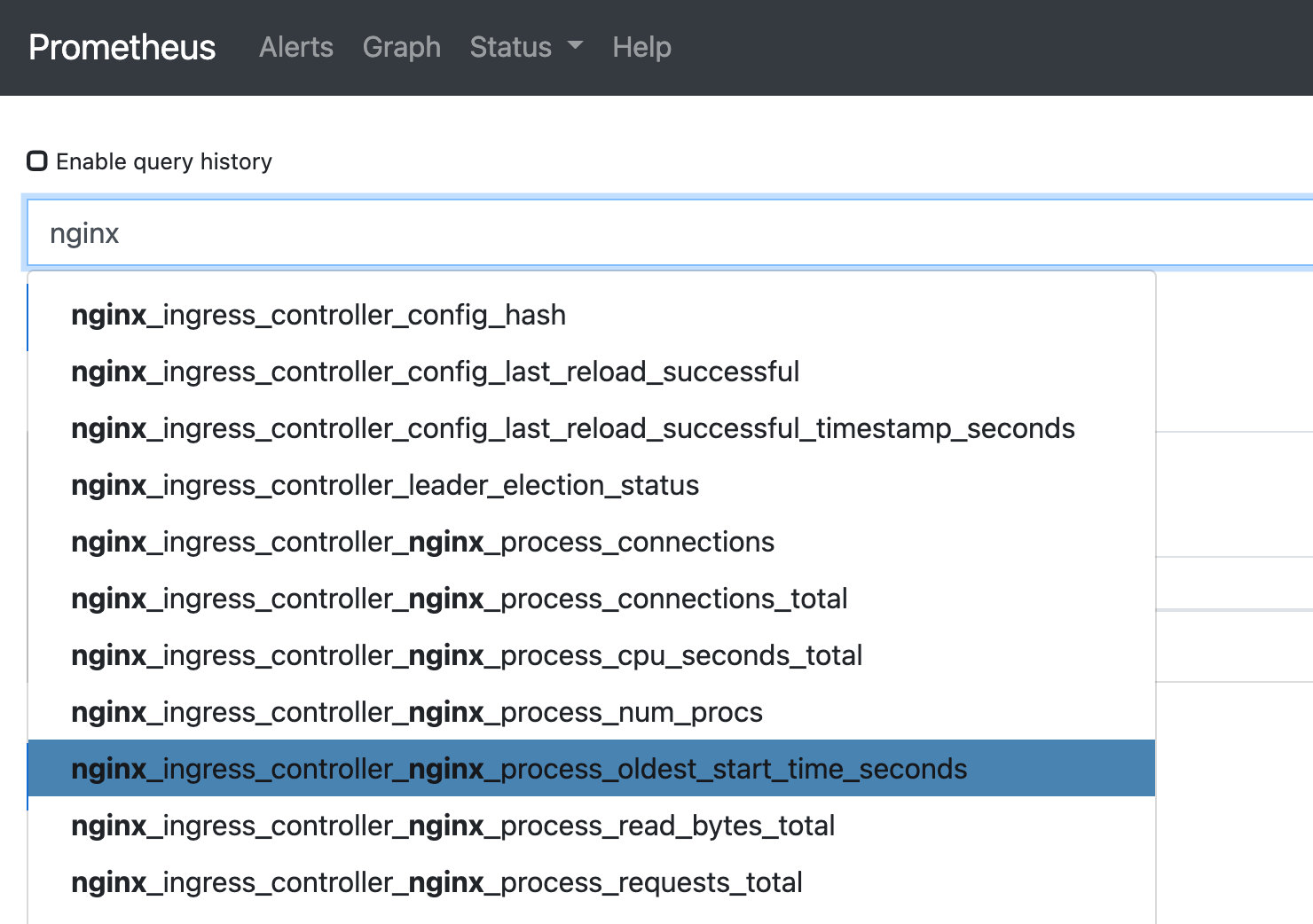

Prometheus

Ingress-nginx

https://kubernetes.github.io/ingress-nginx/user-guide/monitoring/

Modify nginx-ingress-controller

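The ingress-nginx monitoring guide describes exposing the controller's metrics port, 10254. A hedged sketch of the relevant Deployment fragment follows; the container name `controller` and the port name `metrics` are assumptions, and this is not a complete manifest.

```yaml
# Fragment of the ingress-nginx controller Deployment, to be merged into the existing spec.
spec:
  template:
    metadata:
      annotations:
        prometheus.io/scrape: "true"
        prometheus.io/port: "10254"
    spec:
      containers:
        - name: controller          # assumed container name
          # Depending on the controller version, --enable-metrics=true may also
          # need to be present in the container args.
          ports:
            - name: metrics         # assumed port name
              containerPort: 10254
              protocol: TCP
```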

Create the Service

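One possible shape for a Service that exposes the metrics port is sketched below. The Service name is hypothetical, and the selector assumes the standard `app.kubernetes.io/*` labels that the upstream ingress-nginx manifests put on the controller pods.

```yaml
# Sketch only: the name and labels are assumptions.
apiVersion: v1
kind: Service
metadata:
  name: ingress-nginx-controller-metrics   # hypothetical name
  namespace: ingress-nginx
  labels:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/component: controller
spec:
  type: ClusterIP
  selector:
    app.kubernetes.io/name: ingress-nginx
    app.kubernetes.io/component: controller
  ports:
    - name: metrics
      port: 10254
      targetPort: 10254
      protocol: TCP
```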

Verify


Create the ServiceMonitor

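A ServiceMonitor for the Prometheus Operator might look like the sketch below. It assumes a Service in the `ingress-nginx` namespace that carries the labels shown and exposes a port named `metrics`. The `release: prometheus` label is hypothetical and only matters if your Prometheus instance selects ServiceMonitors by label.

```yaml
# Sketch only: labels, port name, and interval are assumptions.
apiVersion: monitoring.coreos.com/v1
kind: ServiceMonitor
metadata:
  name: ingress-nginx-controller
  namespace: ingress-nginx
  labels:
    release: prometheus            # hypothetical; match your Prometheus serviceMonitorSelector
spec:
  namespaceSelector:
    matchNames:
      - ingress-nginx
  selector:
    matchLabels:
      app.kubernetes.io/name: ingress-nginx
      app.kubernetes.io/component: controller
  endpoints:
    - port: metrics                # name of the Service port, not the number
      interval: 30s
```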

The metrics become visible once data has been collected for a while.

Enhanced exporter

https://github.com/martin-helmich/prometheus-nginxlog-exporter, which relies on access.log to produce per-status statistics

Deploy it as a sidecar of nginx-ingress-controller

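The sidecar idea can be sketched as follows: the controller writes its access log to a shared `emptyDir`, and the exporter container reads the file from the same volume. The image tag, the log path `/var/log/nginx-shared`, the ConfigMap name `nginxlog-exporter-config`, and the listen port `4040` are assumptions to check against the exporter's README and your own setup.

```yaml
# Fragment of the controller Deployment pod spec; paths, names, and ports are assumptions.
spec:
  template:
    spec:
      containers:
        - name: controller
          volumeMounts:
            - name: nginx-logs
              mountPath: /var/log/nginx-shared      # hypothetical shared log directory
        - name: nginxlog-exporter
          image: quay.io/martinhelmich/prometheus-nginxlog-exporter:v1   # assumed tag
          args: ["-config-file", "/etc/nginxlog-exporter/config.yml"]
          ports:
            - name: log-metrics
              containerPort: 4040                   # assumed exporter listen port
          volumeMounts:
            - name: nginx-logs
              mountPath: /var/log/nginx-shared
              readOnly: true
            - name: exporter-config
              mountPath: /etc/nginxlog-exporter
      volumes:
        - name: nginx-logs
          emptyDir: {}
        - name: exporter-config
          configMap:
            name: nginxlog-exporter-config          # hypothetical ConfigMap holding config.yml
```

An `emptyDir` is used here because both containers live in the same pod and the log does not need to survive pod restarts.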

Configure the ConfigMap ingress-nginx-controller in namespace ingress-nginx. For reference:

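For a log-based exporter to read the access log, the controller has to write it to a real file rather than to stdout. A minimal sketch using the `access-log-path` ConfigMap key is shown below; the path is hypothetical and must match whatever directory is shared with the exporter. Merge the key into the existing ConfigMap rather than replacing its other entries.

```yaml
# Sketch only: the log path is an assumption.
apiVersion: v1
kind: ConfigMap
metadata:
  name: ingress-nginx-controller
  namespace: ingress-nginx
data:
  # Write the access log to a file on a volume shared with the exporter.
  access-log-path: /var/log/nginx-shared/access.log
  # If log-format-upstream is customised here, the exporter's configured
  # format string has to be kept in sync with it.
```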

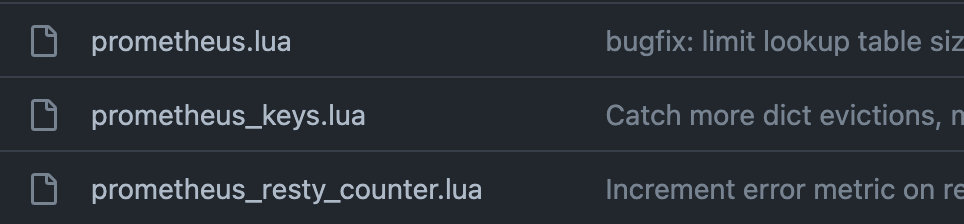

lua exporter

Reference: https://github.com/knyar/nginx-lua-prometheus

This approach relies on ingress-nginx's custom nginx.conf template, because using the lua exporter requires changes to nginx.conf.

Modify ingress-nginx-controller

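The ingress-nginx documentation describes loading a custom template by mounting a ConfigMap at `/etc/nginx/template`. A sketch of the Deployment fragment is shown below; the ConfigMap name `nginx-template` and the volume name are assumptions.

```yaml
# Fragment of the controller Deployment; ConfigMap and volume names are assumptions.
spec:
  template:
    spec:
      containers:
        - name: controller
          volumeMounts:
            - name: nginx-template-volume
              mountPath: /etc/nginx/template
              readOnly: true
      volumes:
        - name: nginx-template-volume
          configMap:
            name: nginx-template        # hypothetical ConfigMap containing the modified template
            items:
              - key: nginx.tmpl
                path: nginx.tmpl
```

The Lua files needed by the exporter can be mounted the same way from a second ConfigMap, at a directory that is on the Lua package path of the controller image.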

Get the template


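Modify the template in the http section. The exact content can be adapted as needed, but the places that need changes are roughly these: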


After modifying the template, put it into a ConfigMap, and put the Lua dependencies into a ConfigMap as well.

es

https://github.com/prometheus-community/helm-charts/tree/main/charts/prometheus-elasticsearch-exporter


values.yaml

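A hedged sketch of values for the prometheus-elasticsearch-exporter chart is shown below. The Elasticsearch URL assumes an ECK-managed cluster named `quickstart` in the `default` namespace, and the `release: prometheus` label is hypothetical. Credentials are deliberately left out and have to be supplied in whatever way your chart version supports. Verify the key names against the chart's own values.yaml.

```yaml
# Sketch only: the URL, labels, and interval are assumptions.
es:
  # ECK exposes Elasticsearch over HTTPS on the <cluster-name>-es-http Service.
  uri: https://quickstart-es-http.default.svc:9200
  # ECK uses a self-signed CA by default; skipping verification is only for testing.
  sslSkipVerify: true

serviceMonitor:
  enabled: true
  interval: 30s
  labels:
    release: prometheus      # hypothetical; match your Prometheus serviceMonitorSelector
```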

ServiceMonitor


kafka

Based on jmxPrometheusExporter

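The `jmxPrometheusExporter` type matches the metrics configuration used by the Strimzi Kafka operator, so the sketch below assumes Strimzi is in use. These notes do not name the operator, so treat that as an assumption. The cluster name `my-cluster`, the ConfigMap name `kafka-metrics`, and its key are hypothetical.

```yaml
# Fragment of a Strimzi Kafka resource; assumes Strimzi, and all names are hypothetical.
apiVersion: kafka.strimzi.io/v1beta2
kind: Kafka
metadata:
  name: my-cluster
spec:
  kafka:
    metricsConfig:
      type: jmxPrometheusExporter
      valueFrom:
        configMapKeyRef:
          name: kafka-metrics               # ConfigMap holding the JMX exporter rules
          key: kafka-metrics-config.yml
    # replicas, listeners, storage, and the rest of the spec are omitted here
```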

PodMonitor

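A PodMonitor that scrapes the JMX exporter endpoint of the Kafka pods could look like the sketch below. It assumes Strimzi-managed pods, which carry the `strimzi.io/kind` label and expose a port named `tcp-prometheus`. The namespace `kafka` and the `release` label are hypothetical.

```yaml
# Sketch only: assumes Strimzi pod labels and port naming; the namespace is hypothetical.
apiVersion: monitoring.coreos.com/v1
kind: PodMonitor
metadata:
  name: kafka-resources-metrics
  labels:
    release: prometheus          # hypothetical; match your Prometheus podMonitorSelector
spec:
  namespaceSelector:
    matchNames:
      - kafka                    # hypothetical namespace of the Kafka cluster
  selector:
    matchExpressions:
      - key: strimzi.io/kind
        operator: In
        values: ["Kafka"]
  podMetricsEndpoints:
    - path: /metrics
      port: tcp-prometheus       # metrics port name on Strimzi Kafka pods
```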

longhorn


Check the diskUUID of the Node


Check for the error "the replica scheduling will fail"
