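Installing Karmada

Installing with the Helm chart

Helm chart: https://github.com/karmada-io/karmada/tree/release-1.3/charts

karmada.yaml (image addresses changed)

Note on the certificate problem with the Helm chart installation: the pull-mode agent cannot connect to the host cluster.

Download the dependencies

This creates a charts directory under the Helm chart directory and downloads the corresponding dependency .tgz packages into it.

Install

Uninstall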

Installing kubectl-karmada

Download it from https://github.com/karmada-io/karmada/releases

Move the kubectl-karmada executable to a directory on your PATH.

Installing the agent

On the host cluster

In karmada-apiserver.config, change server to https://host-server-ip:5443
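The server change described above can also be scripted. The following is a minimal sketch, assuming the kubeconfig is plain text with a single-line `server:` entry. The function name `set_server`, the sample kubeconfig, and the address 192.0.2.10 are illustrative assumptions, not part of the original setup.

```python
import re


def set_server(kubeconfig_text: str, host_ip: str, port: int = 5443) -> str:
    """Return the kubeconfig text with every `server:` value replaced."""
    new_url = f"https://{host_ip}:{port}"
    # Keep the original indentation and key, replace only the value.
    pattern = r"(?m)^([ \t]*server:[ \t]*).*$"
    return re.sub(pattern, lambda m: m.group(1) + new_url, kubeconfig_text)


# Hypothetical kubeconfig fragment, for illustration only.
example = """apiVersion: v1
clusters:
- cluster:
    server: https://karmada-apiserver.karmada-system.svc.cluster.local:5443
  name: karmada-apiserver
"""

updated = set_server(example, "192.0.2.10")
print(updated)
```

Only the `server:` line changes; certificates and user entries are left untouched.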

On the agent cluster

agent.yaml: fill in the certificate fields from karmada-apiserver.config (the values there must be base64-decoded first), and set clusterName for each worker cluster.
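The base64 decoding step can be sketched as follows. This assumes the certificate values are stored in the standard kubeconfig fields (`certificate-authority-data`, `client-certificate-data`, `client-key-data`). The helper name and the sample value are hypothetical, and the sample is not a real certificate.

```python
import base64


def decode_kubeconfig_field(value_b64: str) -> str:
    """Decode a base64 kubeconfig field into PEM text."""
    return base64.b64decode(value_b64).decode("utf-8")


# Fake PEM body used only to demonstrate the round trip.
fake_pem = "-----BEGIN CERTIFICATE-----\nMIIB...\n-----END CERTIFICATE-----\n"
sample_b64 = base64.b64encode(fake_pem.encode("utf-8")).decode("ascii")

pem = decode_kubeconfig_field(sample_b64)
print(pem)
```

The decoded PEM text is what goes into the certificate fields of agent.yaml.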

Install

Test

On the worker cluster

On the host cluster

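Installing ANP

For installing ANP, see https://karmada.io/docs/userguide/clustermanager/working-with-anp

Without it, errors will occur.

ANP is installed so that member clusters joined in pull mode and the Karmada control plane can reach each other over the network. Only then can karmada-aggregated-apiserver reach the member clusters, which lets users access member clusters through Karmada.

Build the proxy image and certificates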

Deploying the proxy

Server

proxy-server.yaml: place it in the project root directory.

replace-proxy-server.sh: the certificate replacement script.

Deploy to the host cluster

Agent

proxy-agent.yaml

replace-proxy-agent.sh

Run the script

Modify the karmada-agent deployment

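Add:

- --cluster-api-endpoint: the API endpoint of the Kubernetes cluster; you can find it in the cluster's kubeconfig.

- --proxy-server-address http://<proxy-server-ip>:8088: the address through which requests reach the proxy server.

Port 8088 can be changed in the source code:

https://github.com/mrlihanbo/apiserver-network-proxy/blob/v0.0.24/dev/cmd/server/app/server.go#L267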

Testing the Karmada deployment

nginx.yaml

Deploy

Verify that the pods are distributed

Result (verifying ANP)

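Submariner

Karmada uses Submariner to connect the networks of member clusters. Submariner flattens the network between the connected clusters and provides IP reachability between Pods and Services, independent of the network plugin (CNI).

The Pod CIDRs and Service CIDRs of the member clusters must be different and must not overlap.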
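Before installing, it can help to check the CIDR requirement mechanically. The sketch below uses Python's standard `ipaddress` module; the cluster names and CIDR values are made-up examples, so substitute the ranges of your own member clusters.

```python
import ipaddress
from itertools import combinations


def find_overlaps(cidrs: dict[str, str]) -> list[tuple[str, str]]:
    """Return every pair of labels whose networks overlap."""
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in cidrs.items()}
    return [
        (a, b)
        for a, b in combinations(nets, 2)
        if nets[a].overlaps(nets[b])
    ]


# Example values only (assumed, not taken from a real cluster).
cidrs = {
    "member1-pod": "10.42.0.0/16",
    "member1-svc": "10.43.0.0/16",
    "member2-pod": "10.44.0.0/16",
    "member2-svc": "10.45.0.0/16",
}

overlaps = find_overlaps(cidrs)
print(overlaps or "no overlapping CIDRs")
```

An empty result means the ranges are pairwise disjoint; any returned pair has to be fixed before connecting the clusters.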

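Installing with Helm

See https://submariner.io/operations/deployment/helm/

Preparation

Prepare the charts

Prepare the images

There is currently no officially supported offline installation method, so the required images have to be prepared in advance.

Install subctl

Download it from https://github.com/submariner-io/releases/releases

On the host cluster

Configure the member cluster nodes

Configuring one node in each member cluster is enough.

References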

- https://github.com/submariner-io/submariner/issues/1926#issuecomment-1188216877

- https://submariner.io/operations/nat-traversal/

- https://github.com/submariner-io/submariner/issues/1649

Errors you may encounter

The gateway pod is missing

Host cluster

Member clusters: install the operator

values.yaml: fill in clusterId, clusterCidr, and serviceCidr according to the actual member cluster. On k3s you can find them with cat /etc/systemd/system/k3s.service
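Reading the CIDRs out of the k3s unit file can be sketched like this. It assumes the cluster was started with the k3s `--cluster-cidr` and `--service-cidr` server flags, and falls back to the k3s defaults (10.42.0.0/16 and 10.43.0.0/16) when a flag is absent. The function name and the sample unit text are illustrative.

```python
K3S_DEFAULTS = {"cluster-cidr": "10.42.0.0/16", "service-cidr": "10.43.0.0/16"}


def k3s_cidrs(unit_text: str) -> dict[str, str]:
    """Extract cluster/service CIDRs from the text of k3s.service."""
    # Drop quotes and line-continuation backslashes, then split into tokens.
    cleaned = unit_text.translate(str.maketrans({"'": " ", '"': " ", "\\": " "}))
    tokens = cleaned.split()
    found = dict(K3S_DEFAULTS)
    for i, tok in enumerate(tokens):
        for flag in K3S_DEFAULTS:
            if tok.startswith(f"--{flag}="):
                # Form: --cluster-cidr=10.44.0.0/16
                found[flag] = tok.split("=", 1)[1]
            elif tok == f"--{flag}" and i + 1 < len(tokens):
                # Form: --service-cidr 10.45.0.0/16 (value is the next token)
                found[flag] = tokens[i + 1]
    return found


# Hypothetical unit file excerpt.
sample = """[Service]
ExecStart=/usr/local/bin/k3s \\
    server \\
        '--cluster-cidr=10.44.0.0/16' \\
        '--service-cidr' \\
        '10.45.0.0/16' \\
"""

result = k3s_cidrs(sample)
print(result)
```

The `cluster-cidr` value maps to clusterCidr and the `service-cidr` value to serviceCidr in values.yaml.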

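Verification

You can collect the kubeconfigs of the member clusters on the host cluster and adjust them there.

Check the installation and configuration to troubleshoot problems

When everything works, Pods and Services in member clusters A and B can reach each other directly.

Pods can reach each other directly

Services rely on service export

Deploy a ClusterIP Service

Verify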

原理

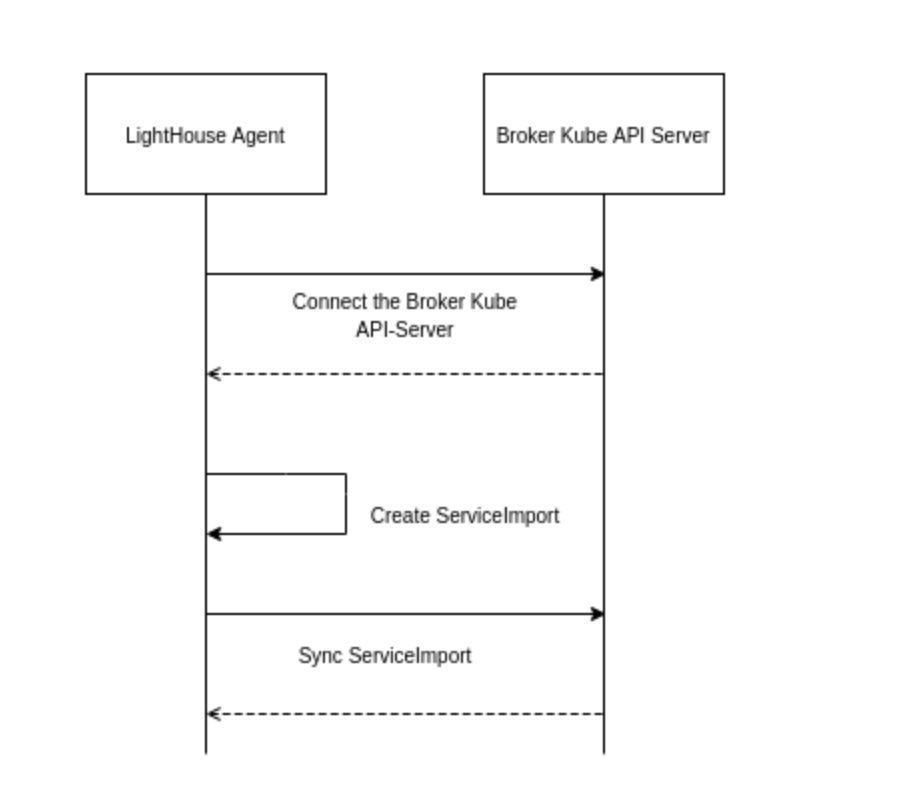

根据submariner官方文档,Lighthouse Agent会自动做这件事

把上面内容放到mcs.yaml

1 | |

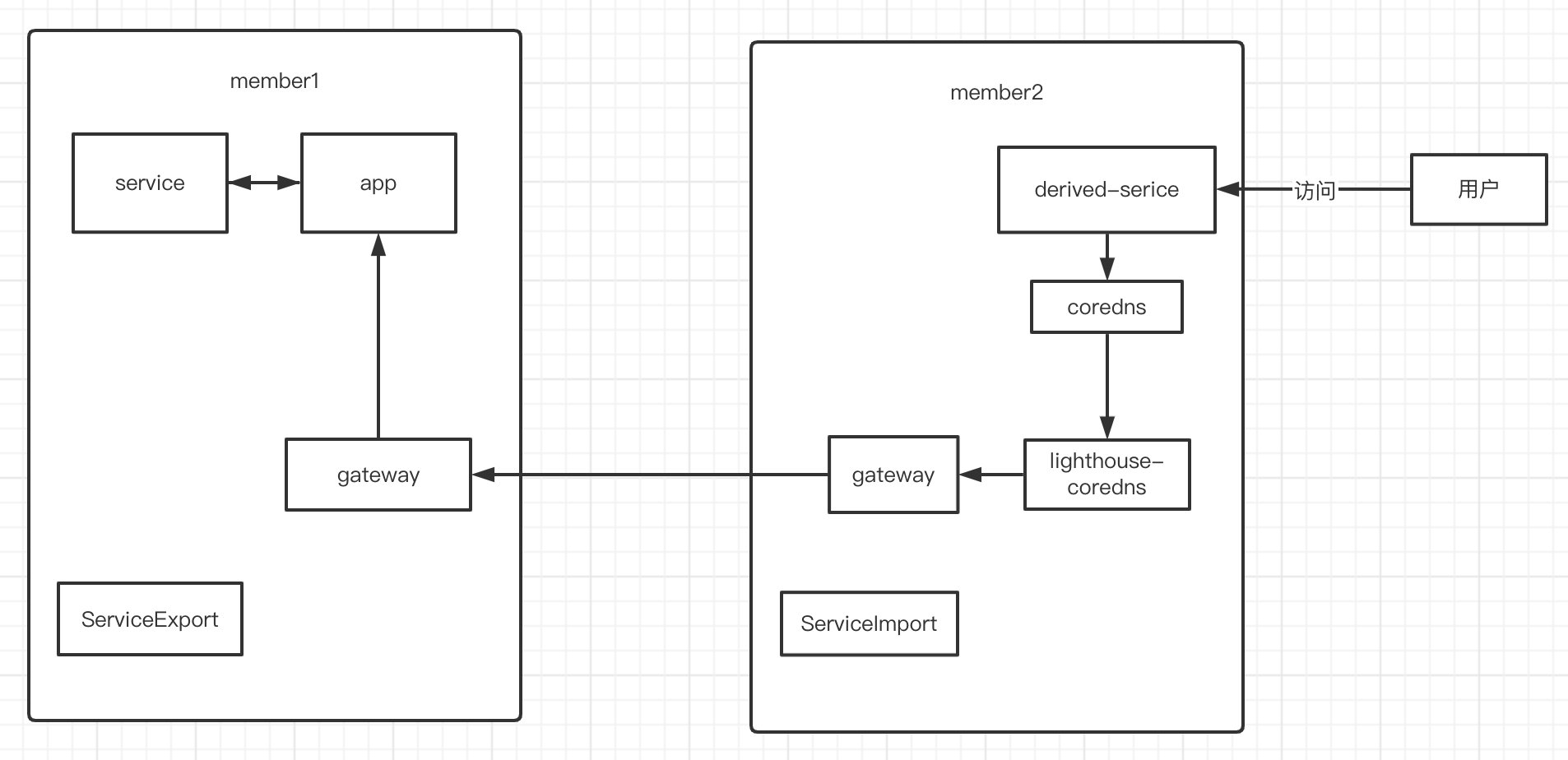

完成后在member2看到以<service-name>-<service-namespace>-<cluster-id>的ServiceImport,可以通过这个svc连接到member1

1 | |

根据Lighthouse DNS Server工作原理需要用到通过clusterset.local进行DNS转发,所以加入dnsConfig更加符合实际使用情况。
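The two naming conventions mentioned here can be written out as small helpers. This is a sketch: the ServiceImport pattern is the one quoted above, and the DNS form `<service>.<namespace>.svc.clusterset.local` is the multi-cluster service name as I understand the MCS convention. The service, namespace, and cluster names are placeholders.

```python
def service_import_name(service: str, namespace: str, cluster_id: str) -> str:
    """ServiceImport name: <service-name>-<service-namespace>-<cluster-id>."""
    return f"{service}-{namespace}-{cluster_id}"


def clusterset_dns_name(service: str, namespace: str) -> str:
    """DNS name of an exported Service under the clusterset.local zone."""
    return f"{service}.{namespace}.svc.clusterset.local"


# Placeholder names for illustration.
print(service_import_name("nginx", "default", "member1"))
print(clusterset_dns_name("nginx", "default"))
```

A client Pod in member2 would resolve the second name to reach the Service exported from member1.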

mcs_test.yaml

Verification

Multi-cluster Service Discovery (MCS)

https://karmada.io/docs/userguide/service/multi-cluster-service

Deploying the MCS example

Prerequisite: Submariner is already working.

Install the CRDs

Propagate the application to member1

Propagate the ServiceExport to member1

Propagate the ServiceImport to member2

Deploy

In member2 you can see a Service with the derived- prefix and an EndpointSlice with the imported- prefix.

Test MCS

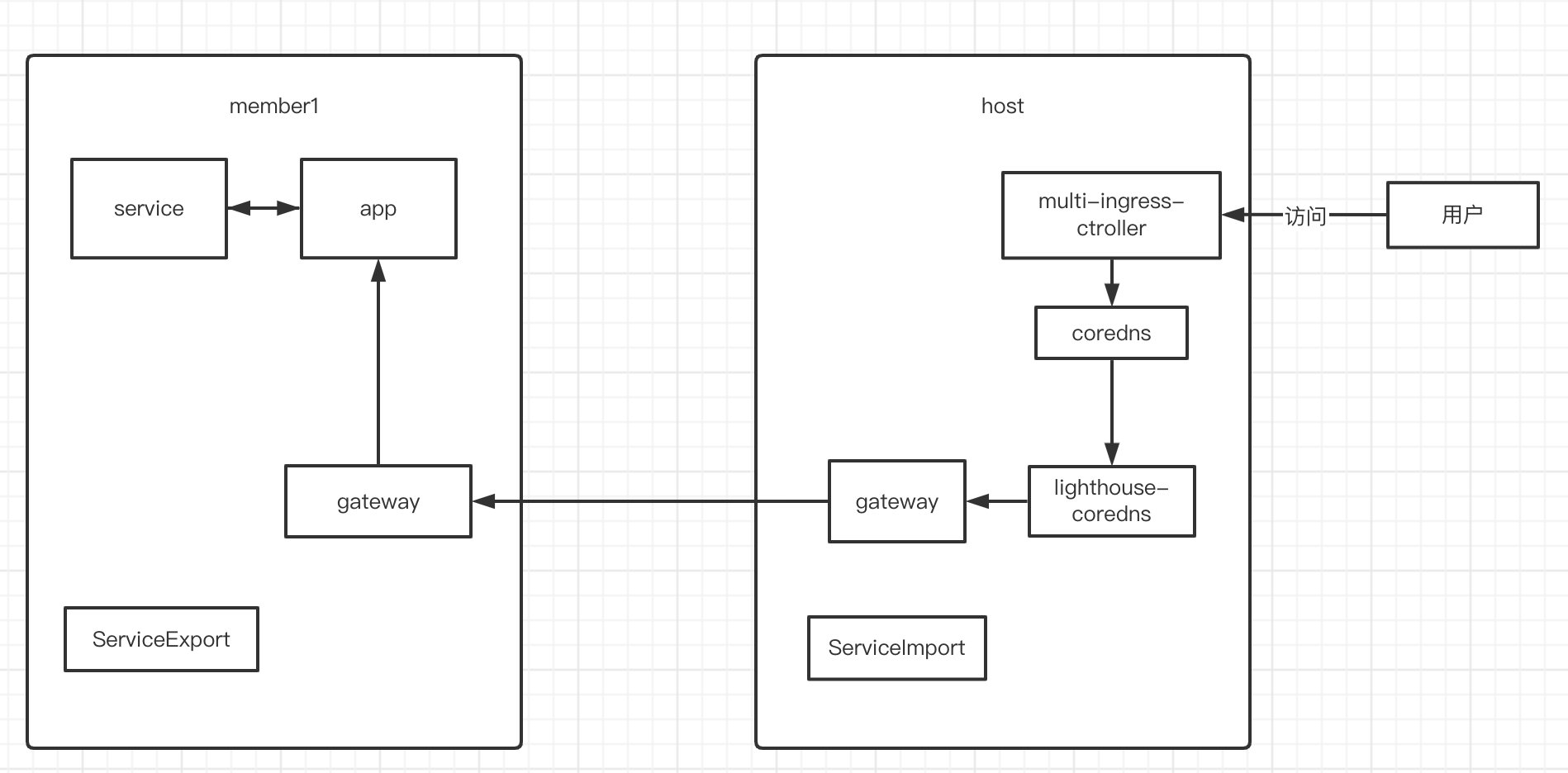

Multi-cluster Ingress (MCI)

https://karmada.io/docs/userguide/service/multi-cluster-ingress

Remove Traefik

Edit the service file with vim /etc/systemd/system/k3s.service and add the Traefik-disabling option to ExecStart.

Restart the service

multi-cluster-ingress

Build the MCI image

According to the Karmada website, the controller is a fork based on ingress controller-v1.1.1.

From the repository root, build ingress-controller/controller:1.0.0-dev; see build/dev-env.sh for reference.

Deploy

ingress_values.yaml: replace the controller image.

Deploy

In the multi-cluster-ingress-nginx project, package the Helm chart.

Deploy

To give nginx-ingress-controller permission to watch the resources it needs (multiclusteringress, endpointslices, and service), add karmada-apiserver.config to nginx-ingress-controller.

karmada-kubeconfig-secret.yaml

Modify the deployment

Example

Output

Test

ingress_test.yaml

Deploy the MCS example first, then deploy the MultiClusterIngress.

Access

Managing member clusters through the proxy API

https://karmada.io/docs/userguide/globalview/aggregated-api-endpoint

cluster-proxy-rbac.yaml

Verification

Accessing the proxy API with a Bearer token

member-proxy-rbac.yaml

Grant the ServiceAccount administrative permissions on the member cluster

cluster-proxy-rbac.yaml, using the same ServiceAccount

Apply on the host cluster

Get the ServiceAccount token
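With the token in hand, a request through the aggregated API can be built as sketched below. The path layout `/apis/cluster.karmada.io/v1alpha1/clusters/<cluster>/proxy/...` follows the Karmada aggregated API endpoint documentation linked above. The API server address, cluster name, and token are placeholders, and the code only builds the request without sending it.

```python
import urllib.request


def proxy_request(karmada_api: str, cluster: str, path: str, token: str) -> urllib.request.Request:
    """Build a request to a member cluster via the Karmada cluster proxy."""
    url = (
        f"{karmada_api.rstrip('/')}"
        f"/apis/cluster.karmada.io/v1alpha1/clusters/{cluster}/proxy/"
        f"{path.lstrip('/')}"
    )
    # The ServiceAccount token is passed as a standard Bearer credential.
    return urllib.request.Request(url, headers={"Authorization": f"Bearer {token}"})


# Placeholder values; replace with your karmada-apiserver address and token.
req = proxy_request("https://192.0.2.10:5443", "member1", "/api/v1/nodes", "<token>")
print(req.full_url)
```

Sending it would additionally require a TLS context that trusts the karmada-apiserver certificate, for example one created with `ssl.create_default_context(cafile=...)` and passed to `urllib.request.urlopen`.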

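Simplified installation

Prerequisites

- The Pod CIDRs and Service CIDRs of the clusters in the cluster set must be different and must not overlap.

- The clusters in the cluster set must be version 1.21 or later.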
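The version prerequisite can be checked with a small helper. This sketch assumes the requirement refers to the Kubernetes version and that version strings look like the `gitVersion` values reported by clusters (for example `v1.21.3+k3s1`). The function name is hypothetical.

```python
import re


def meets_minimum(version: str, minimum: tuple[int, int] = (1, 21)) -> bool:
    """Return True if the major.minor part of version is at least minimum."""
    match = re.match(r"v?(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    return (int(match.group(1)), int(match.group(2))) >= minimum


for v in ("v1.21.3+k3s1", "v1.20.15", "1.24"):
    print(v, meets_minimum(v))
```

Comparing the parts as integer tuples avoids the string-comparison trap where "1.9" would sort above "1.21".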

karmada

基于karmada1.3的helm chart进行修改,添加ANP相关部署。

镜像准备

证书准备

openssl_host.conf

1 | |

openssl_agent.conf

1 | |

生成证书

1 | |

应答填写

host.yaml

1 | |

agent.yaml

1 | |

host部署

-

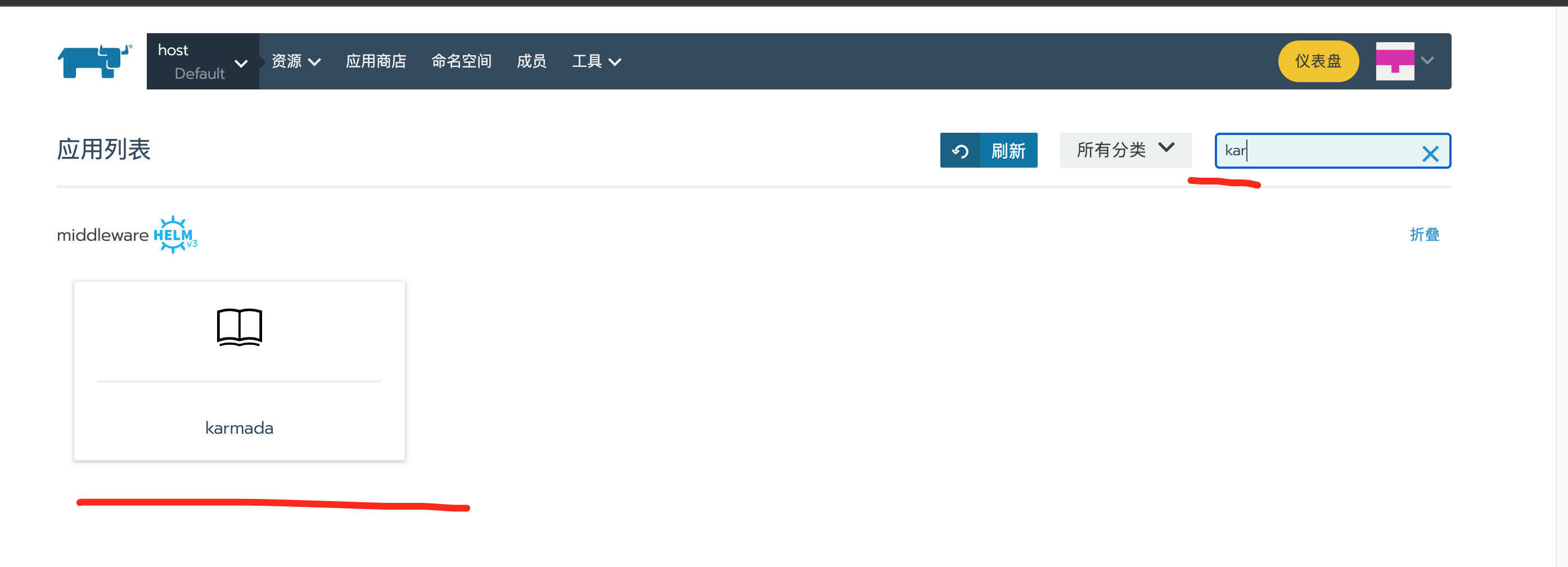

填好对应名称host为karmada,命名空间默认写karmada-system(要和上面证书对应) ,编辑yaml填入对应的应答。然后启动

    kubectl get pods -n karmada-system
    NAME                                               READY   STATUS    RESTARTS   AGE
    etcd-0                                             1/1     Running   0          4h9m
    karmada-aggregated-apiserver-f67d5c5b7-9bqqn       1/1     Running   2          4h9m
    karmada-apiserver-5cb8b8f46c-8nzcn                 1/1     Running   0          4h9m
    karmada-controller-manager-848998c768-zlmkv        1/1     Running   4          4h9m
    karmada-kube-controller-manager-658bb465ff-dmqgg   1/1     Running   0          10s
    karmada-proxy-server-6f78f4b97-nnp9b               1/1     Running   0          4h9m
    karmada-scheduler-5f54777cc-2s4bl                  1/1     Running   0          4h9m
    karmada-webhook-85b4db8877-q7xc5                   1/1     Running   2          4h9m

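Agent deployment

On the member cluster, name the deployment karmada-agent, edit the YAML to fill in the corresponding answers, then start the deployment.

Verifying that Karmada propagates applications

Run on the host

nginx.yaml, simulating a propagated workload

Deploy

Verify that the pods are distributed

Result (verifying ANP), and how to obtain karmadactl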

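MCS/MCI features

submariner-broker

Install it on the host cluster; it installs the CRDs that Submariner needs.

Once the values are filled in, it can be deployed directly.

Prepare the parameters for the operator installation. All parameters must stay consistent; adjust the commands that retrieve them to your environment.

Verification

submariner-operator

Install it on every cluster whose network needs to be connected.

values.yaml

Deploy once the values are filled in.

Check the Submariner installation

A check mark (√) indicates success and an x indicates a problem that needs troubleshooting.

Verifying MCS

mcs_crd.yaml: propagates the ServiceImport and ServiceExport resources

Deploy

The application is propagated to member1 and reached through the Service in member2.

mcs.yaml

Deploy

Verification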

ingress-nginx

ingress-nginx answers

To obtain the karmadaKubeconfig parameter, base64-encode the contents of karmada-apiserver.config.
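The encoding step might look like the following sketch. It assumes the chart expects the whole kubeconfig as a single base64 string; the helper name and the sample text are illustrative, and in practice you would read the real karmada-apiserver.config file instead.

```python
import base64


def encode_kubeconfig(kubeconfig_text: str) -> str:
    """Base64-encode kubeconfig text as one line without wrapping."""
    return base64.b64encode(kubeconfig_text.encode("utf-8")).decode("ascii")


# Stand-in for the contents of karmada-apiserver.config.
sample_config = "apiVersion: v1\nkind: Config\n"
encoded = encode_kubeconfig(sample_config)
print(encoded)
```

`base64.b64encode` does not insert line breaks, so the result can be pasted directly as the karmadaKubeconfig value.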

values.yaml

Verifying ingress-nginx

mci.yaml