Check the system version
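A minimal sketch of one way to check this, assuming a Linux host that provides `/etc/os-release`. The specific commands are illustrative choices rather than the ones originally used:

```shell
# Distribution name and version (the file exists on most modern Linux distributions).
[ -r /etc/os-release ] && cat /etc/os-release

# Kernel release and CPU architecture; an ARM64 host reports aarch64.
uname -r
arch="$(uname -m)"
echo "architecture: ${arch}"
```

The architecture string matters later because every binary and image below has to match it.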

sshd_config


docker

References

- https://www.jianshu.com/p/f62df4b85761

- https://blog.csdn.net/LG_15011399296/article/details/126119349

https://download.docker.com/linux/static/stable/aarch64/

Download the Docker static binary archive
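The download and install step could look roughly like the sketch below. The version number, the `/tmp` staging paths, and the helper names `docker_static_url` and `install_docker_static` are assumptions for illustration; pick a version that is actually listed in the directory linked above.

```shell
# Assumption: the version is an illustrative choice; use one listed at
# https://download.docker.com/linux/static/stable/aarch64/
DOCKER_VERSION="${DOCKER_VERSION:-20.10.17}"

# Build the download URL for a given architecture and version.
docker_static_url() {
  echo "https://download.docker.com/linux/static/stable/$1/docker-$2.tgz"
}

# Download, unpack, and copy the binaries into /usr/bin (run as root).
install_docker_static() {
  url="$(docker_static_url "$(uname -m)" "$DOCKER_VERSION")"
  curl -fsSL -o /tmp/docker.tgz "$url" || return 1
  tar -xzf /tmp/docker.tgz -C /tmp || return 1   # unpacks into /tmp/docker/
  cp /tmp/docker/* /usr/bin/
}

# Print the URL that would be fetched for an aarch64 host.
docker_static_url aarch64 "$DOCKER_VERSION"
```

Calling `install_docker_static` as root performs the actual installation; the block above only prints the URL.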

containerd.service

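A sketch of what this unit file might contain, modeled on the unit shipped by the containerd project. It assumes the static binaries were copied to `/usr/bin`; the helper name `write_containerd_unit` and the scratch directory are hypothetical, and on a real host the target would be `/etc/systemd/system`.

```shell
# Write a containerd unit file into the given directory
# (use /etc/systemd/system on a real host, as root).
write_containerd_unit() {
  cat > "$1/containerd.service" <<'EOF'
[Unit]
Description=containerd container runtime
Documentation=https://containerd.io
After=network.target local-fs.target

[Service]
ExecStartPre=-/sbin/modprobe overlay
ExecStart=/usr/bin/containerd
Type=notify
Delegate=yes
KillMode=process
Restart=always
RestartSec=5
LimitNPROC=infinity
LimitCORE=infinity
LimitNOFILE=infinity
TasksMax=infinity
OOMScoreAdjust=-999

[Install]
WantedBy=multi-user.target
EOF
}

# Demonstration: render the unit into a scratch directory and print it.
unit_dir="$(mktemp -d)"
write_containerd_unit "$unit_dir"
cat "$unit_dir/containerd.service"
```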

docker.socket

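A sketch of a socket unit, modeled on the one in the upstream Docker packaging. The helper name `write_docker_socket` and the scratch directory are hypothetical. Note that `SocketGroup=docker` only works if a `docker` group exists, which a static-binary install does not create for you.

```shell
# Write a docker.socket unit into the given directory
# (use /etc/systemd/system on a real host, as root).
write_docker_socket() {
  cat > "$1/docker.socket" <<'EOF'
[Unit]
Description=Docker Socket for the API

[Socket]
ListenStream=/run/docker.sock
SocketMode=0660
SocketUser=root
SocketGroup=docker

[Install]
WantedBy=sockets.target
EOF
}

# Demonstration: render the unit into a scratch directory and print it.
unit_dir="$(mktemp -d)"
write_docker_socket "$unit_dir"
cat "$unit_dir/docker.socket"
```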

docker.service

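A sketch of a service unit, modeled on the upstream Docker unit and assuming `dockerd` lives in `/usr/bin` and containerd runs as its own service. The helper name `write_docker_service` and the scratch directory are hypothetical.

```shell
# Write a docker.service unit into the given directory
# (use /etc/systemd/system on a real host, as root).
write_docker_service() {
  # The quoted heredoc keeps $MAINPID literal for systemd to expand.
  cat > "$1/docker.service" <<'EOF'
[Unit]
Description=Docker Application Container Engine
Documentation=https://docs.docker.com
After=network-online.target docker.socket containerd.service
Wants=network-online.target
Requires=docker.socket containerd.service

[Service]
Type=notify
ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock
ExecReload=/bin/kill -s HUP $MAINPID
TimeoutStartSec=0
RestartSec=2
Restart=always
LimitNOFILE=infinity
LimitNPROC=infinity
LimitCORE=infinity
TasksMax=infinity
Delegate=yes
KillMode=process
OOMScoreAdjust=-500

[Install]
WantedBy=multi-user.target
EOF
}

# Demonstration: render the unit into a scratch directory and print it.
unit_dir="$(mktemp -d)"
write_docker_service "$unit_dir"
cat "$unit_dir/docker.service"
```

`-H fd://` tells dockerd to take its listening socket from systemd, which is why the unit requires `docker.socket`.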

Start Docker
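Assuming the three unit files above are installed under `/etc/systemd/system`, starting Docker might look like the sketch below. The function name `start_docker` is hypothetical, and the `groupadd` line is only needed when `docker.socket` sets `SocketGroup=docker`.

```shell
# Reload unit files, then enable and start containerd and docker (run as root).
start_docker() {
  # The socket unit fails to start if its group does not exist.
  getent group docker >/dev/null || groupadd docker || return 1
  systemctl daemon-reload || return 1
  systemctl enable --now containerd.service docker.socket docker.service || return 1
  # Confirms that the client can reach the daemon.
  docker version
}
```

Run `start_docker` as root; if it fails, `journalctl -u docker.service` usually shows the reason.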

buildx

Using buildx

Example
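A minimal sketch of a multi-platform build, shown here as a stand-in example. The builder name `multiarch`, the function name `build_multiarch`, and the default platform list are assumptions; the image reference is whatever you pass in.

```shell
# Assumptions: builder name and default platform list are placeholders.
build_multiarch() {
  image="$1"                                   # e.g. registry.example.com/repo/app:1.0
  platforms="${2:-linux/amd64,linux/arm64}"
  # Create the builder on first use; later calls find it and skip creation.
  docker buildx inspect multiarch >/dev/null 2>&1 \
    || docker buildx create --name multiarch || return 1
  docker buildx use multiarch || return 1
  # Build for every listed platform and push the result under one tag.
  docker buildx build --platform "$platforms" -t "$image" --push .
}
```

Run it from a directory containing a Dockerfile, for example `build_multiarch registry.example.com/repo/app:1.0`. Building for a foreign architecture generally requires QEMU emulation (binfmt_misc) to be registered on the host.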

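Docker Desktop on macOS and Windows, and docker installed from deb or rpm packages on Linux distributions, already include buildx, so no separate installation is needed. See the official installation guide.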

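If your docker has no buildx command, you can install it from a binary release:

- First, find the binary for your platform on the release page of the Docker buildx project.

- Download the binary and rename it to docker-buildx, then move it to docker's plugin directory `~/.docker/cli-plugins`.

- Grant execute permission to the binary.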
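The three steps above can be sketched as follows. The version `v0.9.1` and the helper names `buildx_url` and `install_buildx` are assumptions; check the release page for the version you actually want.

```shell
# Assumption: illustrative version; pick one from the buildx release page.
BUILDX_VERSION="${BUILDX_VERSION:-v0.9.1}"

# Release assets are named buildx-<version>.linux-<arch>.
buildx_url() {
  echo "https://github.com/docker/buildx/releases/download/$1/buildx-$1.linux-$2"
}

# Download into the CLI plugin directory under the name docker-buildx
# and make it executable.
install_buildx() {
  plugin_dir="$HOME/.docker/cli-plugins"
  mkdir -p "$plugin_dir" || return 1
  curl -fsSL -o "$plugin_dir/docker-buildx" \
    "$(buildx_url "$BUILDX_VERSION" arm64)" || return 1
  chmod +x "$plugin_dir/docker-buildx"
}

# Print the URL that would be fetched for an arm64 host.
buildx_url "$BUILDX_VERSION" arm64
```

After running `install_buildx`, `docker buildx version` should report the installed plugin.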

kubectl

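One way to install an arm64 kubectl is to fetch the official release binary, as in this sketch. The version shown is an assumption and should match the Kubernetes version of your cluster; `kubectl_url` and `install_kubectl` are hypothetical helper names.

```shell
# Assumption: illustrative version; match it to the cluster's Kubernetes version.
KUBECTL_VERSION="${KUBECTL_VERSION:-v1.23.6}"

# Official release binaries are published under dl.k8s.io.
kubectl_url() {
  echo "https://dl.k8s.io/release/$1/bin/linux/$2/kubectl"
}

# Download the binary and install it with execute permission (run as root).
install_kubectl() {
  curl -fsSL -o /tmp/kubectl "$(kubectl_url "$KUBECTL_VERSION" arm64)" || return 1
  install -m 0755 /tmp/kubectl /usr/local/bin/kubectl || return 1
  kubectl version --client
}

# Print the URL that would be fetched for an arm64 host.
kubectl_url "$KUBECTL_VERSION" arm64
```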

docker-compose

https://github.com/zhangguanzhang/docker-compose-aarch64/releases

rke

Download rke from https://github.com/rancher/rke/releases/tag/v1.3.11

-

下载rke 镜像时候

要使用

1

docker pull --platform=arm64 镜像名查看需要下载的镜像,注意版本

1

rke config --system-images --all -

检查cni插件

1

2# 看看这个目录是否是空的 ll /opt/cni/bin -

rancher/calico-node不支持arm版本,遇到

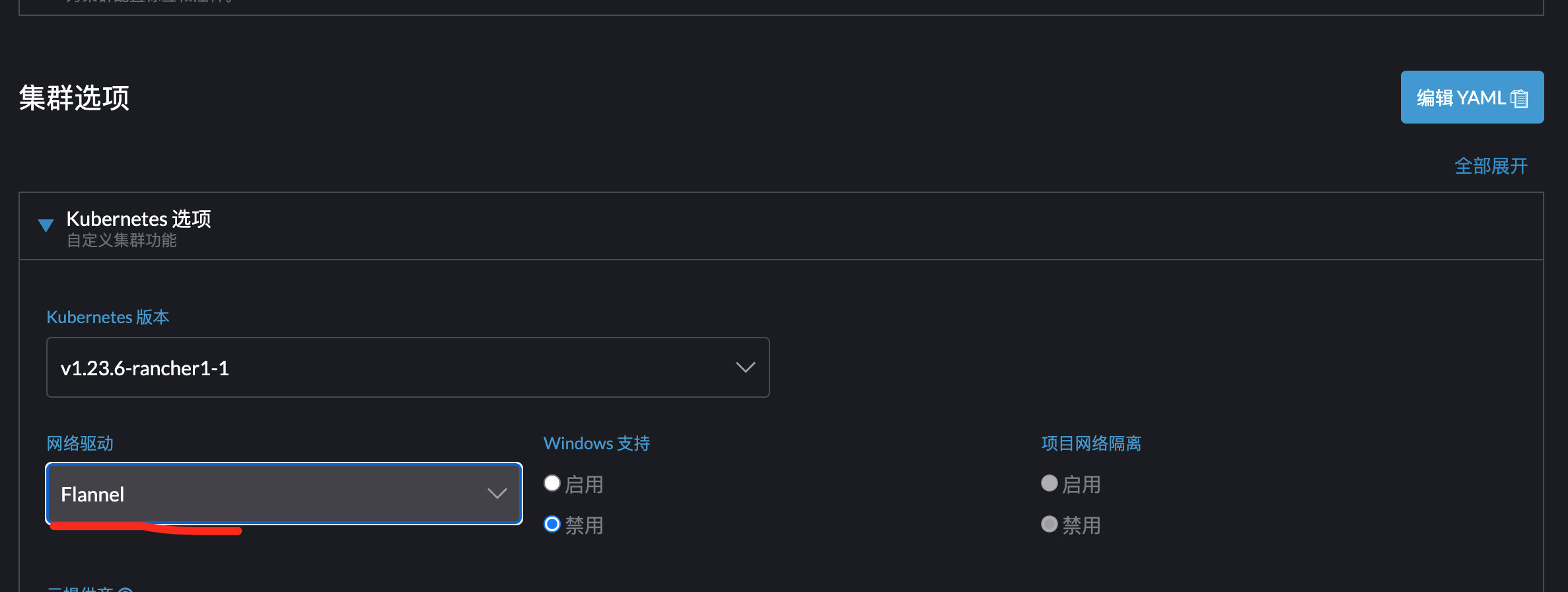

standard_init_linux.go:220: exec user process caused"exec format error"修改cluster.yml网络插件为flannel,默认是canal

1

2

3# 设置flannel网络插件 network: plugin: flannelUI创建集群时候网络驱动要选flannel

- coredns error

      [INFO] plugin/ready: Still waiting on: "kubernetes"
      [INFO] plugin/ready: Still waiting on: "kubernetes"
      [INFO] plugin/ready: Still waiting on: "kubernetes"
      Still waiting on: "kubernetes"
      HINFO: read udp 114.114.114.114:53: i/o timeout
      Still waiting on: "kubernetes"
      HINFO: read udp 114.114.114.114:53: i/o timeout
      Still waiting on: "kubernetes"
      HINFO: read udp 114.114.114.114:53: i/o timeout

  This is probably caused by the iptables configuration. Run the node cleanup script, then reinstall.

  - https://github.com/coredns/coredns/discussions/4990

  - https://github.com/coredns/coredns/issues/2693

- Worker nodes cannot be created

  The kubelet log shows a large number of errors like `dial tcp 127.0.0.1:6443: connect: connection refused`:

      E0106 08:40:08.885824 275376 controller.go:144] failed to ensure lease exists, will retry in 200ms, error: Get "https://127.0.0.1:6443/apis/coordination.k8s.io/v1/namespaces/kube-node-lease/leases/xc-10-81-133-2?timeout=10s": dial tcp 127.0.0.1:6443: connect: connection refused

  The cause is nginx-proxy: one of its binaries was compiled for the wrong architecture, so nginx-proxy did not start properly and nothing was listening on 6443.

      /usr/bin/nginx-proxy: line 4: /usr/bin/confd: cannot execute binary file: Exec format error
      2023/01/09 02:47:22 [notice] 9#9: using the "epoll" event method
      2023/01/09 02:47:22 [notice] 9#9: nginx/1.21.0
      2023/01/09 02:47:22 [notice] 9#9: built by gcc 10.2.1 20201203 (Alpine 10.2.1_pre1)
      2023/01/09 02:47:22 [notice] 9#9: OS: Linux 4.19.90-24.4.v2101.ky10.aarch64
      2023/01/09 02:47:22 [notice] 9#9: getrlimit(RLIMIT_NOFILE): 1048576:1048576
      2023/01/09 02:47:22 [notice] 9#9: start worker processes
      2023/01/09 02:47:22 [notice] 9#9: start worker process 10

  As described in the related issue, obtain a correctly compiled confd and commit a new image:

      docker pull jerrychina2020/rke-tools:v0.1.78-linux-arm64

      # Run the following steps on the arm64 machine.
      # Keep this container running and run docker cp from a second terminal.
      docker run -it --rm --name xxx jerrychina2020/rke-tools:v0.1.78-linux-arm64

      # Get the compiled confd
      docker cp xxx:/usr/bin/confd .

      # Exit the first container, then start the target image under the same name
      docker run -it --rm --name xxx rancher/rke-tools:v0.1.80

      docker cp confd xxx:/usr/bin/confd

      # Commit while the container is still running (--rm removes it on exit)
      docker commit xxx rancher/rke-tools:v0.1.80

nfs


harbor

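Build 2.3.0 from https://github.com/goharbor/harbor-arm. It is best to build on an arm64 machine with internet access, and a few errors and omissions in the repository have to be fixed along the way.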

The build only runs correctly after applying the fix described in https://github.com/goharbor/harbor-arm/issues/37.

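The first run of `make compile_redis` requires commenting out part of the build.

The detailed log is written to the path given by `LOGFILE`, for example `LOGFILE=/tmp/redis.Ek4eRN/stage/LOGS/redis.log`. This is a temporary path.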

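For multi-arch support, the Harbor version must be 2.0.0 or later, and pushing requires `docker manifest`.

Example

With a self-signed HTTPS certificate, use `--insecure`. The `-p, --purge` option deletes the local multi-arch manifest list after the push succeeds.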

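- Create a manifest list locally for the images of the different architectures, all under the same image:tag (for example myprivateregistry.mycompany.com/repo/image:1.0). The per-architecture images must already be pushed to the registry; local-only images are not enough and creation will fail.

- The push itself only uploads the contents of the manifest list.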
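The manifest workflow described above might look like the following sketch. The per-architecture tag suffixes `-amd64` and `-arm64` and the function name `push_manifest_list` are assumptions for illustration; the list name is the shared image:tag.

```shell
# Assumption: per-architecture images are tagged <list>-amd64 and <list>-arm64
# and have already been pushed to the registry.
push_manifest_list() {
  list="$1"    # e.g. myprivateregistry.mycompany.com/repo/image:1.0
  # Create the local manifest list that points at the per-arch images.
  docker manifest create --insecure "$list" \
    "${list}-amd64" "${list}-arm64" || return 1
  # Record the platform of the arm64 entry explicitly.
  docker manifest annotate --os linux --arch arm64 \
    "$list" "${list}-arm64" || return 1
  # Upload the list and delete the local copy afterwards.
  docker manifest push --insecure --purge "$list"
}
```

For example: `push_manifest_list myprivateregistry.mycompany.com/repo/image:1.0`. Drop `--insecure` when the registry has a trusted certificate.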