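Installing Rancher 2.6.5 (Docker single-node edition)

Preparing the image registry

The registry must use HTTPS, and its SSL certificate must carry a SAN (Subject Alternative Name). Otherwise you get `x509: certificate relies on legacy Common Name field`. This comes from Go 1.15 and later, which no longer accept the CN (CommonName) field for host name verification.

You need to create a new, valid certificate that includes the subjectAltName attribute. When you create the self-signed SSL certificate with openssl, add it directly with the `-addext` flag.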
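
The following is a minimal sketch of that openssl call. It assumes OpenSSL 1.1.1 or later, the release that introduced `-addext`. The host name `harbor.example.com`, the IP `192.168.0.10` and the `./cert` output directory are hypothetical placeholders; in this setup the files end up under /root/harbor/cert.

```bash
# Self-signed Harbor certificate that carries subjectAltName (sketch).
# HARBOR_HOST / HARBOR_IP are hypothetical placeholders.
set -e
HARBOR_HOST="${HARBOR_HOST:-harbor.example.com}"
HARBOR_IP="${HARBOR_IP:-192.168.0.10}"
CERT_DIR="${CERT_DIR:-./cert}"

mkdir -p "$CERT_DIR"
# -addext needs OpenSSL >= 1.1.1; -nodes leaves the private key unencrypted
openssl req -x509 -newkey rsa:4096 -sha256 -days 3650 -nodes \
  -keyout "$CERT_DIR/$HARBOR_HOST.key" \
  -out "$CERT_DIR/$HARBOR_HOST.crt" \
  -subj "/CN=$HARBOR_HOST" \
  -addext "subjectAltName=DNS:$HARBOR_HOST,IP:$HARBOR_IP"

# Print the SAN extension to confirm it is present
openssl x509 -in "$CERT_DIR/$HARBOR_HOST.crt" -noout -text | grep -A1 'Subject Alternative Name'
```

The SAN lists both the DNS name and the IP, so clients pass the SAN check whether they reach Harbor by name or by address.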

Required images

Docker command

Put the generated certificates (`*.key`, `*.crt`) under /root/harbor/cert, then mount that directory into the container at /container/certs.

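Parameters explained

Loading system-charts: the charts are already bundled in the rancher image. This variable tells Rancher to use that local copy instead of trying to fetch the charts from GitHub.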

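Custom CA Root Certificates: follow the Docker configuration in that document. This is where you configure the self-signed certificates of the services Rancher needs to reach. Without it you get `x509: certificate signed by unknown authority`.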
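
The certificate mount, system-charts and registry settings come together in one start command, sketched below. The container name `rancher` and the registry `harbor.example.com` are assumptions. The environment variable names `CATTLE_SYSTEM_CATALOG` and `CATTLE_SYSTEM_DEFAULT_REGISTRY` follow Rancher's air-gapped Docker install documentation. By default the script only prints the command; set `DRY_RUN=0` to run it.

```bash
# Single-node Rancher v2.6.5 start command (sketch).
# REGISTRY and the container name "rancher" are hypothetical placeholders.
REGISTRY="${REGISTRY:-harbor.example.com}"
CERT_DIR="${CERT_DIR:-/root/harbor/cert}"

# Print the command (DRY_RUN=1, the default) or execute it (DRY_RUN=0).
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then printf '+ %s\n' "$*"; else "$@"; fi
}

start_rancher() {
  # SSL_CERT_DIR: trust the self-signed Harbor certificate mounted at /container/certs
  # CATTLE_SYSTEM_CATALOG=bundled: use the system-charts packaged in the image
  # CATTLE_SYSTEM_DEFAULT_REGISTRY: pull system images from the private registry
  run docker run -d --name rancher --restart=unless-stopped --privileged \
    -p 80:80 -p 443:443 \
    -v "$CERT_DIR:/container/certs" \
    -e SSL_CERT_DIR=/container/certs \
    -e CATTLE_SYSTEM_CATALOG=bundled \
    -e "CATTLE_SYSTEM_DEFAULT_REGISTRY=$REGISTRY" \
    "$REGISTRY/rancher/rancher:v2.6.5"
}

start_rancher
```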

Configuring the private registry

Following Private Registry Configuration, configure it inside the container.

registries.yaml
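
Here is a sketch of a registries.yaml in the k3s format. It makes three assumptions:

- the file goes to /etc/rancher/k3s/registries.yaml inside the Rancher container, the path k3s reads;
- Harbor runs at the hypothetical `harbor.example.com`;
- Harbor's CA file is mounted under /container/certs as described above.

The script writes the file to the current directory so it can be checked before copying it in.

```bash
# Write a k3s-style registries.yaml (sketch; values are placeholders).
set -e
REGISTRY="${REGISTRY:-harbor.example.com}"
REG_USER="${REG_USER:-admin}"        # hypothetical Harbor account
REG_PASS="${REG_PASS:-changeme}"     # hypothetical password
CA_FILE="${CA_FILE:-/container/certs/harbor.example.com.crt}"
OUT="${OUT:-./registries.yaml}"      # inside the container: /etc/rancher/k3s/registries.yaml

# mirrors: send docker.io pulls to Harbor
# configs: credentials and the CA used to verify Harbor's certificate
cat > "$OUT" <<EOF
mirrors:
  docker.io:
    endpoint:
      - "https://$REGISTRY"
configs:
  "$REGISTRY":
    auth:
      username: $REG_USER
      password: $REG_PASS
    tls:
      ca_file: $CA_FILE
EOF
echo "wrote $OUT"
```

It can then be copied in with `docker cp registries.yaml rancher:/etc/rancher/k3s/registries.yaml`; the container name `rancher` is again an assumption. Passwords containing YAML-special characters need quoting.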

Restart the container

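Enter the container again and add the registry to /etc/hosts; otherwise its domain name cannot be resolved. (This is the hosts file, not CoreDNS.)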
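
Below is a small helper that appends the Harbor entry only when it is missing, so it is safe to rerun after every restart. The IP and host name in the usage line are hypothetical.

```bash
# Append "IP NAME" to a hosts file unless NAME is already listed.
# usage: add_host IP NAME [FILE]   (FILE defaults to /etc/hosts)
add_host() {
  ip="$1"
  name="$2"
  file="${3:-/etc/hosts}"
  # -F: literal match (dots are not regex wildcards); -w: whole word only
  if grep -qwF -- "$name" "$file" 2>/dev/null; then
    echo "$name already in $file"
  else
    printf '%s %s\n' "$ip" "$name" >> "$file"
    echo "added: $ip $name"
  fi
}
```

Inside the container you would call, for example, `add_host 192.168.0.10 harbor.example.com`. The entry had to be re-added after a restart. Passing `--add-host harbor.example.com:192.168.0.10` to `docker run` when the container is created may be the more durable option.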

After the restart, wait for containerd to start, then check the configuration that containerd regenerated.

Test pulling an image

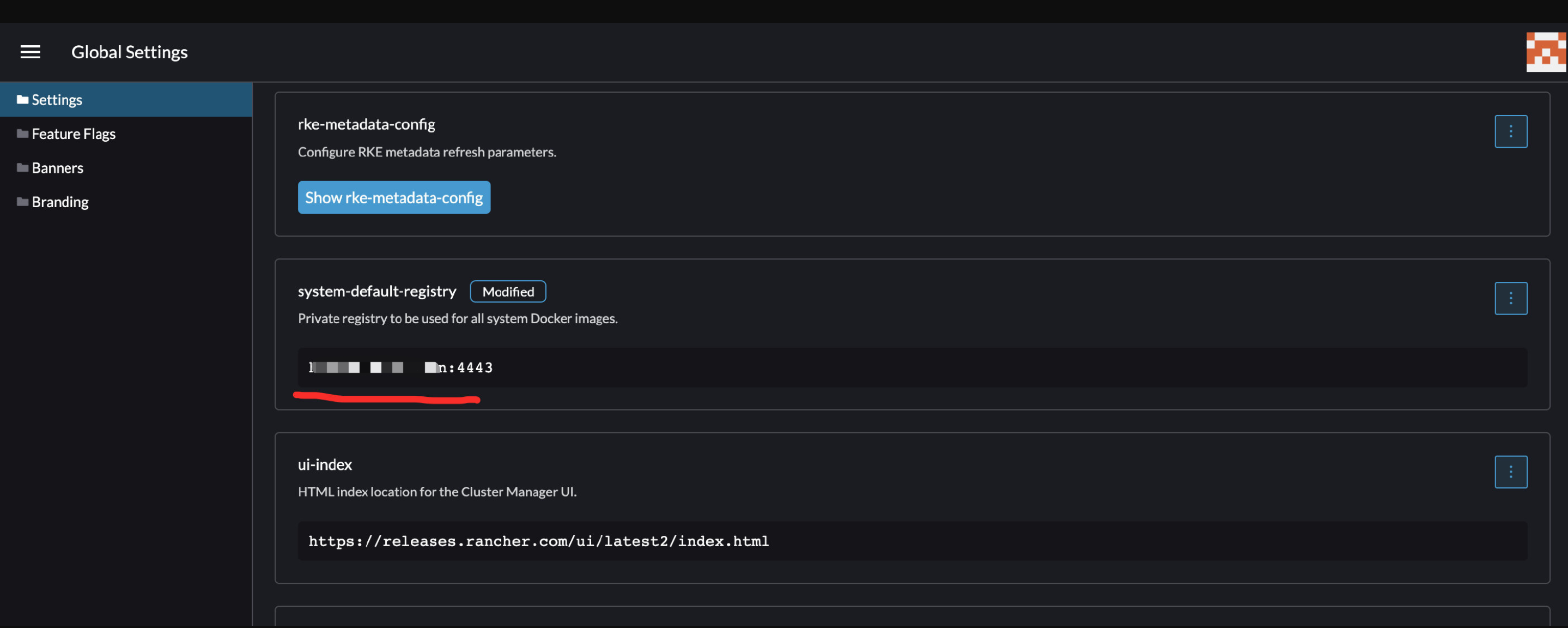

Configuring system-default-registry

High-availability Rancher

Installing k3s

**k3s-images.txt** under https://github.com/k3s-io/k3s/releases/tag/v1.23.6%2Bk3s1 lists the images k3s needs.

Create the cluster with v1.23.6+k3s1

install.sh
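
Here is an air-gapped install sketch for v1.23.6+k3s1. It assumes an amd64 host, with `k3s`, `k3s-airgap-images-amd64.tar` and `install.sh` (from https://get.k3s.io) already downloaded into the current directory. `K3S_EXEC` is a hypothetical knob for whatever server flags your HA layout needs, for example `server --cluster-init` on the first server with embedded etcd. The steps are only printed unless `DRY_RUN=0`.

```bash
# Air-gapped k3s install (sketch).
K3S_EXEC="${K3S_EXEC:-server}"   # hypothetical; e.g. "server --cluster-init"

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then printf '+ %s\n' "$*"; else "$@"; fi
}

install_k3s_airgap() {
  # the binary must be on PATH and executable
  run install -m 0755 ./k3s /usr/local/bin/k3s
  # k3s imports every image tarball in this directory when it starts
  run mkdir -p /var/lib/rancher/k3s/agent/images
  run cp ./k3s-airgap-images-amd64.tar /var/lib/rancher/k3s/agent/images/
  # skip the download step of install.sh and use the binary placed above
  run env INSTALL_K3S_SKIP_DOWNLOAD=true "INSTALL_K3S_EXEC=$K3S_EXEC" sh ./install.sh
}

install_k3s_airgap
```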

Create a kubeconfig; otherwise helm reports an error
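
k3s writes its admin kubeconfig to /etc/rancher/k3s/k3s.yaml, while helm reads `$KUBECONFIG` or ~/.kube/config. The minimal helper below copies the file into place; both paths are parameters so they can be overridden.

```bash
# usage: setup_kubeconfig [SRC] [DEST]
setup_kubeconfig() {
  src="${1:-/etc/rancher/k3s/k3s.yaml}"   # admin kubeconfig written by k3s
  dest="${2:-$HOME/.kube/config}"         # default location read by helm and kubectl
  mkdir -p "$(dirname "$dest")" &&
    cp "$src" "$dest" &&
    chmod 600 "$dest"                     # it holds cluster-admin credentials
}
```

For the current shell only, `export KUBECONFIG=/etc/rancher/k3s/k3s.yaml` has the same effect.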

Making the Harbor CoreDNS entry persistent

Restart CoreDNS so that it rereads the configuration

Installing helm

https://helm.sh/docs/intro/install/

Installing cert-manager

Load the cert-manager images onto every node with docker load; otherwise you have to modify the cert-manager Helm chart.

Download (see reference)

Install
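
Below is a sketch of the offline download, install and verification. It assumes cert-manager v1.7.1, a 1.7 release, which Rancher 2.6.5 expects as noted in the upgrade section. It also assumes the images are already loaded on every node, so the chart needs no image overrides. Commands are printed unless `DRY_RUN=0`.

```bash
# Offline cert-manager install (sketch).
CM_VERSION="${CM_VERSION:-v1.7.1}"   # assumed 1.7.x release

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then printf '+ %s\n' "$*"; else "$@"; fi
}

# Step 1, on a machine with Internet access: fetch the chart and the CRDs
fetch_cert_manager() {
  run helm repo add jetstack https://charts.jetstack.io
  run helm repo update
  run helm fetch jetstack/cert-manager --version "$CM_VERSION"
  run curl -fLO "https://github.com/cert-manager/cert-manager/releases/download/$CM_VERSION/cert-manager.crds.yaml"
}

# Step 2, on the cluster: CRDs first, then the chart, then verify
install_cert_manager() {
  run kubectl create namespace cert-manager
  run kubectl apply -f cert-manager.crds.yaml
  run helm install cert-manager "./cert-manager-$CM_VERSION.tgz" --namespace cert-manager
  run kubectl get pods --namespace cert-manager
}

fetch_cert_manager
install_cert_manager
```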

Verify

Installing Rancher

Download

Installation and options
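
The options explained below can live in a values file instead of a long list of `--set` flags. The following is a sketch under stated assumptions:

- `rancher.example.com`, `harbor.example.com`, the password, the Harbor CA path and the chart file name `rancher-2.6.5.tgz` are all placeholders;
- `rancherImage` and `systemDefaultRegistry` are extra Rancher chart values, used here to pull from the private registry.

Commands are printed unless `DRY_RUN=0`.

```bash
# Rancher Helm values and install commands (sketch; all values are placeholders).
set -e
OUT="${OUT:-./rancher-values.yaml}"
HARBOR_CA="${HARBOR_CA:-/root/harbor/cert/harbor.example.com.crt}"

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then printf '+ %s\n' "$*"; else "$@"; fi
}

# Quoted 'EOF': written literally, without shell expansion
cat > "$OUT" <<'EOF'
hostname: rancher.example.com       # Rancher domain (placeholder)
bootstrapPassword: "ChangeMe-123"   # initial admin password (placeholder)
replicas: 3                         # number of Rancher pods
useBundledSystemChart: true         # use the system-charts packaged with Rancher
additionalTrustedCAs: true          # also trust the CA in secret tls-ca-additional
rancherImage: harbor.example.com/rancher/rancher
systemDefaultRegistry: harbor.example.com
EOF

install_rancher() {
  run kubectl create namespace cattle-system
  # key=path stores the file under the key ca-additional.pem, so no renaming is needed
  run kubectl -n cattle-system create secret generic tls-ca-additional \
    "--from-file=ca-additional.pem=$HARBOR_CA"
  run helm install rancher ./rancher-2.6.5.tgz --namespace cattle-system -f "$OUT"
}

install_rancher
```

With `--from-file=KEY=PATH`, kubectl picks the key name, so the Harbor certificate file can keep its original name.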

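- hostname: the Rancher domain name
- bootstrapPassword: the login password
- replicas: the number of Rancher replicas
- useBundledSystemChart: whether to use the system-charts packaged with Rancher server
- additionalTrustedCAs: trust additional third-party certificates (Harbor); used together with:

  ```bash
  kubectl -n cattle-system create secret generic tls-ca-additional --from-file=ca-additional.pem
  ```

  ca-additional.pem is Harbor's self-signed certificate, which must be renamed to ca-additional.pem.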

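Installing with a self-signed SAN certificate

The certificate must be signed by a CA; otherwise the agent reports errors when the cluster is brought under management.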

Prepare openssl.conf; for certificate generation, see https://www.golinuxcloud.com/openssl-subject-alternative-name/

When you use a self-signed certificate, cert-manager is not needed. Make sure the hostname, the CN and the subjectAltName are identical.
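
Below is a sketch of a private CA plus a Rancher server certificate whose CN and subjectAltName both equal the Helm `hostname`. It uses only `openssl req`/`x509` and explicit extension files instead of an openssl.conf. `rancher.example.com`, the CA name and the `./rancher-certs` directory are hypothetical.

```bash
# Private CA + Rancher server certificate with SAN (sketch, no cert-manager).
set -e
RANCHER_HOST="${RANCHER_HOST:-rancher.example.com}"   # must equal the Helm hostname
DIR="${DIR:-./rancher-certs}"
mkdir -p "$DIR"

# 1. CA key and self-signed CA certificate explicitly marked as a CA (cacerts.pem)
printf '%s\n' 'basicConstraints=critical,CA:TRUE' \
  'keyUsage=critical,keyCertSign,cRLSign' > "$DIR/ca.ext"
openssl req -new -newkey rsa:4096 -nodes -sha256 \
  -keyout "$DIR/ca.key" -out "$DIR/ca.csr" -subj "/CN=rancher-private-ca"
openssl x509 -req -in "$DIR/ca.csr" -signkey "$DIR/ca.key" -sha256 -days 3650 \
  -extfile "$DIR/ca.ext" -out "$DIR/cacerts.pem"

# 2. Server key and CSR; the CN is the Rancher hostname
openssl req -new -newkey rsa:2048 -nodes -sha256 \
  -keyout "$DIR/tls.key" -out "$DIR/tls.csr" -subj "/CN=$RANCHER_HOST"

# 3. Sign with the CA and attach subjectAltName with the same hostname
printf 'subjectAltName=DNS:%s\n' "$RANCHER_HOST" > "$DIR/server.ext"
openssl x509 -req -in "$DIR/tls.csr" -CA "$DIR/cacerts.pem" -CAkey "$DIR/ca.key" \
  -CAcreateserial -sha256 -days 3650 -extfile "$DIR/server.ext" -out "$DIR/tls.crt"

# The server certificate must chain to the CA
openssl verify -CAfile "$DIR/cacerts.pem" "$DIR/tls.crt"
```

The files then reach Rancher through the secret names its chart expects:

- `kubectl -n cattle-system create secret tls tls-rancher-ingress --cert=tls.crt --key=tls.key`
- `kubectl -n cattle-system create secret generic tls-ca --from-file=cacerts.pem=./cacerts.pem`

Pass `--set ingress.tls.source=secret` on the helm command.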

To install with a certificate signed by a private CA (Private CA signed certificate), add `--set privateCA=true` to the command:

Verify

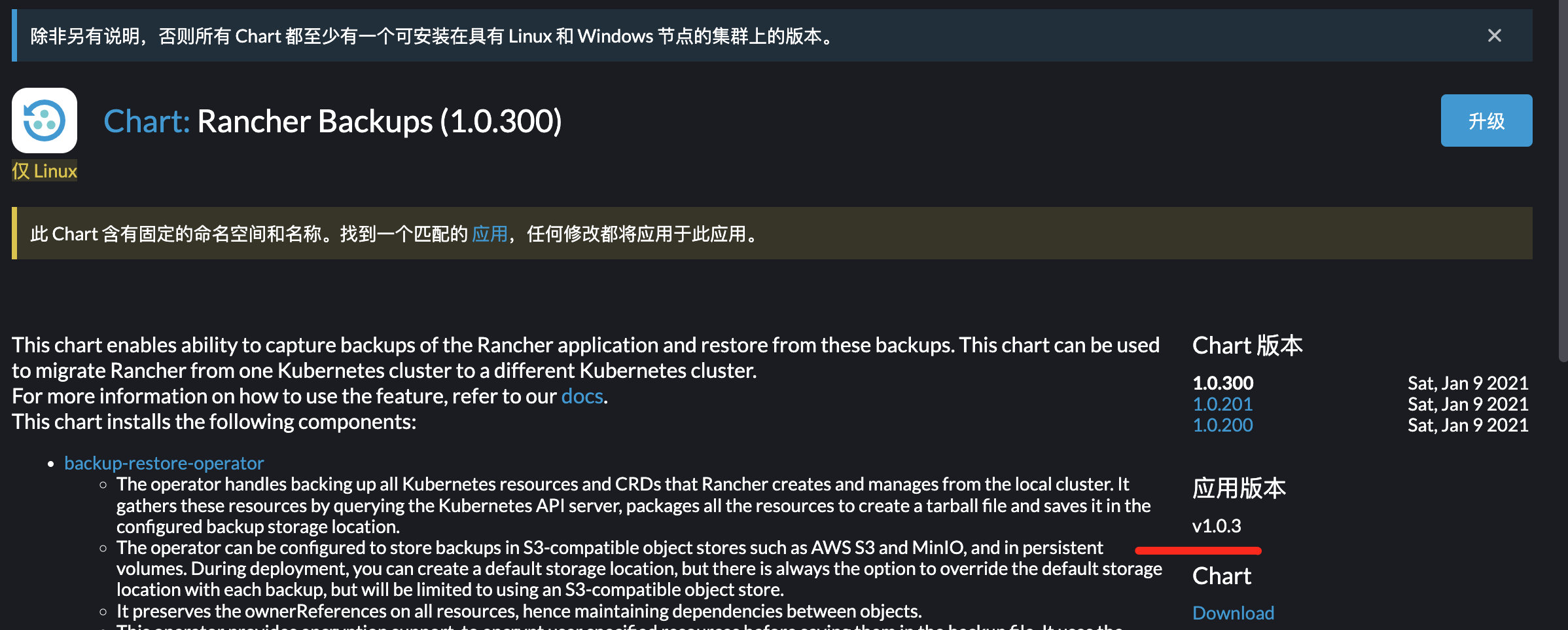

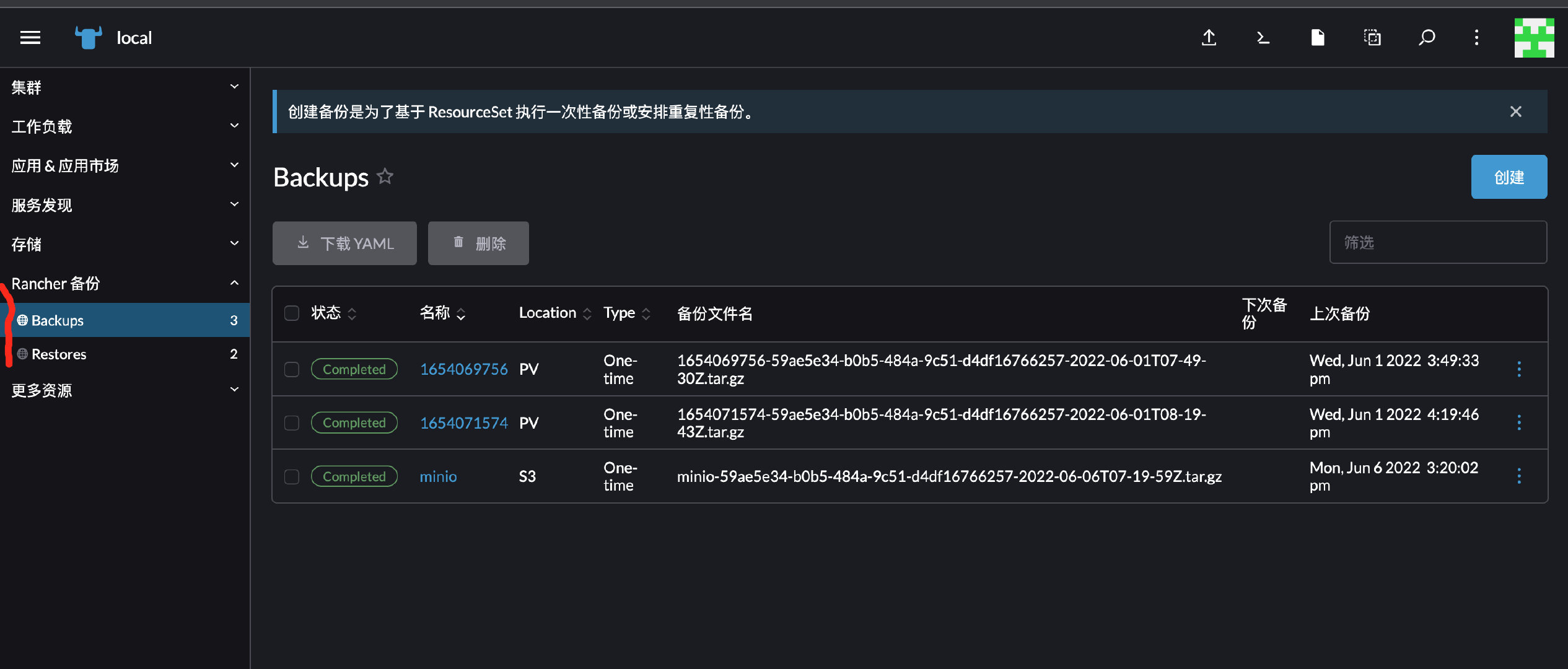

Backup and restore: Rancher Backups (2.1.2)

Images

Install

Install as needed; backups can be stored on a PV or in S3.
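
Below is a sketch of installing the operator at chart version 2.1.2 from the `https://charts.rancher.io` repository into `cattle-resources-system`. For a Docker single-node install, the same charts are installed with `helm_v3` inside the container. The container name `rancher`, the `/tmp` copy location, and `helm_v3` finding the local cluster's kubeconfig there are all assumptions. Commands are printed unless `DRY_RUN=0`.

```bash
# rancher-backup operator install (sketch).
CHART_VERSION="${CHART_VERSION:-2.1.2}"
NS=cattle-resources-system

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then printf '+ %s\n' "$*"; else "$@"; fi
}

# Download the two charts, e.g. on a machine with Internet access
fetch_backup_charts() {
  run helm repo add rancher-charts https://charts.rancher.io
  run helm repo update
  run helm fetch rancher-charts/rancher-backup-crd --version "$CHART_VERSION"
  run helm fetch rancher-charts/rancher-backup --version "$CHART_VERSION"
}

# HA (Kubernetes) install: the CRD chart first, then the operator
install_backup_operator() {
  run helm install rancher-backup-crd "./rancher-backup-crd-$CHART_VERSION.tgz" -n "$NS" --create-namespace
  run helm install rancher-backup "./rancher-backup-$CHART_VERSION.tgz" -n "$NS"
}

# Docker single-node variant: copy the charts in, install with helm_v3 (assumed setup)
install_backup_operator_docker() {
  for c in rancher-backup-crd rancher-backup; do
    run docker cp "./$c-$CHART_VERSION.tgz" rancher:/tmp/
  done
  run docker exec rancher helm_v3 install rancher-backup-crd "/tmp/rancher-backup-crd-$CHART_VERSION.tgz" -n "$NS" --create-namespace
  run docker exec rancher helm_v3 install rancher-backup "/tmp/rancher-backup-$CHART_VERSION.tgz" -n "$NS"
}

fetch_backup_charts
install_backup_operator
```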

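- On a Docker single-node install, the Kubernetes version may be too new for the rancher-backup version. This produces errors such as:

  ```
  no matches for kind "CustomResourceDefinition" in version "apiextensions.k8s.io/v1beta1"
  ```

  In that case, `docker cp` the installation files of a suitable version into the container and install them with `helm_v3`.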

Verify

After a refresh, the new options appear

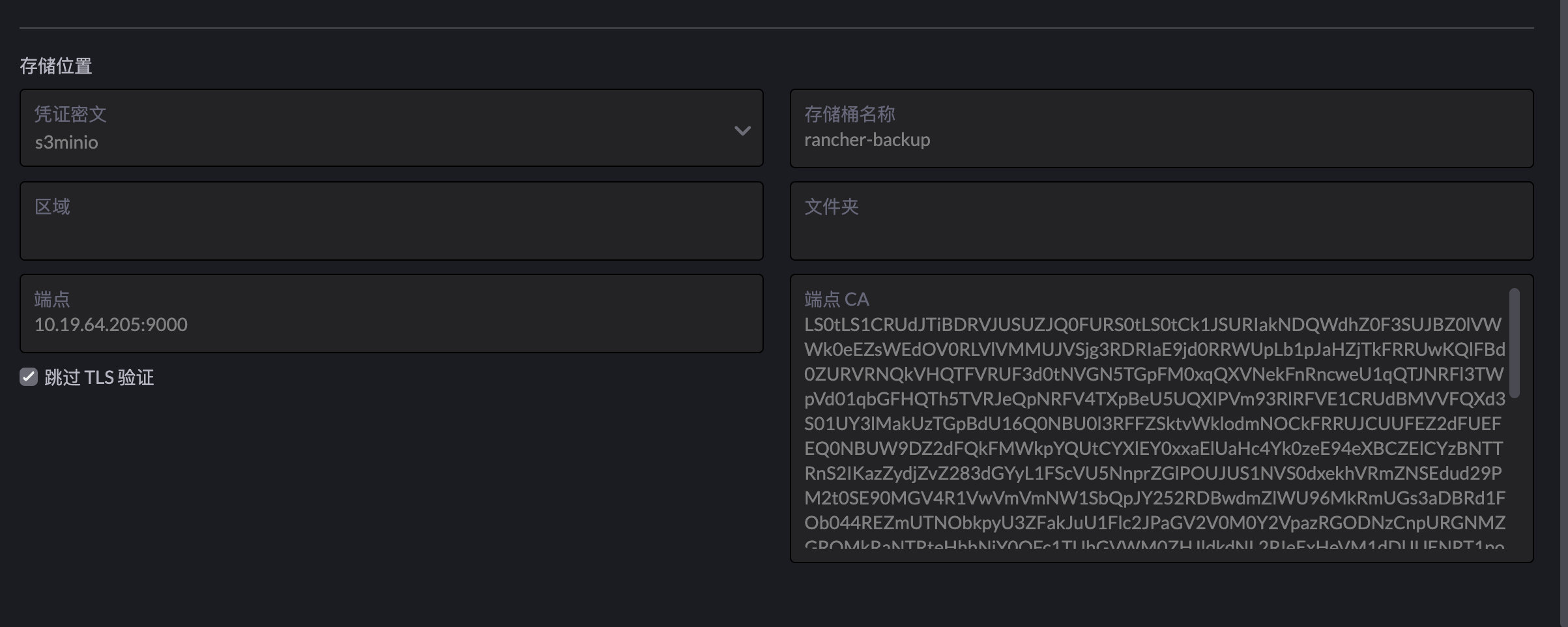

MinIO configuration: HTTPS is required

- In the host directory that Docker mounts at /root/.minio, create a `certs` directory. Rename the key and certificate to `private.key` and `public.crt`. Use a complete SAN certificate chain that includes the Docker container IP (visible with `docker logs`) and the server IP.

  ```bash
  docker run -d -p 19000:9000 -p 15000:5000 --name minio \
    -e "MINIO_ROOT_USER=admin" -e "MINIO_ROOT_PASSWORD=12345678" \
    -v /data/minio/data:/data -v /data/minio/config:/root/.minio \
    minio/minio server --console-address ":5000" /data
  ```

  If TLS is picked up successfully:

  ```bash
  docker restart minio
  docker logs -f minio
  ```

  ```
  WARNING: Detected Linux kernel version older than 4.0.0 release, there are some known potential performance problems with this kernel version. MinIO recommends a minimum of 4.x.x linux kernel version for best performance
  API: https://172.17.0.3:9000  https://127.0.0.1:9000
  Console: https://172.17.0.3:5000 https://127.0.0.1:5000

  Documentation: https://docs.min.io

  Finished loading IAM sub-system (took 0.0s of 0.0s to load data).
  ```

- Credentials

  Create a Secret:

  ```yaml
  apiVersion: v1
  kind: Secret
  metadata:
    name: creds
  type: Opaque
  data:
    accessKey: <Enter your base64-encoded access key>
    secretKey: <Enter your base64-encoded secret key>
  ```

- Filling in the configuration

  The endpoint CA must be the base64-encoded content of the certificate, not the certificate in plain text.
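
Both values can be produced from the shell. The sketch below writes the `creds` Secret from the MinIO root user and password used in the `docker run` above. It also defines a helper that prints the single-line base64 form of a certificate for the endpoint CA field. `printf '%s'` avoids encoding a trailing newline, which `echo` would add. The certificate path in the usage line assumes the /data/minio/config mount shown above.

```bash
# MinIO credentials Secret and endpoint CA encoding (sketch).
set -e
ACCESS_KEY="${ACCESS_KEY:-admin}"      # MINIO_ROOT_USER from the docker run above
SECRET_KEY="${SECRET_KEY:-12345678}"   # MINIO_ROOT_PASSWORD from the docker run above
OUT="${OUT:-./minio-creds.yaml}"

# base64 of a string, without a trailing newline, on one line
b64() { printf '%s' "$1" | base64 | tr -d '\n'; }

# base64 of a whole file (e.g. public.crt) on one line, for the endpoint CA field
b64_file() { base64 < "$1" | tr -d '\n'; }

cat > "$OUT" <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: creds
type: Opaque
data:
  accessKey: $(b64 "$ACCESS_KEY")
  secretKey: $(b64 "$SECRET_KEY")
EOF
echo "wrote $OUT"
```

`b64_file /data/minio/config/certs/public.crt` then prints the value to paste as the endpoint CA. Alternatively, `kubectl create secret generic creds --from-literal=accessKey=... --from-literal=secretKey=...` encodes the credentials for you.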

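Migrating Rancher

Suppose cluster A is being migrated to cluster B. Back up A first. Keep the Kubernetes versions of A and B the same if at all possible, because apiVersions differ between Kubernetes versions.

Back up first

Whether Rancher was installed with Docker or on a cluster, take the backup with the rancher backup operator, not with the Docker backup procedure. Otherwise, restoring the backup file with the operator fails (see Rancher backup panics when it encounters an invalid tarball).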
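
The sketch below builds a Backup custom resource that writes to the MinIO S3 endpoint. `rancher-resource-set` is the default resource set installed with the operator. Everything else must be adapted to your setup:

- the bucket `rancher-backups`;
- the MinIO address `192.168.0.20:19000`, where host port 19000 comes from the MinIO `docker run` above;
- the Secret `creds` in namespace `default`.

```bash
# usage: make_backup_cr CA_FILE [OUT]   (sketch; endpoint and bucket are hypothetical)
make_backup_cr() {
  ca_file="$1"
  out="${2:-./backup.yaml}"
  endpoint="${MINIO_ENDPOINT:-192.168.0.20:19000}"
  bucket="${BUCKET:-rancher-backups}"
  [ -r "$ca_file" ] || { echo "cannot read $ca_file" >&2; return 1; }
  ca_b64=$(base64 < "$ca_file" | tr -d '\n')   # endpointCA must be base64-encoded
  cat > "$out" <<EOF
apiVersion: resources.cattle.io/v1
kind: Backup
metadata:
  name: migration-backup
spec:
  resourceSetName: rancher-resource-set
  storageLocation:
    s3:
      credentialSecretName: creds
      credentialSecretNamespace: default
      bucketName: $bucket
      endpoint: $endpoint
      endpointCA: $ca_b64
EOF
}
```

A run could look like `make_backup_cr /data/minio/config/certs/public.crt && kubectl apply -f backup.yaml`, followed by `kubectl get backups.resources.cattle.io` to watch the status.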

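For everything else, refer to the Backup and restore: Rancher Backups (2.1.2) section.

Possible MinIO problems

Use `date` to check that MinIO and the cluster use the same time zone. Otherwise, downloading the backup from MinIO fails with `The difference between the request time and the server's time is too large., requeuing`.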

Migration procedure

Download the rancher-backup packages

Install on B; before installing, you can clean up the nodes with this script

values.yaml

Verify

On B, create the same MinIO secret as on A

Create the Restore custom resource. In it, prune must be set to false, and the endpointCA certificate content must be base64-encoded.
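
The sketch below builds the Restore resource for the migration, with `prune: false` and a base64-encoded `endpointCA`. The backup file name must be the exact `.tar.gz` name that the backup of A created in the bucket. The bucket `rancher-backups`, the endpoint `192.168.0.20:19000` and the Secret `creds` in `default` are hypothetical and must match what A used.

```bash
# usage: make_restore_cr BACKUP_FILENAME CA_FILE [OUT]   (sketch)
make_restore_cr() {
  backup_file="$1"
  ca_file="$2"
  out="${3:-./restore.yaml}"
  [ -r "$ca_file" ] || { echo "cannot read $ca_file" >&2; return 1; }
  ca_b64=$(base64 < "$ca_file" | tr -d '\n')   # endpointCA must be base64-encoded
  # prune: false is required when restoring onto a new cluster
  cat > "$out" <<EOF
apiVersion: resources.cattle.io/v1
kind: Restore
metadata:
  name: restore-migration
spec:
  backupFilename: $backup_file
  prune: false
  storageLocation:
    s3:
      credentialSecretName: creds
      credentialSecretNamespace: default
      bucketName: ${BUCKET:-rancher-backups}
      endpoint: ${MINIO_ENDPOINT:-192.168.0.20:19000}
      endpointCA: $ca_b64
EOF
}
```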

Verify

参考上面安装高可用rancher2.6.5,rancher安装命令hostname和A安装的保持一致

迁移完成后的配置修改

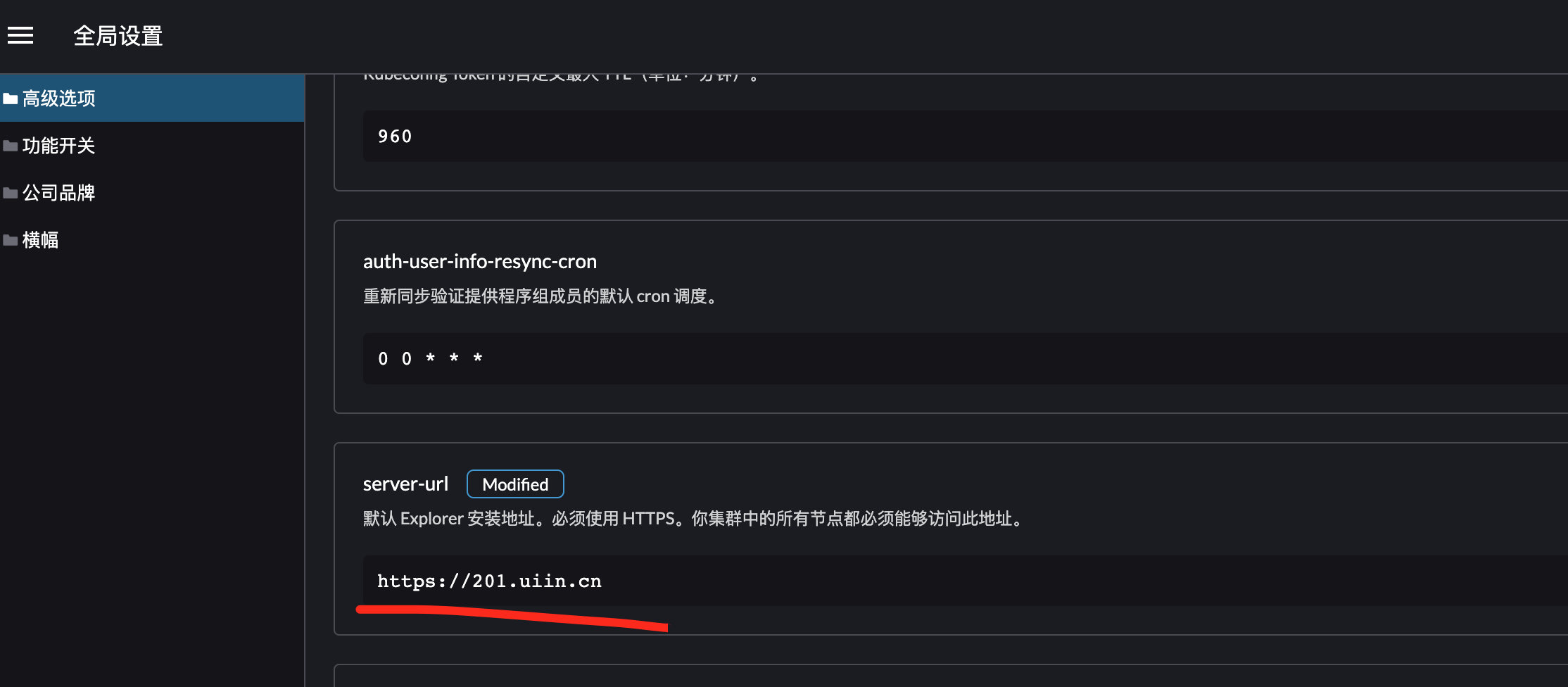

检查server-url是否符合实际情况,和高可用安装时候的hostname一致

针对从docker安装迁移到集群高可用安装的情况,主要是agent的连接从IP变成了域名,所以还需要配置好下游集群的agent

-

获取kubeconfig,参考

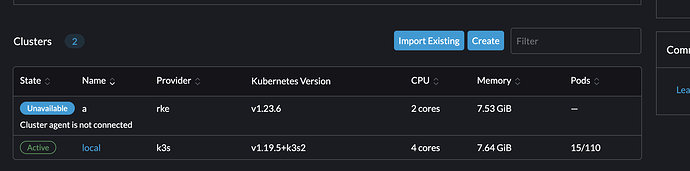

对于导入集群不需要考虑,创建的集群分两种情况

-

有从UI界面获取过kubeconfig,可以通过切换context

1

2kubectl config get-contexts kubectl config use-context [context-name]Example:

1

2

3CURRENT NAME CLUSTER AUTHINFO NAMESPACE * my-cluster my-cluster user-46tmn my-cluster-controlplane-1 my-cluster-controlplane-1 user-46tmn在本例中,当您使用

kubectl第一个上下文时my-cluster,您将通过 Rancher 服务器进行身份验证。使用第二个上下文,

my-cluster-controlplane-1您将使用授权的集群端点进行身份验证,直接与下游 RKE 集群通信。 -

已经对开连接了,就下载不了,可以从下游集群具有

controlplane的节点上生成kubeconfig。参考https://gist.github.com/superseb/b14ed3b5535f621ad3d2aa6a4cd6443b

1

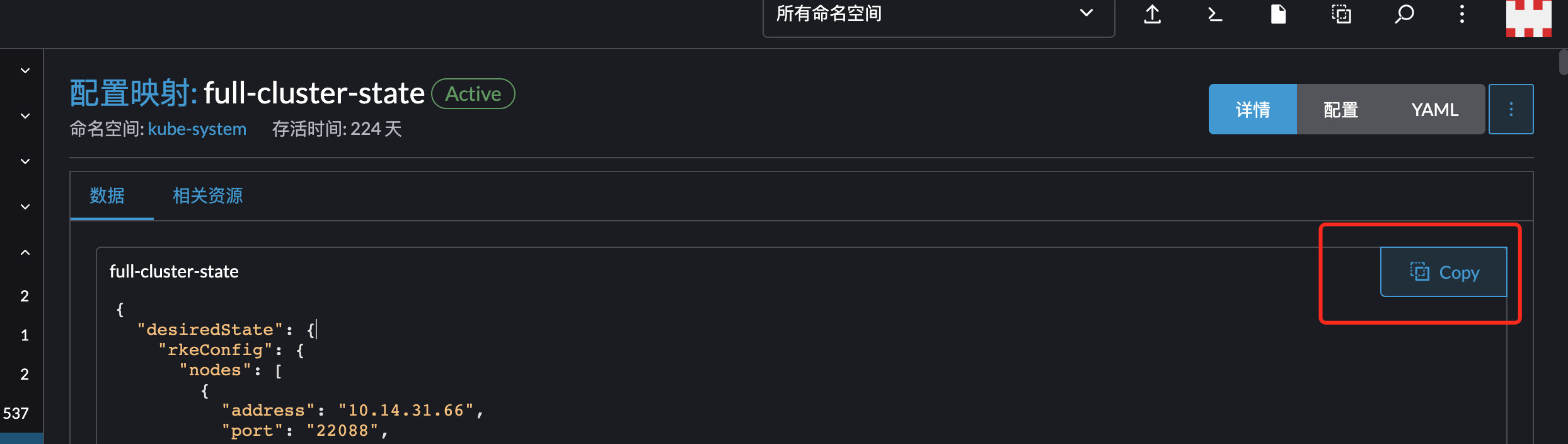

2docker run --rm --net=host -v $(docker inspect kubelet --format '')/ssl:/etc/kubernetes/ssl:ro --entrypoint bash $(docker inspect $(docker images -q --filter=label=io.cattle.agent=true) --format='' | tail -1) -c 'kubectl --kubeconfig /etc/kubernetes/ssl/kubecfg-kube-node.yaml get configmap -n kube-system full-cluster-state -o json | jq -r .data.\"full-cluster-state\" | jq -r .currentState.certificatesBundle.\"kube-admin\".config | sed -e "/^[[:space:]]*server:/ s_:.*_: \"https://127.0.0.1:6443\"_"' > kubeconfig_admin.yaml需要安装jq,比较麻烦。可以把方法拆分

1

2

3

4

5docker exec -it kube-apiserver bash export KUBECONFIG=/etc/kubernetes/ssl/kubecfg-kube-node.yaml kubectl get configmap -n kube-system full-cluster-state -o json > full-cluster-state.json拿到json后,在执行

1

2cat full-cluster-state.json|jq -r .data.\"full-cluster-state\" | jq -r .currentState.certificatesBundle.\"kube-admin\".config | sed -e "/^[[:space:]]*server:/ s_:.*_: \"https://127.0.0.1:6443\"_" > kubeconfig_admin.yaml

-

-

- Modify CoreDNS

  ```bash
  kubectl edit cm coredns -n kube-system
  ```

  Add the hosts entry:

  ```yaml
  data:
    Corefile: |
      .:53 {
          errors
          health {
            lameduck 5s
          }
          hosts {
            xxxx 201.xxxx.cn
            fallthrough
          }
          ready
          kubernetes cluster.local in-addr.arpa ip6.arpa {
            pods insecure
            fallthrough in-addr.arpa ip6.arpa
          }
          prometheus :9153
          forward . "/etc/resolv.conf"
          cache 30
          loop
          reload
          loadbalance
      } # STUBDOMAINS - Rancher specific change
  kind: ConfigMap
  ```

  Recreate the CoreDNS pods so that the hosts entry takes effect:

  ```bash
  kubectl rollout restart deployment coredns -n kube-system
  ```

- Modify the agent configuration

  ```bash
  kubectl edit deploy cattle-cluster-agent -n cattle-system
  ```

  Change CATTLE_SERVER to the new domain, and note the name of the mounted secret:

  ```yaml
  containers:
  - env:
    ...
    - name: CATTLE_IS_RKE
      value: "true"
    - name: CATTLE_SERVER
      value: https://xxx.xxx.cn   # change this
    .....
    image: rancher/rancher-agent:v2.6.5
  .....
  volumes:
  - name: cattle-credentials
    secret:
      defaultMode: 320
      secretName: cattle-credentials-cdcb52a   # copy this name for the next command
  ```

  ```bash
  kubectl edit secret -n cattle-system cattle-credentials-cdcb52a
  ```

  Change url to the base64 encoding of the CATTLE_SERVER value:

  ```yaml
  apiVersion: v1
  data:
    namespace: xxxx
    token: xxx
    url: aHR0cHM6Ly8yMDEudWlpbi5jbg==   # change this
  kind: Secret
  .....
  type: Opaque
  ```

  Finally, recreate the cattle-cluster-agent pods:

  ```bash
  kubectl rollout restart deployment cattle-cluster-agent -n cattle-system
  ```
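
The same edits can be scripted with `kubectl set env` and `kubectl patch`, which avoids hand-encoding the URL. The sketch assumes the new Rancher URL is the placeholder `https://rancher.example.com`. It also assumes the secret name read from the deployment is supplied in place of the placeholder `cattle-credentials-xxxxxxx`. Commands are printed unless `DRY_RUN=0`.

```bash
# Point cattle-cluster-agent at a new Rancher URL (sketch; values are placeholders).
NEW_SERVER="${NEW_SERVER:-https://rancher.example.com}"
SECRET_NAME="${SECRET_NAME:-cattle-credentials-xxxxxxx}"

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then printf '+ %s\n' "$*"; else "$@"; fi
}

# base64 without a trailing newline, as Secret data expects
b64() { printf '%s' "$1" | base64 | tr -d '\n'; }

update_agent() {
  url_b64=$(b64 "$NEW_SERVER")
  run kubectl -n cattle-system set env deployment/cattle-cluster-agent "CATTLE_SERVER=$NEW_SERVER"
  run kubectl -n cattle-system patch secret "$SECRET_NAME" --type merge \
    -p "{\"data\":{\"url\":\"$url_b64\"}}"
  run kubectl -n cattle-system rollout restart deployment cattle-cluster-agent
}

update_agent
```

The secret name can be read without opening an editor:

```bash
kubectl -n cattle-system get deploy cattle-cluster-agent -o jsonpath='{.spec.template.spec.volumes[?(@.name=="cattle-credentials")].secret.secretName}'
```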

Upgrading Rancher 2.5.5 to 2.6.5

Single node

This mainly relies on `--volumes-from` to share data between containers.
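
Below is a sketch of the `--volumes-from` upgrade and rollback procedure, following Rancher's single-node upgrade documentation. The upgrade stops the old container and creates a data container from its volumes. It then archives /var/lib/rancher and starts the new version on the same volumes. The rollback restores the archive and starts the old image again. The container name `rancher` is an assumption. Any `-e`/`-v` options the original container used (certificates, registry settings) must be repeated on the new `docker run`. Commands are printed unless `DRY_RUN=0`.

```bash
# Single-node upgrade / rollback via --volumes-from (sketch).
OLD="${OLD:-v2.5.5}"
NEW="${NEW:-v2.6.5}"
NAME="${NAME:-rancher}"   # hypothetical name of the running container
BACKUP="rancher-data-backup-$OLD-$(date +%Y%m%d).tar.gz"

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then printf '+ %s\n' "$*"; else "$@"; fi
}

upgrade() {
  run docker stop "$NAME"
  # data container that references the old container's volumes
  run docker create --volumes-from "$NAME" --name rancher-data "rancher/rancher:$OLD"
  # archive /var/lib/rancher for a possible rollback
  run docker run --rm --volumes-from rancher-data -v "$PWD:/backup" busybox \
    tar zcvf "/backup/$BACKUP" /var/lib/rancher
  # new version on the same data (repeat the original -e/-v options here)
  run docker run -d --volumes-from rancher-data --restart=unless-stopped \
    -p 80:80 -p 443:443 --privileged "rancher/rancher:$NEW"
}

# usage: rollback BACKUP_TARBALL   (stop the new container first)
rollback() {
  run docker run --rm --volumes-from rancher-data -v "$PWD:/backup" busybox \
    sh -c "rm -rf /var/lib/rancher/* && tar zxvf /backup/$1 -C /"
  run docker run -d --volumes-from rancher-data --restart=unless-stopped \
    -p 80:80 -p 443:443 --privileged "rancher/rancher:$OLD"
}

upgrade
```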

Rollback

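High availability

Rancher depends on the cert-manager CRDs. Before upgrading Rancher, find the cert-manager version that matches the target Rancher version and upgrade cert-manager as well.

For example, Rancher 2.6.5 depends on cert-manager 1.7; see Install/Upgrade Rancher on a Kubernetes Cluster.

Backup

For the backup, see the sections above or https://docs.rancher.cn/docs/rancher2.5/backups/back-up-rancher/_index/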

Upgrading cert-manager

Upgrading Rancher
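
Below is a sketch of the order described in this subsection. cert-manager is upgraded first (new CRDs, then the chart), then Rancher, reusing the values of the current release. Two assumptions apply: the chart file names and cert-manager v1.7.1 are placeholders, and the CRDs were applied separately at install time, so `helm upgrade` does not update them. Commands are printed unless `DRY_RUN=0`.

```bash
# HA upgrade: cert-manager first, then Rancher (sketch).
CM_VERSION="${CM_VERSION:-v1.7.1}"
RANCHER_CHART="${RANCHER_CHART:-./rancher-2.6.5.tgz}"

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then printf '+ %s\n' "$*"; else "$@"; fi
}

upgrade_cert_manager() {
  # CRDs assumed to be managed outside the chart, so apply the new ones first
  run kubectl apply -f cert-manager.crds.yaml
  run helm upgrade cert-manager "./cert-manager-$CM_VERSION.tgz" --namespace cert-manager
  run kubectl get pods --namespace cert-manager
}

upgrade_rancher() {
  # keep hostname, replicas, CA settings, ... from the current release
  run sh -c "helm get values rancher -n cattle-system -o yaml > rancher-current-values.yaml"
  run helm upgrade rancher "$RANCHER_CHART" --namespace cattle-system -f rancher-current-values.yaml
  run kubectl -n cattle-system rollout status deploy/rancher
}

upgrade_cert_manager
upgrade_rancher
```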

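Re-managing a custom cluster as an imported cluster

According to the documentation, deleting a custom cluster directly in the UI also deletes its Kubernetes components, so this cannot be done from the UI.

Scenario: a custom downstream cluster of Rancher A is to be moved to Rancher B (A and B may be the same Rancher).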

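- On B, first create an imported cluster to get the deployment command and the corresponding YAML.

- Running `kubectl delete -f xxx.yaml` effectively removes the connection to A. After the deletion, kubectl stops working:

  ```
  kubectl get pods -A
  error: You must be logged in to the server (Unauthorized)
  ```

- Get a kubeconfig again; see the part above about getting the kubeconfig from the kube-apiserver container.

- With the new kubeconfig, run B's import command.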

Managing the cluster with rke again

Create cluster.rkestate from the content of the full-cluster-state key; rke uses it to operate on the cluster.
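
The `full-cluster-state` value is itself a JSON document, so extracting it with `jq -r` yields cluster.rkestate directly. The sketch assumes jq is installed. It also assumes the ConfigMap was saved as full-cluster-state.json with the `kubectl get configmap -n kube-system full-cluster-state -o json` command shown earlier.

```bash
# usage: make_rkestate [INPUT_JSON] [OUTPUT]   (sketch)
make_rkestate() {
  in="${1:-full-cluster-state.json}"
  out="${2:-cluster.rkestate}"
  # .data."full-cluster-state" is a JSON string; -r prints it unquoted
  jq -r '.data."full-cluster-state"' "$in" > "$out" || return 1
  # sanity check: the result must be JSON that contains currentState
  jq -e '.currentState' "$out" > /dev/null
}
```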

Write cluster.yml based on the cluster information; a complete example:

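Try to find an rke version that supports this kubernetesVersion. It is enough for the leading part, such as v1.19.16-rancher1, to match.

cluster.rkestate and cluster.yml must be in the same directory. Once both files are ready, you can operate on the cluster with rke. Also make sure cluster.rkestate accurately reflects the current state of the cluster nodes.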

Problems encountered

- Error:

  ```
  Can't retrieve Docker Info: error during connect: Get "http://%2Fvar%2Frun%2Fdocker.sock/v1.24/info": Unable to access the Docker socket (/var/run/docker.sock). Please check if the configured user can execute `docker ps` on the node, and if the SSH server version is at least version 6.7 or higher. If you are using RedHat/CentOS, you can't use the user `root`. Please refer to the documentation for more instructions. Error: ssh: rejected: administratively prohibited (open failed)
  ```

  Add a user:

  ```bash
  # On all machines: add a rancher user to the docker group (RKE security restriction)
  useradd rancher -G docker
  echo "123456" | passwd --stdin rancher
  ssh-keygen
  ssh-copy-id -i ~/.ssh/id_rsa.pub rancher@192.168.0.22
  ```

  Then adjust ssh_key_path and user in cluster.yml for each node:

  ```yaml
  nodes:
  - address: xxxx
    user: rancher
    role: ["controlplane", "etcd", "worker"]
    ssh_key_path: /home/rancher/.ssh/id_rsa
    port: 22
  ```

Preparing open ports

k3s

Inbound rules

| Protocol | Port | Source | Description |
|---|---|---|---|
| TCP | 80 | Load balancer/proxy that does external SSL termination | Rancher UI/API when external SSL termination is used |
| TCP | 443 | server nodes; agent nodes; hosted/registered Kubernetes; any source that needs to be able to use the Rancher UI or API | Rancher agent, Rancher UI/API, kubectl |
| TCP | 6443 | K3s server nodes | Kubernetes API |
| UDP | 8472 | K3s server and agent nodes | Required only for Flannel VXLAN. |
| TCP | 10250 | K3s server and agent nodes | kubelet |

Outbound rules

| Protocol | Port | Destination | Description |
|---|---|---|---|
| TCP | 22 | Any node IP from a node created using Node Driver | SSH provisioning of nodes using Node Driver |
| TCP | 443 | git.rancher.io | Rancher catalog |
| TCP | 2376 | Any node IP from a node created using Node driver | Docker daemon TLS port used by Docker Machine |
| TCP | 6443 | Hosted/Imported Kubernetes API | Kubernetes API server |

RKE

Node-to-node traffic rules

| Protocol | Port | Description |
|---|---|---|
| TCP | 443 | Rancher agents |
| TCP | 2379 | etcd client requests |
| TCP | 2380 | etcd peer communication |
| TCP | 6443 | Kubernetes apiserver |
| TCP | 8443 | Nginx Ingress's Validating Webhook |
| UDP | 8472 | Canal/Flannel VXLAN overlay networking |
| TCP | 9099 | Canal/Flannel livenessProbe/readinessProbe |
| TCP | 10250 | Metrics server communication with all nodes |
| TCP | 10254 | Ingress controller livenessProbe/readinessProbe |

Inbound rules

| Protocol | Port | Source | Description |
|---|---|---|---|
| TCP | 22 | RKE CLI | SSH provisioning of node by RKE |
| TCP | 80 | Load Balancer/Reverse Proxy | HTTP traffic to Rancher UI/API |
| TCP | 443 | Load Balancer/Reverse Proxy; IPs of all cluster nodes and other API/UI clients | HTTPS traffic to Rancher UI/API |
| TCP | 6443 | Kubernetes API clients | HTTPS traffic to Kubernetes API |

Outbound rules

| Protocol | Port | Destination | Description |
|---|---|---|---|
| TCP | 443 | 35.160.43.145, 35.167.242.46, 52.33.59.17 | Rancher catalog (git.rancher.io) |
| TCP | 22 | Any node created using a node driver | SSH provisioning of node by node driver |
| TCP | 2376 | Any node created using a node driver | Docker daemon TLS port used by node driver |
| TCP | 6443 | Hosted/Imported Kubernetes API | Kubernetes API server |
| TCP | Provider dependent | Port of the Kubernetes API endpoint in hosted cluster | Kubernetes API |

RKE2

Inbound rules

| Protocol | Port | Source | Description |
|---|---|---|---|
| TCP | 9345 | RKE2 agent nodes | Kubernetes API |
| TCP | 6443 | RKE2 agent nodes | Kubernetes API |
| UDP | 8472 | RKE2 server and agent nodes | Required only for Flannel VXLAN |
| TCP | 10250 | RKE2 server and agent nodes | kubelet |
| TCP | 2379 | RKE2 server nodes | etcd client port |
| TCP | 2380 | RKE2 server nodes | etcd peer port |
| TCP | 30000-32767 | RKE2 server and agent nodes | NodePort port range |
| TCP | 5473 | Calico-node pod connecting to typha pod | Required when deploying with Calico |
| HTTP | 8080 | Load balancer/proxy that does external SSL termination | Rancher UI/API when external SSL termination is used |
| HTTPS | 8443 | hosted/registered Kubernetes; any source that needs to be able to use the Rancher UI or API | Rancher agent, Rancher UI/API, kubectl. Not needed if you have LB doing TLS termination. |

All outbound traffic is usually allowed.

Summary

Common ports

Network plugin ports (Canal is the default):

- Weave plugin: TCP 6783, UDP 6783-6784
- Calico plugin: TCP 179, 5473; UDP 4789
- Cilium plugin: UDP 8472 (VXLAN), TCP 4240

Port check script
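
Below is a minimal TCP reachability sketch. It assumes bash (for its /dev/tcp redirection) and coreutils `timeout` are available on the machine running it. The default port list comes from the RKE tables above. UDP 8472 cannot be verified this way, because UDP has no handshake. A port also reports as open only when something is listening on it, so before installation you need a temporary listener on the target.

```bash
# TCP port reachability check (sketch). usage: sh check-ports.sh NODE_IP...
# TCP ports from the RKE node-to-node and inbound tables above
PORTS="${PORTS:-22 80 443 2379 2380 6443 8443 9099 10250 10254}"

# Return 0 if HOST:PORT accepts a TCP connection within 3 seconds.
check_tcp() {
  timeout 3 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# Print open/CLOSED for every port in $PORTS on one node.
check_node() {
  for p in $PORTS; do
    if check_tcp "$1" "$p"; then
      echo "$1:$p open"
    else
      echo "$1:$p CLOSED"
    fi
  done
}

for node in "$@"; do
  check_node "$node"
done
```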

Port tests

Test command

-

tcp/6443

-

tcp/2380,2379

1

2kubectl get pods -A Error from server: etcdserver: request timed out

-

udp/8472

pod 正常,dns解析 访问pod ip都有问题

1

2

3

4

5

6

7/ # nslookup default-http-backend ;; connection timed out; no servers could be reached / # wget 10.43.85.213 Connecting to 10.43.85.213 (10.43.85.213:80) wget: can't connect to remote host (10.43.85.213): Operation timed out -

tcp/10254 nginx-ingress-controller 起不来

1

2

3

4

5kubectl get pods -n ingress-nginx NAME READY STATUS RESTARTS AGE default-http-backend-6db58c58cd-bfk2h 1/1 Running 0 4h27m nginx-ingress-controller-lrx64 1/1 Running 0 4h13m nginx-ingress-controller-ztww2 0/1 CrashLoopBackOff 6 4h27m -

tcp/9099 canal 运行有问题

1

2

3

4

5kubectl get pods -n kube-system NAME READY STATUS RESTARTS AGE calico-kube-controllers-5898bd695c-cgl6f 1/1 Running 0 4h35m canal-2mwgg 1/2 Running 1 4h35m canal-ffn6k 2/2 Running 1 4h21m -

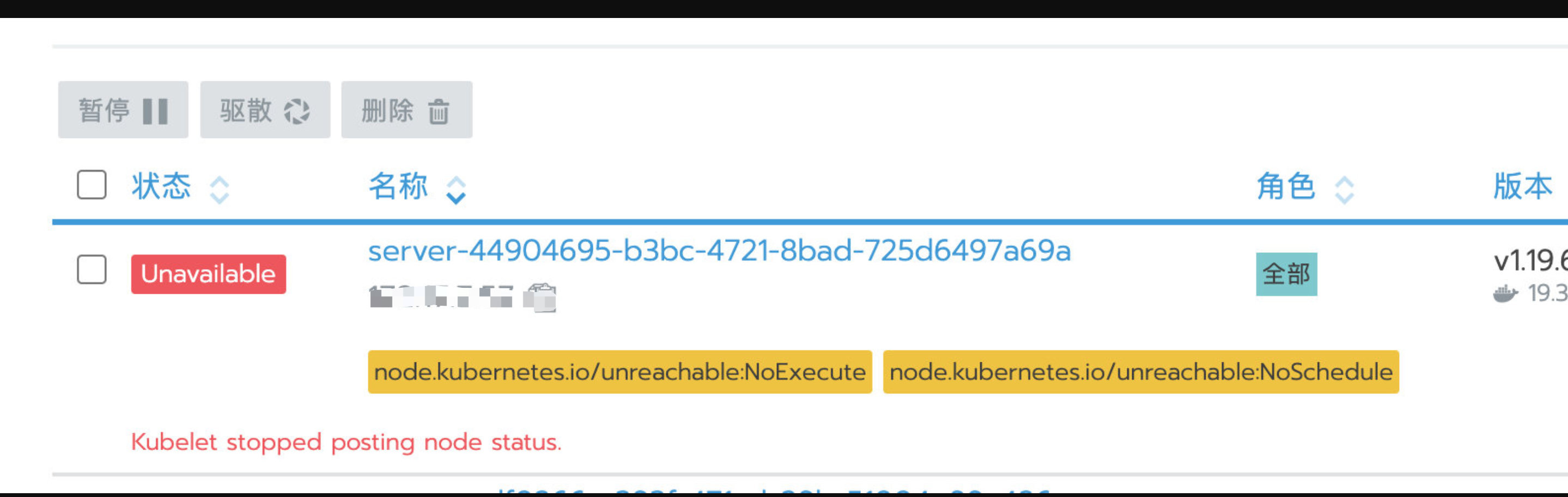

tcp/10250 Metrics server communication with all nodes

节点CPU 内存会变成N/A rancher看log会看不了

1

2kubectl logs -f --tail 200 xxx-5768967f5c-wmc2k -n xxx Error from server: Get "https://xxx:10250/containerLogs/xxx/xxx-5768967f5c-wmc2k/xxx?follow=true&tailLines=200": dial tcp xxxxx:10250: connect: no route to host

Rancher error handling

Failed to pull image “xxx”: rpc error: code = Unknown desc = Error response from daemon: pull access denied for xxx, repository does not exist or may require ‘docker login’

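A pod could not pull an image for no obvious reason, although the host could pull it. My guess was that something was wrong inside the cluster, since the cluster manages containers through kubelet.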

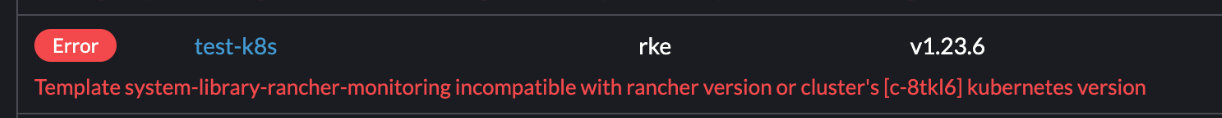

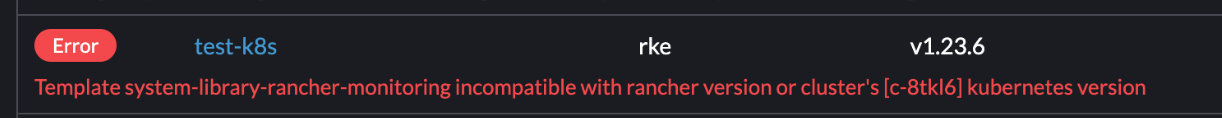

template system-library-rancher-monitoring does not match the kubeVersion

template system-library-rancher-monitoring incompatible with rancher version or cluster’s [xxx] kubernetes version

See https://github.com/rancher/rancher/issues/37039#issuecomment-1176320933

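- Migration related: check whether v1 monitoring is enabled; newer Rancher versions use v2.

  ```yaml
  # check these settings in the cluster configuration
  enable_cluster_alerting: false
  enable_cluster_monitoring: false
  ```

  Alternatively, use this script to check whether a migration is pending (add the `--insecure` parameter when using custom certificates). If you get

  ```
  The Monitoring V1 operator does not appear to exist in cluster *******. Migration to Monitoring V2 should be possible.
  ```

  then there should be no problem.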

- Check the content of the system-library-rancher-monitoring CR:

  ```bash
  kubectl edit catalogtemplates system-library-rancher-monitoring
  ```

  Modify the first entry of versions:

  ```yaml
  spec:
    catalogId: system-library
    defaultVersion: 0.3.2
    description: Provides monitoring for Kubernetes which is maintained by Rancher 2.
    displayName: rancher-monitoring
    folderName: rancher-monitoring
    icon: https://coreos.com/sites/default/files/inline-images/Overview-prometheus_0.png
    projectURL: https://github.com/coreos/prometheus-operator
    versions:
    - digest: 08fbaee28d5a0efb79db02d9372629e2
      externalId: catalog://?catalog=system-library&template=rancher-monitoring&version=0.3.2
      kubeVersion: < 1.22.0-0   # change this to '>=1.21.0-0'
      rancherMinVersion: 2.6.1-alpha1
      version: 0.3.2
      versionDir: charts/rancher-monitoring/v0.3.2
      versionName: rancher-monitoring
  ```

Template system-library-rancher-monitoring incompatible with rancher version or cluster’s [local] kubernetes version

In the end I fixed it by editing the error in the conditions of the clusters.management.cattle.io CR, because the error persisted even after I uninstalled system-monitor.

Example

Get the relevant parameters from system-library-rancher-monitoring

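After reading the source code and doing some more debugging, I found that this error should not actually be able to occur. Editing the cluster CR directly therefore does not make it come back.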

could not find tenant ID context deadline exceeded

Creating an Azure cloud credential failed. The credential itself was valid and usable, but the POST /meta/aksCheckCredentials request returned this error.

Following the error message, I found and read the source code: goCtx controls the request timeout, and the error is also time-related.

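What the function does

- It calls the Azure SDK to access the Azure HTTP API and validate the credential.

Lines of investigation

- A real network timeout: by debugging, I roughly worked out the URL and parameters being requested. Sending the request manually worked, and the response was fast.

- Debugging then led me to suspect a time-zone problem.

The system default was UTC. Setting the time zone to the local one and rebooting the machine solved the problem; merely restarting the Rancher pod did not help.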

Downstream cluster re-import: Cluster agent is not connected

Neither the agent nor Rancher logs any specific error; the agent hangs at Connecting to proxy.

Solution: modify AgentDeployed in the Rancher cluster object

Modify the cluster object

Reset the agent deployment status, AgentDeployed

Remove the old agent, then rerun the import command.

Migrating to 2.6.7

Required images

Helm upgrade

Docker upgrade

After the upgrade

In the "modify the agent configuration" step, only the secret needs to be changed; the agent deployment does not.