Kubeflow installation steps

Install version 1.4.1 following the procedure in kubeflow/manifests.

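It is best to follow the installation order and install the components one at a time. Pay attention to the kustomize version and the Kubernetes version.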

Private deployment

Collect as many of the image addresses used by Kubeflow as possible.

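get_images.sh itself is not shown here. As a rough stand-in, the Python sketch below collects every literal `image:` field with a regular expression. The function name `extract_images` is hypothetical. The sketch assumes the manifests have already been rendered into one file, for example with `kustomize build example > manifests.yaml`. It cannot see images that are assembled at runtime or stored inside ConfigMap data, which may be why some extra images still have to be added by hand.

```python
from __future__ import annotations

import re

# "image: <ref>" lines in rendered YAML, with an optional "- " list marker
# and optional quotes around the value. imagePullPolicy does not match.
IMAGE_LINE = re.compile(r"""^\s*(?:-\s+)?image:\s*["']?([^\s"']+)["']?\s*$""", re.MULTILINE)


def extract_images(manifest_text: str) -> list[str]:
    """Return the unique image references found in rendered manifests, sorted."""
    return sorted(set(IMAGE_LINE.findall(manifest_text)))


sample = """
apiVersion: apps/v1
kind: Deployment
spec:
  template:
    spec:
      containers:
      - name: proxy
        image: docker.io/istio/proxyv2:1.9.6
        imagePullPolicy: IfNotPresent
      - image: "gcr.io/arrikto/kubeflow/oidc-authservice:28c59ef"
        name: authservice
"""
print("\n".join(extract_images(sample)))
```

Writing the result to images.txt, one reference per line, gives the input for the push step.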

Pull the images and push them to the private registry.

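Below is a minimal sketch of the pull/tag/push step. It assumes Docker runs on the machine doing the mirroring. It also assumes the target layout shown in the table under "Solution": the registry host, then `library/`, then the full original path. `push_commands` and the registry value are hypothetical. The commands are only built, not executed, so they can be reviewed first. Digest-pinned references are rejected because `docker tag` refuses to create a tag that contains a digest.

```python
from __future__ import annotations


def push_commands(image: str, registry: str, project: str = "library") -> list[list[str]]:
    """Build docker pull/tag/push argument lists that mirror one image.

    The target keeps the whole source path, including the source registry
    host, under the given project:
    docker.io/istio/proxyv2:1.9.6 -> <registry>/library/docker.io/istio/proxyv2:1.9.6
    """
    if "@" in image:
        # A digest reference would end up in the target name, which docker tag refuses.
        raise ValueError(f"digest reference needs a tag before mirroring: {image}")
    target = f"{registry}/{project}/{image}"
    return [
        ["docker", "pull", image],
        ["docker", "tag", image, target],
        ["docker", "push", target],
    ]


# Print the commands for review; pass each list to subprocess.run(cmd, check=True) to execute.
for cmd in push_commands("docker.io/istio/proxyv2:1.9.6", "registry.example.com:8080"):
    print(" ".join(cmd))
```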

The istio/proxy image has to be pushed separately; it cannot simply be pushed together with all the others.

Mirroring the images

The required images are listed in images.txt, which is generated by get_images.sh, plus a few extra images that the script misses.

push_images.sh pulls these images and pushes them to the private registry.

Generate manifests.yaml and modify it


Deploy with the modified manifests.yaml

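The kubeflow/manifests README wraps `kubectl apply` in a retry loop. Some resources depend on CRDs and webhooks that are not ready on the first pass, so the first apply often fails. Below is a Python sketch of the same idea applied to the modified file. `apply_until_ok` and its defaults (30 attempts, 10 s apart) are hypothetical choices. The runner is injectable so the loop can be tried without a cluster.

```python
from __future__ import annotations

import subprocess
import time
from typing import Callable, Sequence


def run_cmd(cmd: Sequence[str]) -> int:
    """Run a command and return its exit code (output goes to the terminal)."""
    return subprocess.run(list(cmd), check=False).returncode


def apply_until_ok(
    path: str,
    run: Callable[[Sequence[str]], int] = run_cmd,
    retries: int = 30,
    delay: float = 10.0,
) -> int:
    """Re-run `kubectl apply -f <path>` until it exits with 0.

    Returns the number of attempts used; raises RuntimeError if every
    attempt fails.
    """
    cmd = ["kubectl", "apply", "-f", path]
    for attempt in range(1, retries + 1):
        if run(cmd) == 0:
            return attempt
        if attempt < retries:
            print(f"apply attempt {attempt} failed, retrying in {delay}s")
            time.sleep(delay)
    raise RuntimeError(f"kubectl apply -f {path} still failing after {retries} attempts")
```

Usage against a real cluster is simply `apply_until_ok("manifests.yaml")`.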

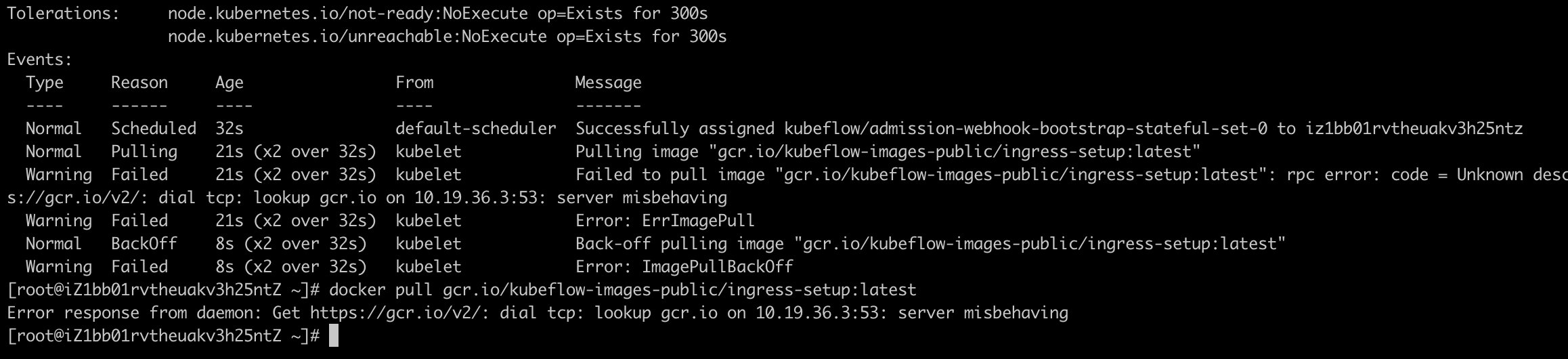

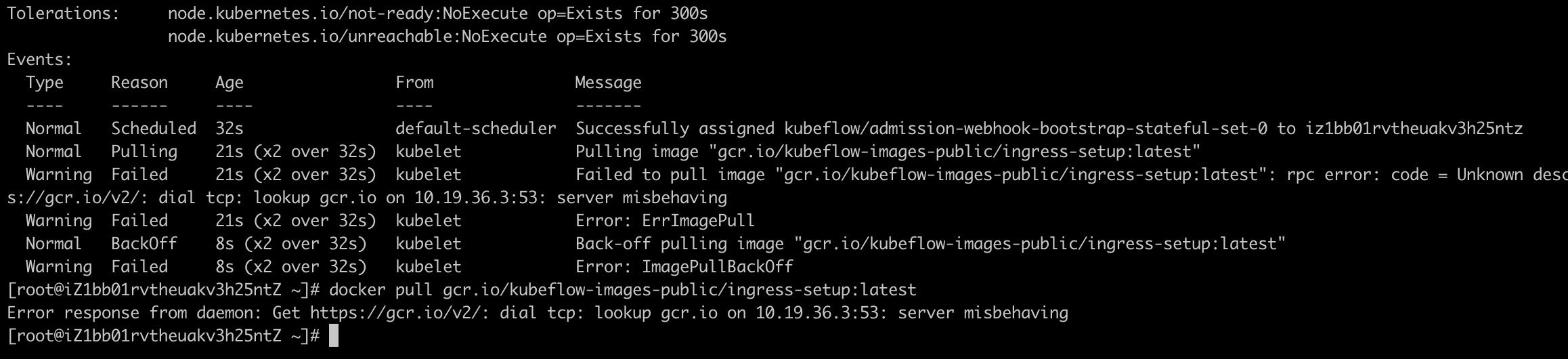

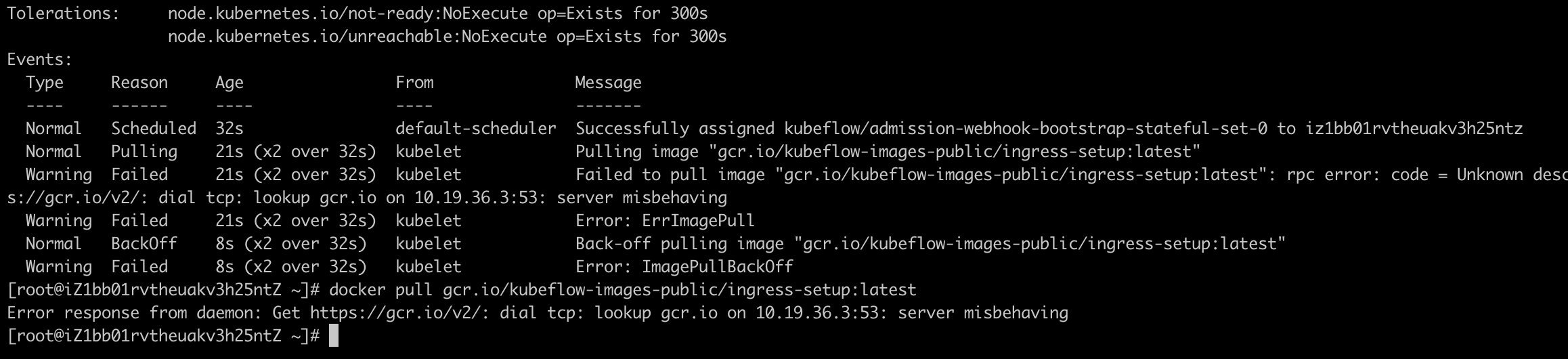

Problems encountered

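- gcr.io/arrikto/kubeflow/oidc-authservice:28c59ef could not be pulled after it was pushed to the private registry; its registry address has to be changed.

- Images referenced by a sha256 digest cannot be pulled after mirroring, because the digest changes.

- Images with many path levels cannot be pulled unless the project name is specified, even after they have been pushed to the library project.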

Solution

push_img.sh converts each images.txt entry into a push tag. In manifest.yaml, the corresponding image names are currently replaced by hand.

| images.txt entry | Push tag | Image in manifest.yaml |
|---|---|---|
| docker.io/istio/proxyv2:1.9.6 | xxx:xx/library/docker.io/istio/proxyv2:1.9.6 | library/docker.io/istio/proxyv2:1.9.6 |
| gcr.io/knative-releases/knative.dev/eventing/cmd/broker/filter@sha256:0e25aa1613a3a1779b3f7b7f863e651e5f37520a7f6808ccad2164cc2b6a9b12 | xxx:xx/library/gcr.io/knative-releases/knative.dev/eventing/cmd/broker/filter:kf-manifests-1.4.1 | library/gcr.io/knative-releases/knative.dev/eventing/cmd/broker/filter:kf-manifests-1.4.1 |
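The conversion in the table can be written as a small function, and the same mapping could drive the manifest edit that is currently done by hand. This is a hedged sketch. `private_ref`, `push_tag` and `rewrite_manifest` are hypothetical names, and the fixed tag for digest-pinned images is copied from the table. `rewrite_manifest` only touches literal `image:` fields, so images referenced elsewhere (ConfigMap JSON, Istio settings) would still need manual edits.

```python
from __future__ import annotations

import re

# Fixed tag for digest-pinned images, copied from the table above.
DIGEST_TAG = "kf-manifests-1.4.1"

# A literal "image:" key (not imagePullPolicy:, not some-image:) and its value.
IMAGE_FIELD = re.compile(r"""((?<![\w-])image:\s*["']?)([^\s"']+)""")


def private_ref(image: str, project: str = "library", digest_tag: str = DIGEST_TAG) -> str:
    """images.txt entry -> reference inside the private registry (no host)."""
    if "@sha256:" in image:
        # The digest changes when the image is re-pushed, so pin a tag instead.
        image = image.split("@", 1)[0] + ":" + digest_tag
    return f"{project}/{image}"


def push_tag(image: str, registry: str, project: str = "library") -> str:
    """Full tag to push to: registry host + private_ref()."""
    return f"{registry}/{private_ref(image, project)}"


def rewrite_manifest(text: str, registry: str = "", project: str = "library") -> str:
    """Rewrite every literal image: value in rendered manifests.

    With registry="" the result matches the manifest.yaml column above;
    pass a host such as "xxx.xxx.xx.203:8080" for fully qualified names.
    """
    prefix = f"{registry}/" if registry else ""

    def repl(match: re.Match) -> str:
        return match.group(1) + prefix + private_ref(match.group(2), project)

    return IMAGE_FIELD.sub(repl, text)
```

Run `rewrite_manifest` once on the freshly generated file. Running it again would prefix the already rewritten references a second time.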

kfserving

The ConfigMap inferenceservice-config has to be modified.

The image value must be the full path, e.g. xxx.xxx.xx.203:8080/library/pytorch/torchserve-kfs; otherwise the image cannot be pulled.
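Below is a sketch of that ConfigMap edit. It assumes the `predictors` key holds a JSON document in which each runtime entry has an `image` field. The nesting differs between KFServing versions, so the code walks the whole document instead of hard-coding paths. `has_registry_host` applies Docker's rule for recognizing a registry host: the first path component contains `.` or `:`, or is `localhost`. Images that already name a registry are left untouched. All function names are hypothetical.

```python
from __future__ import annotations

import json
from typing import Any


def has_registry_host(image: str) -> bool:
    """Docker's rule: the first path component is a host if it has '.' or ':' or is localhost."""
    first, sep, _ = image.partition("/")
    return bool(sep) and ("." in first or ":" in first or first == "localhost")


def qualify_images(node: Any, registry: str) -> Any:
    """Recursively prefix every 'image' string that lacks a registry host."""
    if isinstance(node, dict):
        out = {}
        for key, value in node.items():
            if key == "image" and isinstance(value, str) and not has_registry_host(value):
                out[key] = f"{registry}/{value}"
            else:
                out[key] = qualify_images(value, registry)
        return out
    if isinstance(node, list):
        return [qualify_images(item, registry) for item in node]
    return node


def patch_predictors(configmap_data: dict[str, str], registry: str) -> dict[str, str]:
    """Return a copy of the ConfigMap data with the 'predictors' JSON patched."""
    predictors = json.loads(configmap_data["predictors"])
    patched = dict(configmap_data)
    patched["predictors"] = json.dumps(qualify_images(predictors, registry), indent=4)
    return patched
```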

Installation problems

service "istio-galley" not found


This is probably caused by an incomplete uninstall.


Then apply again.
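The error text most likely means the API server tried to call an admission webhook whose Service, istio-galley, no longer exists. The sketch below assumes the leftover is a ValidatingWebhookConfiguration from a previous Istio install; a MutatingWebhookConfiguration has the same shape and can be checked the same way. It finds the offending configurations in the parsed output of `kubectl get validatingwebhookconfigurations -o json`. They could then be removed with `kubectl delete validatingwebhookconfiguration <name>` before applying again. `webhooks_pointing_at` is a hypothetical helper.

```python
from __future__ import annotations


def webhooks_pointing_at(service: str, listing: dict) -> list[str]:
    """Names of webhook configurations with a webhook backed by `service`.

    `listing` is the parsed JSON of
    `kubectl get validatingwebhookconfigurations -o json`.
    """
    names = []
    for item in listing.get("items", []):
        for hook in item.get("webhooks") or []:
            svc = (hook.get("clientConfig") or {}).get("service") or {}
            if svc.get("name") == service:
                names.append(item["metadata"]["name"])
                break  # one match is enough for this configuration
    return names
```

Webhooks configured with a `url` instead of a `service` are skipped. Typical usage is `webhooks_pointing_at("istio-galley", json.loads(output))`.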

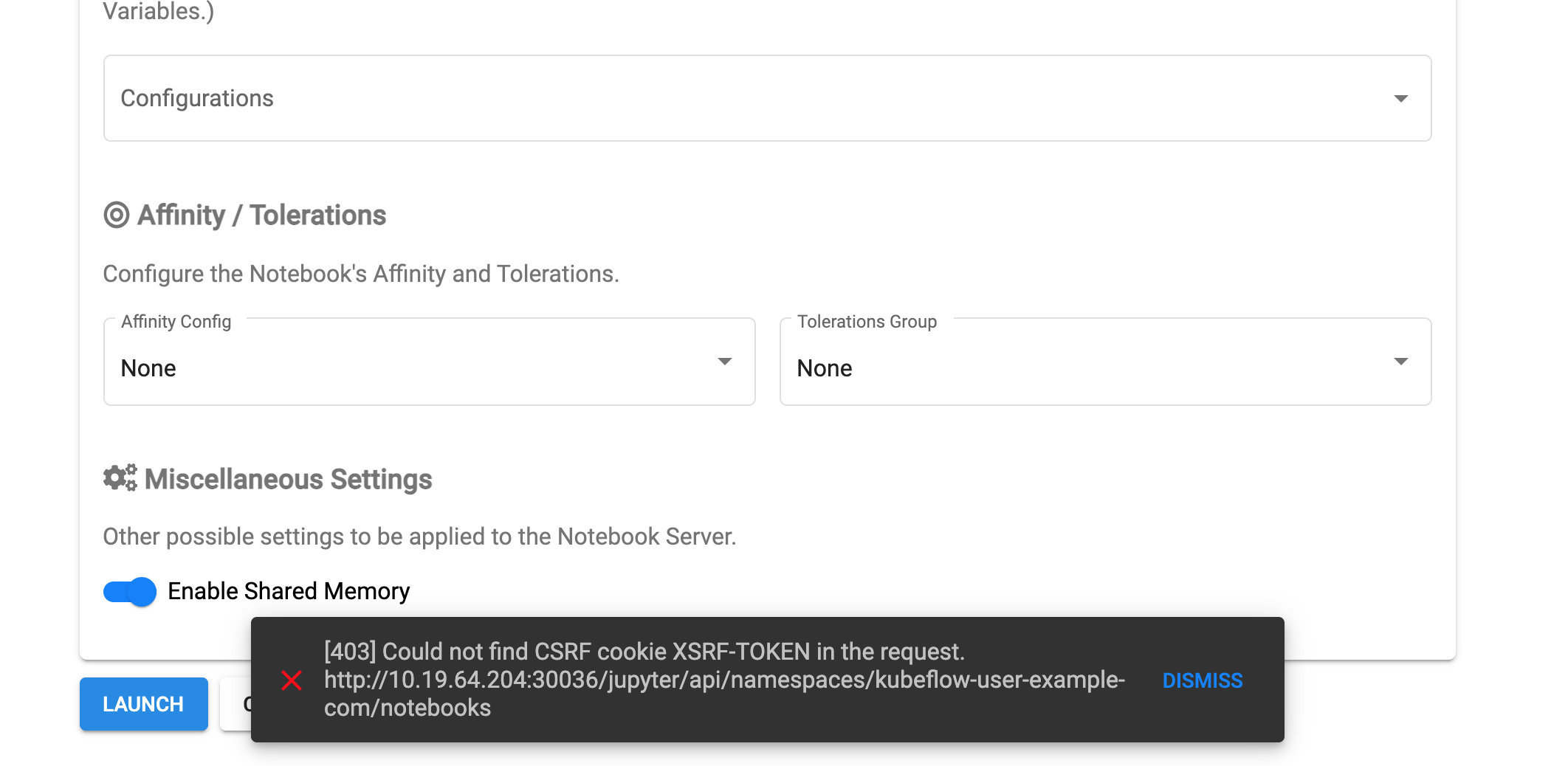

HTTP settings

This has already been changed in manifests.yml.

- centraldashboard

- jupyter-web-app-deployment

- katib-ui

- kfserving-models-web-app

- ml-pipeline-ui

- tensorboards-web-app-deployment

- volumes-web-app-deployment

Add an env parameter to each Deployment named above.

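The env snippet itself is not shown. For plain-HTTP access, the kubeflow/kubeflow issue listed under the HTTPS references is commonly resolved by setting `APP_SECURE_COOKIES=false`. This sketch assumes that variable, but it may not apply to every Deployment in the list, so check what each one actually reads. `add_env` works on one parsed manifest document and returns a patched copy. Any existing entry with the same name is replaced, so the value is not duplicated.

```python
from __future__ import annotations

import copy

# Assumed variable and value; verify against each web app before using.
ENV = {"name": "APP_SECURE_COOKIES", "value": "false"}

TARGETS = {
    "centraldashboard",
    "jupyter-web-app-deployment",
    "katib-ui",
    "kfserving-models-web-app",
    "ml-pipeline-ui",
    "tensorboards-web-app-deployment",
    "volumes-web-app-deployment",
}


def add_env(doc: dict, env: dict = ENV, targets: set[str] = TARGETS) -> dict:
    """If `doc` is one of the target Deployments, return a copy with `env`
    set on every container; otherwise return `doc` unchanged."""
    if doc.get("kind") != "Deployment" or doc.get("metadata", {}).get("name") not in targets:
        return doc
    doc = copy.deepcopy(doc)
    for container in doc["spec"]["template"]["spec"]["containers"]:
        envs = [e for e in container.get("env", []) if e.get("name") != env["name"]]
        envs.append(dict(env))
        container["env"] = envs
    return doc
```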

References for HTTPS:

- https://github.com/kubeflow/kubeflow/issues/5803

- https://github.com/kubeflow/manifests/pull/1819/files

TokenRequest problem when installing on Kubernetes 1.19

Versions: Kubernetes 1.19.6 installed with RKE, Kubeflow 1.4

Installing the Istio components fails with this error:

MountVolume.SetUp failed for volume "istio-token" : failed to fetch token: the API server does not have TokenRequest endpoints enabled

A check script showed that the TokenRequest API is not enabled in the cluster.

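The Istio documentation checks for the TokenRequest API with `kubectl get --raw /api/v1` piped through jq, looking for the `serviceaccounts/token` resource. Below is a Python equivalent, as a sketch; `token_request_enabled` is a hypothetical name.

```python
from __future__ import annotations

import json


def token_request_enabled(api_v1_json: str) -> bool:
    """True if `kubectl get --raw /api/v1` lists the serviceaccounts/token
    subresource, i.e. the TokenRequest API is served."""
    resources = json.loads(api_v1_json).get("resources", [])
    return any(r.get("name") == "serviceaccounts/token" for r in resources)
```

The input can come from `subprocess.run(["kubectl", "get", "--raw", "/api/v1"], capture_output=True, text=True, check=True).stdout`. With `first-party-jwt`, Istio uses the regular service account token mounted into the pod rather than a projected token. That is why it does not need this API.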

Solution

Set the jwtPolicy value:

values.global.jwtPolicy=first-party-jwt

Reference: https://stackoverflow.com/questions/64641078/how-to-install-kubeflow-on-existing-on-prem-kubernetes-cluster

In version 1.6, the image address docker.io/istio/proxyv2:1.14.1 cannot be changed.

The only option is to pull the image and retag it manually.

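Below is a sketch of the pull-and-retag workaround, under several assumptions:

- Docker is the container runtime on the nodes.
- The image was mirrored with the same `<registry>/library/<original path>` layout used in the earlier table.
- The sidecar's pull policy lets the kubelet use a locally present image (IfNotPresent).

The commands would have to run on every node that can host an Istio sidecar. `retag_commands` and the registry value are hypothetical.

```python
from __future__ import annotations


def retag_commands(original: str, registry: str, project: str = "library") -> list[list[str]]:
    """Commands to run on a node: pull the mirrored copy, then tag it with the
    original name so the kubelet finds the image locally."""
    mirrored = f"{registry}/{project}/{original}"
    return [
        ["docker", "pull", mirrored],
        ["docker", "tag", mirrored, original],
    ]


for cmd in retag_commands("docker.io/istio/proxyv2:1.14.1", "registry.example.com:8080"):
    print(" ".join(cmd))
```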

In 1.6, deploying an InferenceService fails because images cannot be pulled from the private registry


Image names in the ClusterServingRuntime custom resources must not omit the registry domain; otherwise an error is reported.


They have to be changed to use the full image path, including the registry.

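When the registry part is omitted, an image reference is expanded to Docker Hub. This is likely why a short name in a ClusterServingRuntime does not resolve to the private registry. The sketch shows Docker's expansion rule, plus a check that lists runtime images without an explicit registry. It assumes the runtime is a parsed dict with images under `spec.containers`. All function names are hypothetical.

```python
from __future__ import annotations


def has_registry_host(image: str) -> bool:
    """Docker's rule: the first path component is a host if it has '.' or ':' or is localhost."""
    first, sep, _ = image.partition("/")
    return bool(sep) and ("." in first or ":" in first or first == "localhost")


def normalize(image: str) -> str:
    """Expand a reference the way Docker does when no registry is given."""
    if has_registry_host(image):
        return image
    if "/" not in image:
        return f"docker.io/library/{image}"  # official images live under library/
    return f"docker.io/{image}"


def unqualified_images(runtime: dict) -> list[str]:
    """Images in a (Cluster)ServingRuntime spec that rely on the default registry."""
    containers = runtime.get("spec", {}).get("containers", [])
    return [c["image"] for c in containers if "image" in c and not has_registry_host(c["image"])]
```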

The x509 certificate problem


Skip the certificate verification.

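One way to skip the verification assumes the x509 error comes from Knative Serving resolving image tags to digests against a registry with a self-signed certificate. Under that assumption, the registry can be listed under `registries-skipping-tag-resolving` in the `config-deployment` ConfigMap in the knative-serving namespace. Below is a sketch of that data edit. `skip_tag_resolution` and the registry value are hypothetical. The original fix is not shown, so this may differ from what was actually done.

```python
from __future__ import annotations

KEY = "registries-skipping-tag-resolving"


def skip_tag_resolution(data: dict[str, str], registry: str) -> dict[str, str]:
    """Return a copy of config-deployment data with `registry` added to the
    comma-separated skip list (calling it twice changes nothing)."""
    current = [r.strip() for r in data.get(KEY, "").split(",") if r.strip()]
    if registry not in current:
        current.append(registry)
    patched = dict(data)
    patched[KEY] = ",".join(current)
    return patched
```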

After making these changes, redeploy the InferenceService.

Multi-user issues

- https://v1-4-branch.kubeflow.org/docs/components/multi-tenancy/getting-started/

Adding a user

add_profile.yaml

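The contents of add_profile.yaml and the dex change are not shown. Below is a sketch of the two objects a new user usually needs: a Profile owned by the user, and an entry in dex's `staticPasswords` list (fields `email`, `hash`, `username`, `userID`). It assumes the `kubeflow.org/v1` Profile API; check which versions the installed Profile CRD serves. The function names and the example user are hypothetical. `kubectl apply -f` also accepts the JSON output.

```python
from __future__ import annotations

import json


def profile_manifest(name: str, owner_email: str) -> dict:
    """A minimal Profile (one namespace) owned by a single user."""
    return {
        "apiVersion": "kubeflow.org/v1",
        "kind": "Profile",
        "metadata": {"name": name},
        "spec": {"owner": {"kind": "User", "name": owner_email}},
    }


def dex_static_password(email: str, bcrypt_hash: str, username: str, user_id: str) -> dict:
    """One entry for the staticPasswords list in the dex configuration."""
    return {"email": email, "hash": bcrypt_hash, "username": username, "userID": user_id}


print(json.dumps(profile_manifest("alice", "alice@example.com"), indent=2))
```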

Generating the hash requires pip install passlib bcrypt.


Type the password and press Enter to get the required hash.
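Here is the hash step written out. `make_hash` uses passlib with the settings used in the kubeflow/manifests README one-liner: bcrypt, cost 12, `2y` prefix. `looks_like_bcrypt` is a hypothetical sanity check to run before pasting the value into the dex configuration. passlib is imported inside `make_hash`, so the check itself needs only the standard library.

```python
import re

# Modular-crypt bcrypt string: $2a$/$2b$/$2y$, two-digit cost, then 53 characters
# of salt (22) and digest (31) in bcrypt's base64 alphabet.
BCRYPT_RE = re.compile(r"\$2[aby]\$\d{2}\$[./A-Za-z0-9]{53}")


def make_hash(password: str) -> str:
    """bcrypt hash for dex; needs `pip install passlib bcrypt`."""
    from passlib.hash import bcrypt  # imported here so looks_like_bcrypt works without passlib

    return bcrypt.using(rounds=12, ident="2y").hash(password)


def looks_like_bcrypt(value: str) -> bool:
    """Cheap format check before pasting a hash into the dex config."""
    return BCRYPT_RE.fullmatch(value) is not None
```

Interactive use would be `make_hash(getpass.getpass())`, which prompts for the password without echoing it.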

Finally, restart the dex pod.

Development notes

Custom Jupyter images

Build custom Jupyter images, because each person needs different package versions.

Pipeline SDK

Do not use the v2 version for now; Kubeflow reports errors with it.


kfserving

Python 3.9 is required; otherwise installing the ray[serve]==1.5.0 dependency fails.

fairing


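It cannot be installed at all. There are dependency conflicts, and pip keeps installing different versions of the same package over and over. In short, there is no way to install it.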


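kubeflow-fairing depends on several packages. kfserving is fairly recent, but the other packages are quite old (still maintained, but without new releases). fairing itself is also not compatible with the latest kfserving.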

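The main problem is that, starting with version 11.x, the Kubernetes Python client replaced swagger_types with openapi_types, and the other packages have not caught up.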

- https://github.com/kubeflow/training-operator/pull/1143 (Kubeflow Training Operator)

- AttributeError: 'V1TFJob' object has no attribute 'openapi_types'
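The incompatibility works like this. Models generated for the older client only define `swagger_types`, while the newer client's serialization code reads `openapi_types`, which produces the AttributeError above. Below is a hypothetical shim that aliases one attribute to the other. It is only a way to see the mismatch, not necessarily the approach taken in the fairing fork.

```python
def add_openapi_alias(cls: type) -> type:
    """Give a model class generated for kubernetes client < 11 (which only has
    swagger_types) the openapi_types attribute that newer clients read.
    Classes that already define openapi_types are left alone."""
    if not hasattr(cls, "openapi_types") and hasattr(cls, "swagger_types"):
        cls.openapi_types = cls.swagger_types
    return cls
```

It would be called once per affected model class, right after importing it, before any object of that class is serialized.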

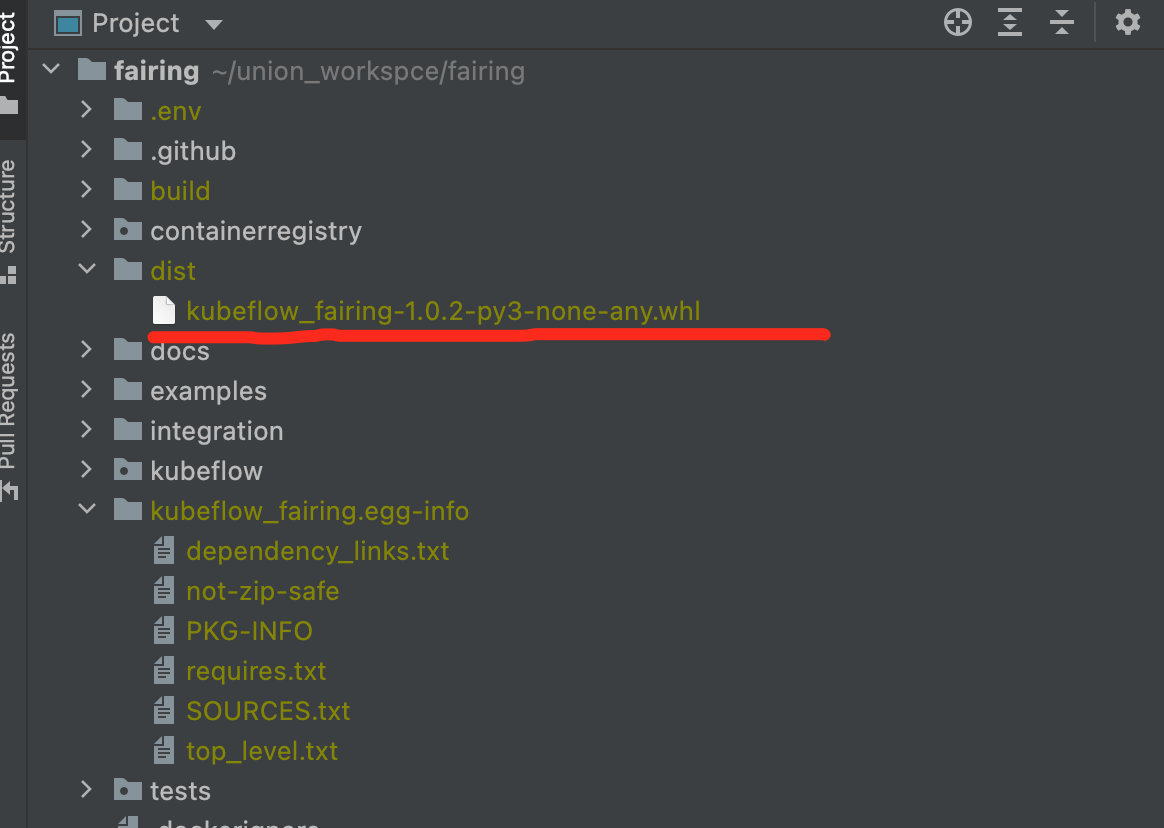

Build a .whl package from the adapt-latest-kfserving-and-training-oprator branch of our self-maintained fork of fairing.


The resulting .whl file is in the project's dist directory.

Then install it with pip install xxx.whl.

Determining the model status

- Normal

```json
"conditions": [
  ......
  {
    "lastTransitionTime": "2022-01-27T01:49:37Z",
    "status": "True",
    "type": "Ready"
  }
]
```

- Error

```json
"conditions": [
  {
    "lastTransitionTime": "2022-01-27T01:54:52Z",
    "message": "Revision \"test-sklearn-predictor-default-00001\" failed with message: 0/3 nodes are available: 1 Insufficient memory, 3 Insufficient cpu..",
    "reason": "RevisionFailed",
    "severity": "Info",
    "status": "False",
    "type": "PredictorRouteReady"
  },
  {
    "lastTransitionTime": "2022-01-27T01:54:52Z",
    "message": "Configuration \"test-sklearn-predictor-default\" does not have any ready Revision.",
    "reason": "RevisionMissing",
    "status": "False",
    "type": "Ready"
  }
]
```

Iterate over the conditions. If a condition has reason == "RevisionFailed", the model is in an error state, and that condition's message gives the cause.

- Deploying

Any other case means the model is still being deployed.
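The three rules above can be sketched as a small classifier over `status.conditions`; `model_status` is a hypothetical name. It checks for `Ready` = `True` first and then for `RevisionFailed`. That order is a judgment call, since the rules do not say what should happen if both appear.

```python
from __future__ import annotations


def model_status(conditions: list[dict]) -> tuple[str, str | None]:
    """Classify an InferenceService from its status.conditions.

    Ready=True -> ("ready", None); any RevisionFailed -> ("error", message);
    anything else -> ("deploying", None).
    """
    for cond in conditions:
        if cond.get("type") == "Ready" and cond.get("status") == "True":
            return "ready", None
    for cond in conditions:
        if cond.get("reason") == "RevisionFailed":
            return "error", cond.get("message")
    return "deploying", None
```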

To see how the conditions are assigned, look at the KServe code, mainly the InferenceServiceStatus.PropagateStatus method.


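Tracing the call chain and simplifying gives the following mapping. (I don't quite understand why the last two values are assigned this way; the variable names are odd, and the two seem to be swapped.)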

| InferenceService condition (name depends on the component) | serviceStatus condition (from the Knative client) |
|---|---|
| PredictorReady | Ready |
| PredictorRouteReady | ConfigurationsReady |
| PredictorConfigurationReady | RoutesReady |

At the top-level API, the controller first calls Knative to create the components listed above. It then creates the ingress-related resources and sets IngressReady.
