General debugging approach


Steps


Check the contents of the resolv.conf file

```shell
kubectl exec -ti dnsutils -- cat /etc/resolv.conf
```
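To judge that output, a small helper can check the two things that matter most for a ClusterFirst Pod: the first nameserver is the cluster DNS, and `svc.<cluster-domain>` is in the search list. This is a minimal sketch; `check_pod_resolv` is a hypothetical name, and it assumes the default cluster domain `cluster.local` unless you pass another one.

```shell
# Hypothetical helper: sanity-check a Pod's resolv.conf saved to a file.
# Usage: check_pod_resolv <file> <cluster-dns-ip> [cluster-domain]
check_pod_resolv() {
  local file=$1 dns_ip=$2 domain=${3:-cluster.local}
  local first_ns
  # The first "nameserver" line is the one the resolver queries first
  first_ns=$(awk '$1 == "nameserver" { print $2; exit }' "$file")
  if [ "$first_ns" != "$dns_ip" ]; then
    echo "unexpected nameserver: ${first_ns:-none} (want $dns_ip)"
    return 1
  fi
  # A ClusterFirst Pod should have svc.<cluster-domain> in its search list
  if ! awk -v want="svc.$domain" '
      $1 == "search" { for (i = 2; i <= NF; i++) if ($i == want) found = 1 }
      END { exit !found }' "$file"; then
    echo "search list lacks svc.$domain"
    return 1
  fi
  echo "ok"
}
```

For example, save the file with `kubectl exec dnsutils -- cat /etc/resolv.conf > /tmp/pod-resolv.conf` and run `check_pod_resolv /tmp/pod-resolv.conf 10.96.0.10`, using the kube-dns ClusterIP from `kubectl get svc -n kube-system`.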

Run the following lookup; if it returns an error, the CoreDNS (or kube-dns) add-on or its related Service has a problem.

```shell
kubectl exec -i -t dnsutils -- nslookup kubernetes.default
```

Check whether the DNS Pods are running

```shell
# Check that CoreDNS is running
kubectl get pods --namespace=kube-system -l k8s-app=kube-dns
NAME                      READY   STATUS    RESTARTS      AGE
coredns-95db45d46-5wggq   1/1     Running   1 (86s ago)   25h
coredns-95db45d46-8ss4w   1/1     Running   1 (86s ago)   25h

# CoreDNS logs
kubectl logs --namespace=kube-system -l k8s-app=kube-dns

# Check that the DNS Service is up
kubectl get svc --namespace=kube-system
NAME       TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)                  AGE
kube-dns   ClusterIP   10.96.0.10   <none>        53/UDP,53/TCP,9153/TCP   72d

# Check the Pod IPs behind the endpoints
kubectl get endpoints kube-dns -n kube-system
NAME       ENDPOINTS                                             AGE
kube-dns   10.1.0.81:53,10.1.0.82:53,10.1.0.81:53 + 3 more...   72d

# Inspect the CoreDNS ConfigMap
kubectl -n kube-system edit configmap coredns
```
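When there are many DNS replicas, eyeballing the READY and STATUS columns gets tedious. The sketch below filters `kubectl get pods` table output; `pods_not_ready` is a hypothetical helper name, and it assumes the default column order (NAME, READY, STATUS, ...).

```shell
# Hypothetical helper: reads `kubectl get pods` table output on stdin and
# prints the name of every Pod that is not fully ready or not Running.
# Exits non-zero if any such Pod is found.
pods_not_ready() {
  awk 'NR > 1 {
         split($2, ready, "/")   # READY column, e.g. "1/1"
         if (ready[1] != ready[2] || $3 != "Running") { print $1; bad = 1 }
       }
       END { exit bad ? 1 : 0 }'
}
```

Usage: `kubectl get pods -n kube-system -l k8s-app=kube-dns | pods_not_ready && echo "all DNS Pods ready"`. To list only the endpoint IPs, `kubectl get endpoints kube-dns -n kube-system -o jsonpath='{.subsets[*].addresses[*].ip}'` works; an empty result means the Service has no ready backends.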

Test that Service names resolve

```shell
# Check that names resolve and that your Service is in the namespace you expect
kubectl exec -it dnsutils -- nslookup <service-name>
kubectl exec -it dnsutils -- nslookup <service-name>.<namespace>
kubectl exec -it dnsutils -- nslookup <service-name>.<namespace>.svc.cluster.local
```
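The three lookups can be looped over in one go. A minimal sketch with hypothetical helper names; it assumes `nslookup` exits non-zero on a failed lookup and that `kubectl exec` passes that exit status through, so verify both with your dnsutils image first. Note that the short name only resolves when dnsutils runs in the same namespace as the Service, because only `<pod-namespace>.svc.<domain>` is in its search list; a FAIL on the short name alone usually just means different namespaces.

```shell
# Hypothetical helpers. svc_dns_names prints the three ways a Pod can name
# a Service: short name, <svc>.<ns>, and the fully qualified name.
svc_dns_names() {
  local svc=$1 ns=$2 domain=${3:-cluster.local}
  printf '%s\n' "$svc" "$svc.$ns" "$svc.$ns.svc.$domain"
}

# check_svc_dns tries each form from inside the dnsutils Pod.
# Assumes nslookup exits non-zero on a failed lookup and that
# kubectl exec passes that exit status through.
check_svc_dns() {
  local name
  for name in $(svc_dns_names "$@"); do
    if kubectl exec dnsutils -- nslookup "$name" >/dev/null 2>&1; then
      echo "ok   $name"
    else
      echo "FAIL $name"
    fi
  done
}
```

Usage: `check_svc_dns <service-name> <namespace>`.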

Check the DNS mapping of Pods and Services

That is, check whether the workloads and Services carry any unusual configuration: the Pod's FQDN, its DNS settings, search-domain limits, and so on.
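When checking a Pod's FQDN it helps to know which names Kubernetes DNS is expected to serve. The sketch below prints the two standard forms: the per-IP Pod A record and the hostname/subdomain form. The helper names are hypothetical, and Pod A records additionally depend on the CoreDNS `kubernetes` plugin's `pods` option (the stock Corefile uses `pods insecure`).

```shell
# Hypothetical helpers that print the DNS names a Pod is expected to have.
# pod_a_record: <ip-with-dashes>.<namespace>.pod.<cluster-domain>
pod_a_record() {
  local ip=$1 ns=$2 domain=${3:-cluster.local}
  printf '%s.%s.pod.%s\n' "$(printf '%s' "$ip" | tr . -)" "$ns" "$domain"
}

# pod_fqdn: <hostname>.<subdomain>.<namespace>.svc.<cluster-domain>, which only
# resolves when spec.hostname and spec.subdomain are set and a headless
# Service with the same name as the subdomain exists in the namespace.
pod_fqdn() {
  local hostname=$1 subdomain=$2 ns=$3 domain=${4:-cluster.local}
  printf '%s.%s.%s.svc.%s\n' "$hostname" "$subdomain" "$ns" "$domain"
}
```

To see a Pod's DNS policy and any custom settings, `kubectl get pod dnsutils -o jsonpath='{.spec.dnsPolicy}{"\n"}{.spec.dnsConfig}{"\n"}'` is a quick start.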

bridge-nf-call-iptables was changed

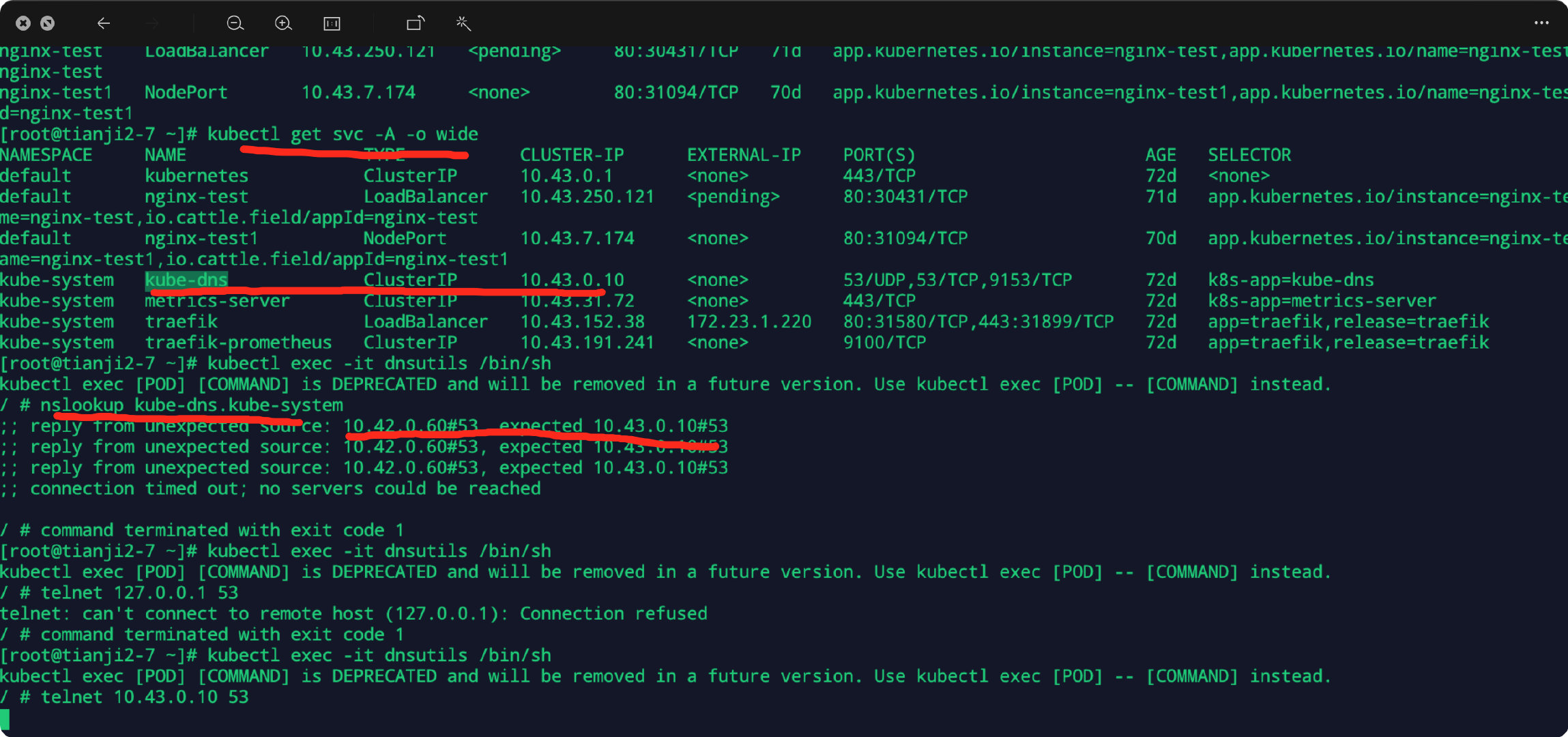

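Symptom: name resolution failed even though CoreDNS was running normally. Connections to the Service timed out, while connecting by IP worked fine.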


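That means something is wrong with iptables on the path from the ClusterIP to the Pod IP. Checking the endpoints again showed the mapping was correct, so iptables had to be the problem. On standard Kubernetes you should also check kube-proxy; I was using k3s.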
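One way to look at the iptables side is to filter the NAT table for the Service's ClusterIP. A minimal sketch, assuming kube-proxy (or k3s's embedded equivalent) runs in iptables mode; IPVS mode programs different structures. `svc_nat_rules` is a hypothetical helper name.

```shell
# Hypothetical helper: filter `iptables-save -t nat` output (stdin) down to the
# rules that match a given ClusterIP as their destination.
svc_nat_rules() {
  local cluster_ip=$1
  # iptables-save prints destinations as "-d <ip>/32"
  grep -F -- "-d $cluster_ip/32"
}
```

Usage: `iptables-save -t nat | svc_nat_rules 10.96.0.10`. In iptables mode you would expect KUBE-SERVICES rules jumping to KUBE-SVC-* chains for each Service port. No output suggests the proxy rules are missing; rules present while traffic still times out points elsewhere, for example at the bridge settings.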

CentOS


I’m running Kubernetes 1.8.0 on Ubuntu 16; to get rid of the “reply from unexpected source” error you have to:

`modprobe br_netfilter` (see http://ebtables.netfilter.org/documentation/bridge-nf.html)

For CentOS, I fixed this issue using: `echo '1' > /proc/sys/net/bridge/bridge-nf-call-iptables`

The problem is that I did it before, it was working and for some reason it changed back to 0 again after a while. I will have to keep monitoring.

Starting the k3s server or agent configures kernel parameters automatically, and by the look of it this parameter had been changed.
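Because the value can be reset silently, a quick check you can run by hand or from a periodic job is useful. A minimal sketch with a hypothetical helper name; the path argument exists only so it can be tried against a scratch file.

```shell
# Hypothetical helper: report the current bridge-nf-call-iptables value.
# The /proc entry only exists while the br_netfilter module is loaded.
check_bridge_nf() {
  local f=${1:-/proc/sys/net/bridge/bridge-nf-call-iptables}
  if [ ! -r "$f" ]; then
    echo "missing: $f (is br_netfilter loaded?)"
    return 2
  fi
  if [ "$(cat "$f")" = "1" ]; then
    echo "enabled"
  else
    echo "DISABLED"
    return 1
  fi
}
```

For example, `check_bridge_nf || sysctl -w net.bridge.bridge-nf-call-iptables=1` re-enables it at runtime, though that alone does not survive a reboot.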


Why Kubernetes environments require bridge-nf-call-iptables to be enabled

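Enabling the bridge-nf-call-iptables kernel parameter (setting it to 1) makes the bridge device apply the layer-3 rules configured in iptables (including conntrack) even when it forwards frames at layer 2. Without it, a request from a Pod to a ClusterIP is DNAT-ed to a backend Pod, but if that backend sits on the same bridge, its reply is forwarded at layer 2 and never passes through conntrack, so the source address is not translated back and the client sees a reply from the Pod IP instead of the Service IP (the “reply from unexpected source” error quoted above). Enabling this parameter fixes that same-node Service communication problem, which is why most Kubernetes environments require it.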
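A value set with `echo` or `sysctl -w` is lost on reboot, so it is common to persist it. The sketch writes the same two files the kubeadm installation guide uses; the function name and its directory arguments (added so it can be tried against scratch directories) are hypothetical. If k3s or another agent rewrites the setting at startup, check that tool's own configuration as well.

```shell
# Hypothetical helper: persist br_netfilter loading and the bridge sysctls.
# Defaults are the standard systemd locations; pass other dirs to test.
persist_bridge_nf() {
  local modules_dir=${1:-/etc/modules-load.d} sysctl_dir=${2:-/etc/sysctl.d}
  # Load br_netfilter at boot so the net.bridge.* keys exist
  printf 'br_netfilter\n' > "$modules_dir/k8s.conf"
  # Have bridged IPv4/IPv6 traffic traverse iptables/ip6tables
  printf '%s\n' \
    'net.bridge.bridge-nf-call-iptables = 1' \
    'net.bridge.bridge-nf-call-ip6tables = 1' > "$sysctl_dir/k8s.conf"
}
```

Run as root, `persist_bridge_nf && modprobe br_netfilter && sysctl --system` also applies the settings immediately.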

Something odd in resolv.conf

dnsutils


CoreDNS log


The output shows an inexplicable openstacklocal search domain in the Pod's resolv.conf; only after removing it did names resolve normally without errors.
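Stray search domains like this are easy to miss. With `ndots:5` (the ClusterFirst default), any name with fewer than five dots is tried against every search domain before being queried as-is, so each extra domain adds lookups. The sketch below lists search domains that are neither the cluster domain nor a subdomain of it; `foreign_search_domains` is a hypothetical name, and a flagged domain is not necessarily wrong (corporate search domains are sometimes intended).

```shell
# Hypothetical helper: print search domains in a resolv.conf that are not
# the cluster domain or a subdomain of it.
# Usage: foreign_search_domains <file> [cluster-domain]
foreign_search_domains() {
  local file=$1 domain=${2:-cluster.local}
  awk -v d="$domain" '$1 == "search" {
      for (i = 2; i <= NF; i++) {
        n = length($i) - length(d)
        # keep it only if it is neither d itself nor something ending in ".d"
        if (!($i == d || (n > 0 && substr($i, n) == "." d))) print $i
      }
    }' "$file"
}
```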


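The Pod's DNS configuration is actually generated by the kubelet. The main kubelet parameters involved are `--cluster-dns`, `--cluster-domain` and `--resolv-conf`.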

You can view the kubelet's startup parameters and configuration with `docker inspect kubelet | less`.
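Rather than paging through the full inspect output, the DNS-related flags can be filtered out. A minimal sketch with a hypothetical helper name; it only matches the `--flag=value` form, so flags written as `--flag value` or settings in a KubeletConfiguration file (`clusterDNS`, `clusterDomain`, `resolvConf`) need to be checked separately.

```shell
# Hypothetical helper: pick the DNS-related flags out of a kubelet command
# line read from stdin. Only the "--flag=value" form is matched.
kubelet_dns_flags() {
  tr ' ' '\n' | grep -E -- '^--(cluster-dns|cluster-domain|resolv-conf)='
}
```

For a containerized kubelet: `docker inspect --format '{{join .Args " "}}' kubelet | kubelet_dns_flags`; for a kubelet running as a host process: `ps -o args= -C kubelet | kubelet_dns_flags`.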

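Reading GetPodDNS in the Kubernetes 1.23 source shows that, under the default ClusterFirst policy, the kubelet combines the parameters above to generate the Pod's DNS configuration. For a detailed walkthrough, refer to a source-code analysis of GetPodDNS.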
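The main ClusterFirst path can be approximated in a few lines, which makes it clear how a host search domain ends up inside a Pod. This is a simplified sketch, not the kubelet's code: `pod_resolv_conf` is a hypothetical name, and it ignores `spec.dnsConfig` merging, search-list length limits, duplicate removal and the fallback used when no cluster DNS is configured.

```shell
# Hypothetical, simplified sketch of the resolv.conf the kubelet generates
# for a dnsPolicy: ClusterFirst Pod.
# Usage: pod_resolv_conf <namespace> <cluster-dns-ip> <cluster-domain> <host-resolv-conf>
pod_resolv_conf() {
  local ns=$1 cluster_dns=$2 domain=$3 host_resolv=$4
  local host_search
  # Search domains inherited from the file given to the kubelet's --resolv-conf
  host_search=$(awk '$1 == "search" { for (i = 2; i <= NF; i++) printf " %s", $i }' "$host_resolv")
  # Cluster search domains come first, then the host's
  printf 'search %s.svc.%s svc.%s %s%s\n' "$ns" "$domain" "$domain" "$domain" "$host_search"
  # The host's nameservers are replaced by the cluster DNS
  printf 'nameserver %s\n' "$cluster_dns"
  printf 'options ndots:5\n'
}
```

With a host file containing `search openstacklocal`, the generated search line ends in `openstacklocal`, while the host's own nameserver is replaced by the cluster DNS.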


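So the openstacklocal search domain must have been inherited from the host. Checking /etc/resolv.conf on the host, I found it could not be modified at all, because another program was managing it.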


Following the article “Regaining control of /etc/resolv.conf” together with the information above, it turned out the file was managed by systemd-resolved.
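A quick way to see who owns the file is to check whether it is a symlink into systemd-resolved's runtime directory. A minimal sketch with a hypothetical helper name; note that a plain file can still be managed by something else (NetworkManager or resolvconf, for example), so "regular file" does not rule that out.

```shell
# Hypothetical helper: report whether a resolv.conf is a plain file or a
# symlink, and flag the usual systemd-resolved targets.
resolv_conf_owner() {
  local f=${1:-/etc/resolv.conf} target
  if [ -L "$f" ]; then
    target=$(readlink "$f")
    case $target in
      */systemd/resolve/*) echo "systemd-resolved ($target)" ;;
      *) echo "symlink to $target" ;;
    esac
  else
    echo "regular file"
  fi
}
```

On systemd-resolved hosts /etc/resolv.conf usually points to `stub-resolv.conf`, which lists only the local stub `nameserver 127.0.0.53`, while `/run/systemd/resolve/resolv.conf` lists the real upstream servers. A common fix is to start the kubelet with `--resolv-conf=/run/systemd/resolve/resolv.conf`; kubeadm sets this automatically when it detects systemd-resolved.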

After making the change, restart the kubelet, the affected Pods, and CoreDNS.

CoreDNS may then report an error.

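This is caused by the DNS configuration containing a 127.0.x.x address: remove the `nameserver 127.0.x.x` entry from the host's /etc/resolv.conf, then do the corresponding restarts.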
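The reason a loopback entry hurts: the stock Corefile forwards upstream queries with `forward . /etc/resolv.conf`, and the CoreDNS Pods use `dnsPolicy: Default`, so they inherit the resolv.conf the kubelet was given. Inside the Pod, 127.x refers to the Pod itself, so queries loop back to CoreDNS, which its `loop` plugin detects before halting. A minimal sketch (hypothetical helper name) to spot such entries before restarting anything:

```shell
# Hypothetical helper: list loopback nameservers in a resolv.conf.
# Such entries are only reachable from the host's own network namespace.
loopback_nameservers() {
  awk '$1 == "nameserver" && ($2 ~ /^127\./ || $2 == "::1") { print $2 }' "${1:-/etc/resolv.conf}"
}
```

Run it against both /etc/resolv.conf and whatever file the kubelet's `--resolv-conf` points to; both should come back empty.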