原因

kafka连接有问题,修复这个问题后,巨量数据涌入redis,rdb和aof文件飙到48G

背景

RDB snapshots of your dataset at specified intervals

-

适合备份还原

-

启动快

-

可能会丢最新的数据,在不正常停止工作的情况下

-

如果数据集很大且CPU性能不佳,则可能导致Redis停止为客户端服务几毫秒甚至一秒钟

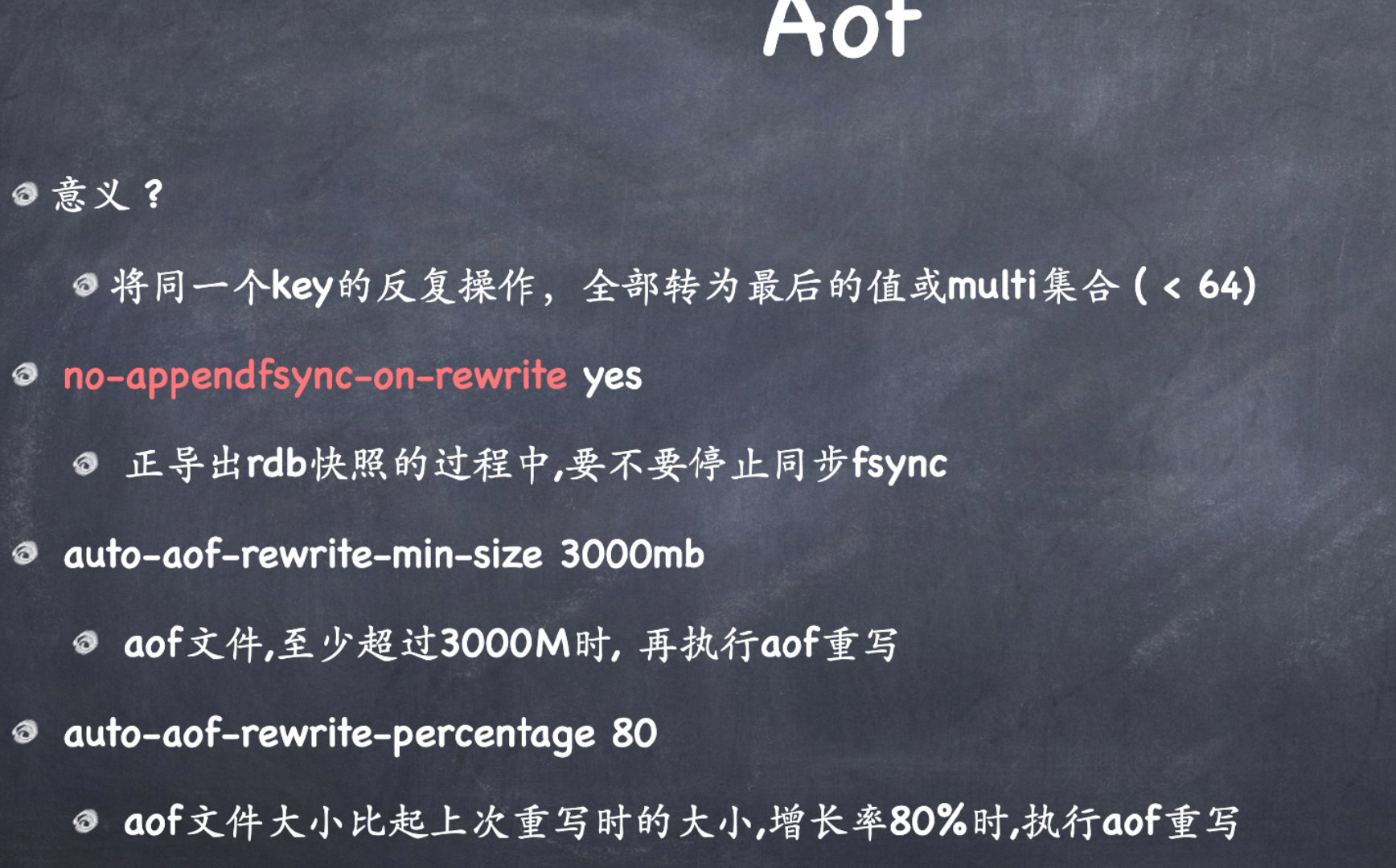

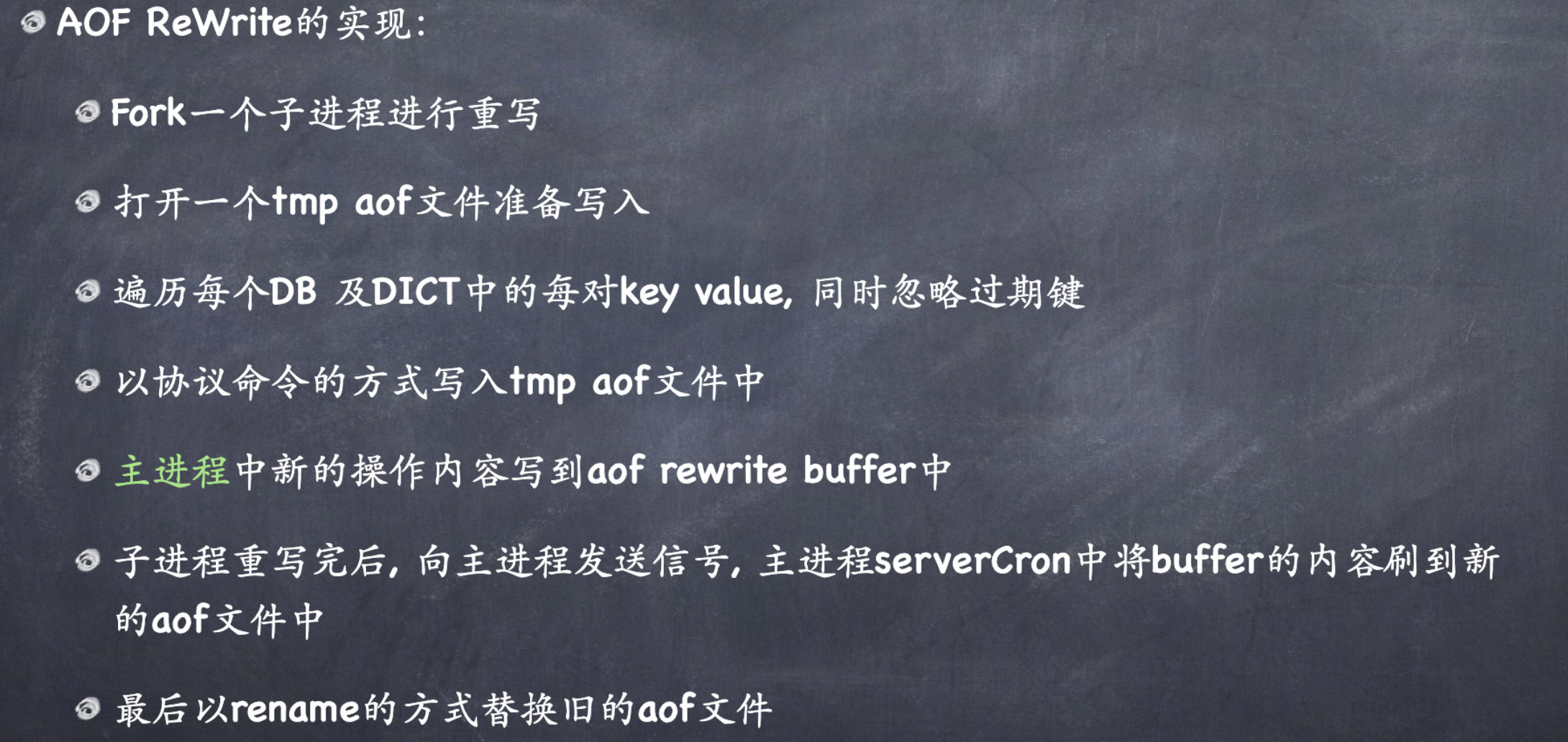

AOF

- The AOF persistence logs every write operation received by the server

- played again at server startup

- reconstructing the original dataset

- able to rewrite the log in the background when it gets too big

错误分析

强制关闭Redis快照导致不能持久化

1 | |

强制关闭Redis快照导致不能持久化,该方法只能忽略错误

1 | |

AOF fsync 时间太长

具体要看redis日志

1 | |

- 磁盘速度慢

- 短的时间内写入了太多数据

- 内存不足

LOADING Redis is loading the dataset in memory

输入任何的命令都报这个,aof文件太大导致起来很慢

- 直接不要aof文件

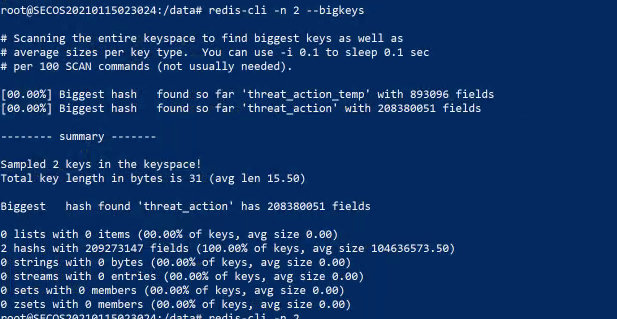

- 查看db里面大key

redis-cli -n db_number --bigkeys

- 查看某个key序列化后的长度

debug object myhash计算serializedlength的代价相对高。所以如果要统计的key比较多,就不适合这种方法

连接上redis后执行如下命令 serializedlength(将key保存为rdb文件时使用了该算法)

1 | |

- 查看正在写的内容

MONITOR - 等redis加载完aof

BGSAVE 和 BGREWRITEAOF

BGSAVE

- 通常,立即返回OK。后台跑save(持久化到硬盘),不会阻塞客户端服务

- error 已经有BGSAVE/非后台保存的操作(AOF rewrite)在跑,

BGREWRITEAOF

可以缩小aof文件,还没有后台进程进行持久化重写,才会由Redis触发