Hadoop

- Local (Standalone) Mode (default)

- Pseudo-Distributed Mode

- Fully-Distributed Mode

Pseudo-Distributed Mode

Preparation

.ssh

Check whether ~/.ssh/id_rsa and ~/.ssh/id_rsa.pub exist, i.e. whether a key for ssh localhost is already in place. If they exist, move on; if not, generate a key pair.
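
A minimal sketch of that check-and-generate step. It roughly follows the ssh-keygen / authorized_keys steps in the Apache Single Node Setup guide. The function name ensure_ssh_key is made up for this example. It takes the key directory as an argument (default ~/.ssh), so you can try it on a scratch directory first.

```shell
# ensure_ssh_key [DIR] (hypothetical helper): make sure DIR (default ~/.ssh)
# holds an RSA key pair without a passphrase and that its public key is
# authorized for logins to this machine, which is what `ssh localhost` needs.
ensure_ssh_key() {
  dir=${1:-$HOME/.ssh}
  mkdir -p "$dir" && chmod 700 "$dir" || return 1
  if [ ! -f "$dir/id_rsa" ]; then
    # No key yet: generate one with an empty passphrase (-N '').
    ssh-keygen -t rsa -N '' -f "$dir/id_rsa" || return 1
  elif [ ! -f "$dir/id_rsa.pub" ]; then
    # Private key present but the public half is missing: derive it.
    ssh-keygen -y -f "$dir/id_rsa" > "$dir/id_rsa.pub" || return 1
  fi
  touch "$dir/authorized_keys"
  # Append the public key only once, so re-running is harmless.
  if ! grep -qxF "$(cat "$dir/id_rsa.pub")" "$dir/authorized_keys"; then
    cat "$dir/id_rsa.pub" >> "$dir/authorized_keys"
  fi
  chmod 600 "$dir/authorized_keys"
}
```

After running ensure_ssh_key with no argument, ssh localhost should log in without asking for a password.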

System configuration

System Preferences -> Sharing -> Remote Login, set Allow access for: All Users

ssh login

ssh: connect to host localhost port 22: Connection refused means Remote Login is turned off.

open port 22

install hadoop

Version 3.1.1. For other versions, check the configuration in the official documentation, Single Node Setup.

Hadoop configuration

go to /usr/local/Cellar/hadoop/3.1.1/libexec/etc/hadoop

hadoop-env.sh

Get JAVA_HOME

Add it to hadoop-env.sh
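
On macOS, /usr/libexec/java_home prints the home directory of an installed JDK, and -v 1.8 asks for Java 8, the Java version Hadoop 3.1 supports. The sketch below assumes a JDK 8 is installed. set_java_home is a hypothetical helper. It drops any active export JAVA_HOME= line from the given env file and appends a fresh one, leaving commented lines alone.

```shell
# set_java_home FILE JDK_PATH (hypothetical helper): replace any uncommented
# "export JAVA_HOME=" line in FILE with a single export pointing at JDK_PATH.
set_java_home() {
  env_file=$1
  jdk=$2
  tmp="$env_file.tmp.$$"
  # Keep every line except active JAVA_HOME exports (comments stay as they are).
  grep -v '^[[:space:]]*export JAVA_HOME=' "$env_file" > "$tmp"
  printf 'export JAVA_HOME="%s"\n' "$jdk" >> "$tmp"
  mv "$tmp" "$env_file"
}
```

For example, from the configuration directory above: set_java_home hadoop-env.sh "$(/usr/libexec/java_home -v 1.8)"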

core-site.xml
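
For pseudo-distributed mode, the Apache Single Node Setup guide only sets the default file system in core-site.xml so that it points at a NameNode on localhost. write_core_site is a hypothetical helper that overwrites DIR/core-site.xml. hdfs://localhost:9000 is the guide's example address.

```shell
# write_core_site DIR (hypothetical helper): overwrite DIR/core-site.xml with a
# single-node setting that makes HDFS on localhost the default file system.
write_core_site() {
  cat > "$1/core-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://localhost:9000</value>
  </property>
</configuration>
EOF
}
```

For example: write_core_site /usr/local/Cellar/hadoop/3.1.1/libexec/etc/hadoop (back up the file first if you already changed it).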

hdfs-site.xml
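
A single-node cluster has only one DataNode, so the guide sets the replication factor to 1. Here is a sketch in the same style as the other files. write_hdfs_site is a hypothetical helper that overwrites DIR/hdfs-site.xml.

```shell
# write_hdfs_site DIR (hypothetical helper): overwrite DIR/hdfs-site.xml so that
# each HDFS block is stored once, since a single-node cluster has one DataNode.
write_hdfs_site() {
  cat > "$1/hdfs-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>dfs.replication</name>
    <value>1</value>
  </property>
</configuration>
EOF
}
```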

YARN configuration

mapred-site.xml
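
To run MapReduce jobs on YARN, the Single Node Setup guide sets mapreduce.framework.name to yarn and gives MapReduce a classpath. The sketch below is modeled on that guide, so compare it with the guide for your version. write_mapred_site is a hypothetical helper that overwrites DIR/mapred-site.xml.

```shell
# write_mapred_site DIR (hypothetical helper): overwrite DIR/mapred-site.xml so
# MapReduce jobs run on YARN and find the MapReduce jars. The quoted 'EOF'
# keeps $HADOOP_MAPRED_HOME literal, leaving it for Hadoop to resolve at run time.
write_mapred_site() {
  cat > "$1/mapred-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <property>
    <name>mapreduce.application.classpath</name>
    <value>$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/*:$HADOOP_MAPRED_HOME/share/hadoop/mapreduce/lib/*</value>
  </property>
</configuration>
EOF
}
```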

yarn-site.xml
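
yarn-site.xml does two things in the guide's example. It enables the mapreduce_shuffle auxiliary service and lists the environment variables that containers may inherit from the NodeManager. Here is a sketch modeled on that example. write_yarn_site is a hypothetical helper, and the whitelist may differ between Hadoop versions.

```shell
# write_yarn_site DIR (hypothetical helper): overwrite DIR/yarn-site.xml with the
# shuffle service MapReduce needs and the environment variables that
# NodeManager containers may inherit.
write_yarn_site() {
  cat > "$1/yarn-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <name>yarn.nodemanager.env-whitelist</name>
    <value>JAVA_HOME,HADOOP_COMMON_HOME,HADOOP_HDFS_HOME,HADOOP_CONF_DIR,CLASSPATH_PREPEND_DISTCACHE,HADOOP_YARN_HOME,HADOOP_MAPRED_HOME</value>
  </property>
</configuration>
EOF
}
```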

Start and stop

Format

hadoop
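
The usual sequence has three parts. Format HDFS once with hdfs namenode -format, start HDFS before YARN, and stop them in reverse order. hadoop_ctl is a hypothetical wrapper around the start-dfs.sh, start-yarn.sh, stop-yarn.sh and stop-dfs.sh scripts. It assumes HADOOP_HOME points at the Homebrew libexec directory, whose sbin/ holds those scripts.

```shell
# hadoop_ctl start|stop (hypothetical wrapper): start HDFS first and YARN
# second (the order used in the Single Node Setup guide); stop in reverse.
hadoop_ctl() {
  if [ -z "$HADOOP_HOME" ]; then
    echo "hadoop_ctl: HADOOP_HOME is not set" >&2
    return 1
  fi
  sbin="$HADOOP_HOME/sbin"
  case "$1" in
    start) "$sbin/start-dfs.sh" && "$sbin/start-yarn.sh" ;;
    stop)  "$sbin/stop-yarn.sh" && "$sbin/stop-dfs.sh" ;;
    *)     echo "usage: hadoop_ctl start|stop" >&2; return 2 ;;
  esac
}
```

For example: export HADOOP_HOME=/usr/local/Cellar/hadoop/3.1.1/libexec, run hdfs namenode -format once, then run hadoop_ctl start. With Hadoop 3 defaults, the NameNode web UI is at http://localhost:9870 and the ResourceManager UI is at http://localhost:8088.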

Permission denied: user=dr.who, access=READ_EXECUTE, inode="/tmp"

core-site.xml

Or

hdfs-default.xml

dfs.permissions.enabled=true # whether permission checking is enabled in HDFS; defaults to true
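
Both fixes come down to adding one property to a site file. The core-site.xml property commonly used for this error is hadoop.http.staticuser.user, the static user of the web UI (dr.who by default), set to your own user name. The alternative is to set dfs.permissions.enabled to false in hdfs-site.xml, which turns permission checking off altogether; hdfs-default.xml only holds the defaults. In the rough sketch below, add_property is a hypothetical helper that inserts a property just before the closing </configuration> tag.

```shell
# add_property FILE NAME VALUE (hypothetical helper): insert a <property>
# element just before the closing </configuration> tag of a Hadoop site file.
# Assumes </configuration> sits on its own line; VALUE is written as-is, so
# it must not contain XML special characters.
add_property() {
  file=$1
  tmp="$1.tmp.$$"
  awk -v n="$2" -v v="$3" '
    /<\/configuration>/ && !done {
      print "  <property>"
      print "    <name>" n "</name>"
      print "    <value>" v "</value>"
      print "  </property>"
      done = 1
    }
    { print }
  ' "$file" > "$tmp" && mv "$tmp" "$file"
}
```

For example, add_property core-site.xml hadoop.http.staticuser.user "$(whoami)" or add_property hdfs-site.xml dfs.permissions.enabled false. Restart HDFS afterwards.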

yarn

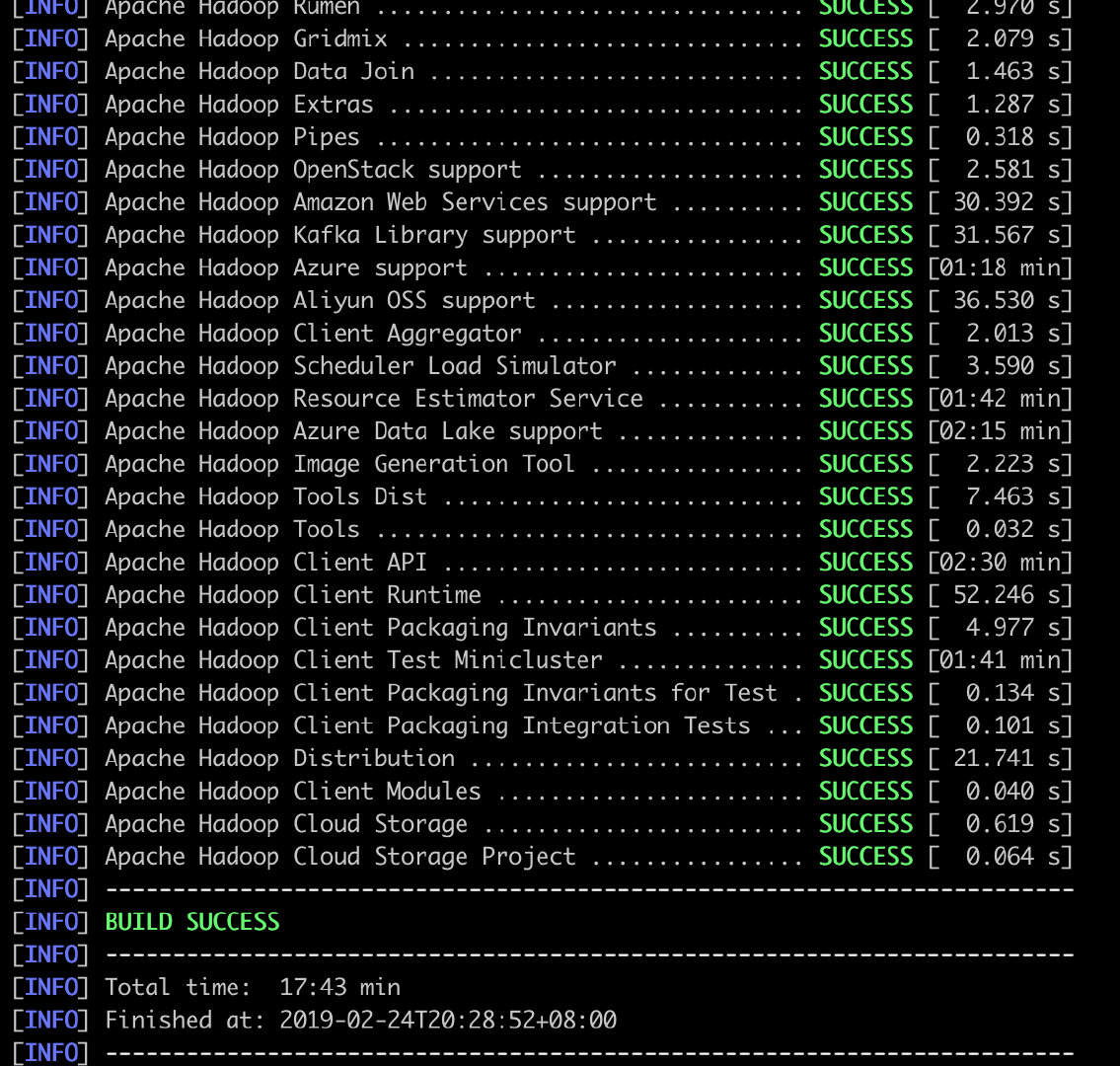

Building from source

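protobuf must be version 2.5. After installing protobuf@2.5, set up PATH as its install notes say; otherwise the build fails with:

protoc version is 'libprotoc 3.6.1', expected version is '2.5.0'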

hadoop checknative -a

mvn package -Pdist,native -DskipTests -Dtar -e

error

Native build fails on macos due to getgrouplist not found

OPENSSL_ROOT_DIR
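
CMake's FindOpenSSL module, used by parts of the native build, looks at the OPENSSL_ROOT_DIR environment variable when it cannot find OpenSSL on its own. use_openssl_root is a hypothetical helper that exports the variable only if the directory really contains OpenSSL headers. The sketch assumes brew --prefix openssl is where Homebrew put OpenSSL on your machine.

```shell
# use_openssl_root DIR (hypothetical helper): export OPENSSL_ROOT_DIR=DIR for the
# CMake-based native build, but only if DIR/include/openssl/ssl.h exists.
use_openssl_root() {
  if [ -f "$1/include/openssl/ssl.h" ]; then
    OPENSSL_ROOT_DIR=$1
    export OPENSSL_ROOT_DIR
  else
    echo "use_openssl_root: no OpenSSL headers under $1" >&2
    return 1
  fi
}
```

For example: use_openssl_root "$(brew --prefix openssl)" && mvn package -Pdist,native -DskipTests -Dtar -e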

check native

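After a long time it finally worked; with the settings above in place it should go faster. Most of the time went into Maven downloads, which kept failing with SSL errors.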
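
hadoop checknative -a prints one line per library. To see at a glance which native libraries are still missing, filter that output. missing_native is a hypothetical helper that assumes each line looks like name: true|false [detail].

```shell
# missing_native (hypothetical helper): read `hadoop checknative -a` output on
# stdin and print the name of every library reported as "false".
missing_native() {
  awk '$2 == "false" { sub(/:$/, "", $1); print $1 }'
}
```

For example: hadoop checknative -a | missing_native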

Copy the native libraries to

check build

Spark

brew install apache-spark scala

Version 2.4.0

spark-shell --master yarn only works when HADOOP_CONF_DIR is set in the environment.

Don't forget to source the profile afterwards.
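
Here is a sketch of the profile change. It assumes the profile file is ~/.bash_profile and that Hadoop's configuration directory is the Homebrew path used in the Hadoop section. add_line_once is a hypothetical helper that appends a line only if the file does not already contain it.

```shell
# add_line_once FILE LINE (hypothetical helper): append LINE to FILE unless an
# identical line is already there, so re-running the setup does not duplicate it.
add_line_once() {
  touch "$1" || return 1
  grep -qxF "$2" "$1" || printf '%s\n' "$2" >> "$1"
}

# Usage (paths assumed):
#   add_line_once ~/.bash_profile 'export HADOOP_CONF_DIR=/usr/local/Cellar/hadoop/3.1.1/libexec/etc/hadoop'
#   source ~/.bash_profile
#   spark-shell --master yarn
```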

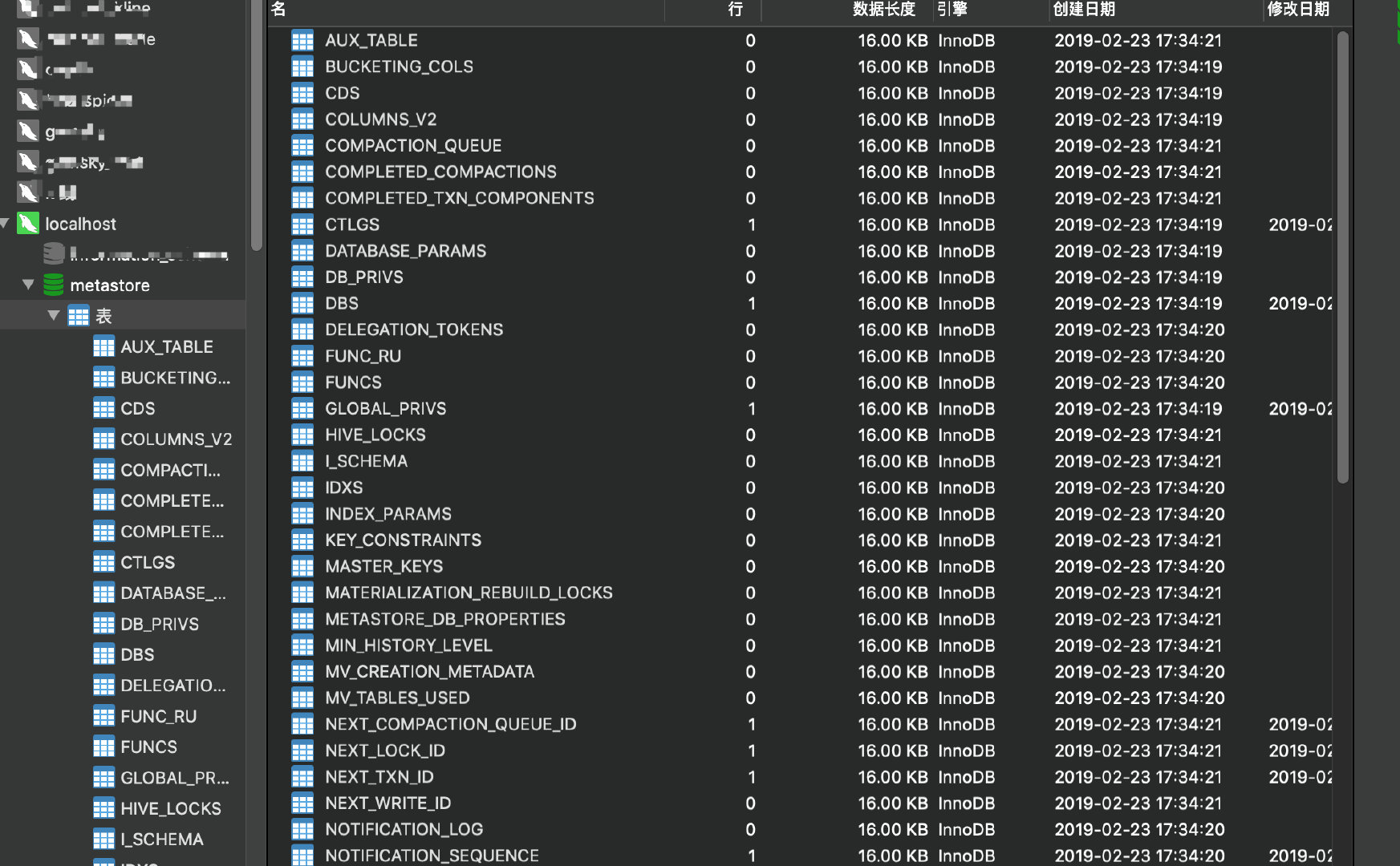

Hive

brew install hive

Version 3.1.1

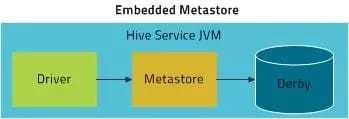

- Embedded mode (Derby) (experimental)

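In this mode, by default, the Metastore uses a Derby database, and both the database and the Metastore service are embedded in the main HiveServer process. Both are started for you when you start the HiveServer process. This mode needs the least configuration, but it supports only one active user at a time and is not certified for production use.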

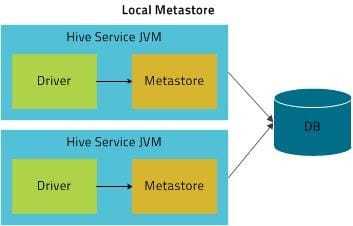

- Local mode (local metastore)

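In local mode, the Hive Metastore service runs in the same process as the main HiveServer process, but the metastore database runs in a separate process and can be on a separate host. The embedded Metastore service talks to the metastore database over JDBC.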

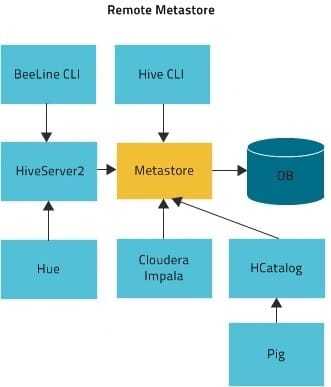

- Remote mode (remote metastore, recommended)

The client connects to the remote metastore service over Thrift.

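Clients find the metastore service through hive.metastore.uris, and the metastore service connects to the database over JDBC using javax.jdo.option.ConnectionURL. A remote metastore needs its own metastore service, started separately; each client then points at that service in its configuration file. The remote metastore service and Hive run in different processes. Running the HiveServer process on a separate host gives better availability and scalability.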

The metastore supports the common databases:

- Derby

- MySQL

- MS SQL Server

- Oracle

- Postgres

MySQL configuration

jdbc

Download mysql-connector-java.jar

Copy the jar to /usr/local/Cellar/hive/3.1.1/libexec/lib

set up mysql

Configuring the Hive Metastore
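
Setting up MySQL for the metastore mostly means creating an empty database and a user Hive can log in as. metastore_sql is a hypothetical helper that only prints the SQL, so you can review it before piping it into mysql -u root -p. The database name, user and password are placeholders. CREATE USER IF NOT EXISTS needs MySQL 5.7.6 or later.

```shell
# metastore_sql DB USER PASSWORD (hypothetical helper): print SQL that creates
# the metastore database and a local MySQL account allowed to use it.
# Values are pasted in verbatim, so avoid quotes in the password.
metastore_sql() {
  if [ $# -ne 3 ]; then
    echo "usage: metastore_sql DB USER PASSWORD" >&2
    return 1
  fi
  cat <<EOF
CREATE DATABASE IF NOT EXISTS $1;
CREATE USER IF NOT EXISTS '$2'@'localhost' IDENTIFIED BY '$3';
GRANT ALL PRIVILEGES ON $1.* TO '$2'@'localhost';
FLUSH PRIVILEGES;
EOF
}
```

For example: metastore_sql metastore hive 'choose-a-password' | mysql -u root -p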

hive conf

First check whether hive-site.xml throws an error as it is (thanks a lot, Apache!!!!!), and only then edit it.
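
The WstxParsingException in the trace below points at row 3210. In the Hive 3.1.x template, that row contains a numeric character reference to code 0x8 (commonly reported as &#8;), a backspace that XML 1.0 does not allow. Here is a sketch of the fix, assuming the reference is written as &#8; or &#x8;. strip_backspace_entity is a hypothetical helper that replaces it with a space and keeps a .bak copy.

```shell
# strip_backspace_entity FILE (hypothetical helper): replace every &#8; or &#x8;
# character reference (code 0x8, illegal in XML 1.0) with a space.
# The original is kept as FILE.bak.
strip_backspace_entity() {
  cp "$1" "$1.bak" || return 1
  sed 's/&#x\{0,1\}8;/ /g' "$1.bak" > "$1"
}
```

For example: strip_backspace_entity /usr/local/Cellar/hive/3.1.1/libexec/conf/hive-site.xml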

Error handling

Jump to the reported row with :3210 (in vim) to locate it:

    Exception in thread "main" java.lang.RuntimeException: com.ctc.wstx.exc.WstxParsingException: Illegal character entity: expansion character (code 0x8 at [row,col,system-id]: [3210,96,"file:/usr/local/Cellar/hive/3.1.1/libexec/conf/hive-site.xml"]
        at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:3003)
        at org.apache.hadoop.conf.Configuration.loadResources(Configuration.java:2931)
        at org.apache.hadoop.conf.Configuration.getProps(Configuration.java:2806)
        at org.apache.hadoop.conf.Configuration.get(Configuration.java:1460)
        at org.apache.hadoop.hive.conf.HiveConf.getVar(HiveConf.java:4990)
        at org.apache.hadoop.hive.conf.HiveConf.getVar(HiveConf.java:5063)
        at org.apache.hadoop.hive.conf.HiveConf.initialize(HiveConf.java:5150)
        at org.apache.hadoop.hive.conf.HiveConf.<init>(HiveConf.java:5093)
        at org.apache.hadoop.hive.common.LogUtils.initHiveLog4jCommon(LogUtils.java:97)
        at org.apache.hadoop.hive.common.LogUtils.initHiveLog4j(LogUtils.java:81)
        at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:699)
        at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:683)
        at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
        at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)
        at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
        at java.lang.reflect.Method.invoke(Method.java:498)
        at org.apache.hadoop.util.RunJar.run(RunJar.java:318)
        at org.apache.hadoop.util.RunJar.main(RunJar.java:232)
    Caused by: com.ctc.wstx.exc.WstxParsingException: Illegal character entity: expansion character (code 0x8 at [row,col,system-id]: [3210,96,"file:/usr/local/Cellar/hive/3.1.1/libexec/conf/hive-site.xml"]
        at com.ctc.wstx.sr.StreamScanner.constructWfcException(StreamScanner.java:621)
        at com.ctc.wstx.sr.StreamScanner.throwParseError(StreamScanner.java:491)
        at com.ctc.wstx.sr.StreamScanner.reportIllegalChar(StreamScanner.java:2456)
        at com.ctc.wstx.sr.StreamScanner.validateChar(StreamScanner.java:2403)
        at com.ctc.wstx.sr.StreamScanner.resolveCharEnt(StreamScanner.java:2369)
        at com.ctc.wstx.sr.StreamScanner.fullyResolveEntity(StreamScanner.java:1515)
        at com.ctc.wstx.sr.BasicStreamReader.nextFromTree(BasicStreamReader.java:2828)
        at com.ctc.wstx.sr.BasicStreamReader.next(BasicStreamReader.java:1123)
        at org.apache.hadoop.conf.Configuration$Parser.parseNext(Configuration.java:3257)
        at org.apache.hadoop.conf.Configuration$Parser.parse(Configuration.java:3063)
        at org.apache.hadoop.conf.Configuration.loadResource(Configuration.java:2986)
        ... 17 more

java.net.URISyntaxException: Relative path in absolute URI: ${system:java.io.tmpdir%7D/$%7Bsystem:user.name%7D

java.net.URISyntaxException when starting Hive

Put the following at the beginning of hive-site.xml
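
The widely reported workaround defines system:java.io.tmpdir and system:user.name as ordinary properties. With those defined, the ${system:...} placeholders further down the file resolve to real values instead of ending up literally in a path. add_system_props is a hypothetical helper that inserts both properties right after the opening <configuration> tag. It does nothing if they are already defined. /tmp/hive/java is only an example directory.

```shell
# add_system_props FILE (hypothetical helper): define system:java.io.tmpdir and
# system:user.name as properties right after the opening <configuration> tag,
# so ${system:...} placeholders elsewhere in FILE have something to resolve to.
# Does nothing if system:java.io.tmpdir is already defined.
add_system_props() {
  if grep -q '<name>system:java.io.tmpdir</name>' "$1"; then
    return 0
  fi
  tmp="$1.tmp.$$"
  awk '
    { print }
    /<configuration>/ && !done {
      print "  <property>"
      print "    <name>system:java.io.tmpdir</name>"
      print "    <value>/tmp/hive/java</value>"
      print "  </property>"
      print "  <property>"
      print "    <name>system:user.name</name>"
      print "    <value>${user.name}</value>"
      print "  </property>"
      done = 1
    }
  ' "$1" > "$tmp" && mv "$tmp" "$1"
}
```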

hive-site.xml
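
For a MySQL-backed remote metastore, the relevant part of hive-site.xml is the JDBC connection settings plus hive.metastore.uris. This sketch makes three assumptions: MySQL runs on localhost:3306, the driver is Connector/J 5.1 (driver class com.mysql.jdbc.Driver; Connector/J 8 uses com.mysql.cj.jdbc.Driver), and the metastore listens on its default Thrift port, 9083. write_hive_site is a hypothetical helper that writes a fresh file containing only these overrides. If you keep the template-based hive-site.xml, change the same five properties in it instead.

```shell
# write_hive_site DIR DB USER PASSWORD (hypothetical helper): write
# DIR/hive-site.xml with the MySQL connection for the metastore and the Thrift
# URI clients use to reach it. Arguments are pasted into XML unescaped.
write_hive_site() {
  cat > "$1/hive-site.xml" <<EOF
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>javax.jdo.option.ConnectionURL</name>
    <value>jdbc:mysql://localhost:3306/$2</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionDriverName</name>
    <value>com.mysql.jdbc.Driver</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionUserName</name>
    <value>$3</value>
  </property>
  <property>
    <name>javax.jdo.option.ConnectionPassword</name>
    <value>$4</value>
  </property>
  <property>
    <name>hive.metastore.uris</name>
    <value>thrift://localhost:9083</value>
  </property>
</configuration>
EOF
}
```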

Initialize the metastore & test

ERROR

schematool -dbType mysql -initSchema

- https://cwiki.apache.org/confluence/display/Hive/Hive+Schema+Tool

- https://stackoverflow.com/questions/42209875/hive-2-1-1-metaexceptionmessageversion-information-not-found-in-metastore

This happens because the Hive Metastore Server process was not started properly.
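
When hive.metastore.uris is set, the CLI needs a metastore that is already listening on that Thrift port (9083 by default). One approach is to start the service in the background and wait for the port before running hive. wait_for_port is a hypothetical helper. It relies on bash's /dev/tcp, so run it under bash.

```shell
# wait_for_port HOST PORT [TRIES] (hypothetical helper, bash only): try to open a
# TCP connection to HOST:PORT once per second; succeed as soon as one works,
# fail after TRIES attempts (default 30).
wait_for_port() {
  host=$1
  port=$2
  tries=${3:-30}
  while [ "$tries" -gt 0 ]; do
    # bash treats /dev/tcp/HOST/PORT as a socket; the subshell closes it again.
    if (exec 3<>"/dev/tcp/$host/$port") 2>/dev/null; then
      return 0
    fi
    tries=$((tries - 1))
    if [ "$tries" -gt 0 ]; then
      sleep 1
    fi
  done
  return 1
}

# Usage (log path assumed):
#   nohup hive --service metastore > /tmp/metastore.log 2>&1 &
#   wait_for_port localhost 9083 60 && hive
```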

Hive on Spark

Hive-on-MR is deprecated in Hive 2 and may not be available in the future versions. Consider using a different execution engine (i.e. spark, tez) or using Hive 1.X releases.

Version issues

Spark connects to Hive through hive.metastore.uris

cp hive-site.xml /usr/local/Cellar/apache-spark/2.4.0/libexec/conf
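
Spark finds the metastore through hive.metastore.uris, so check that the copied hive-site.xml actually sets it. metastore_uris is a hypothetical helper that prints the value. It assumes the usual layout, where a property's <name> and <value> appear before its </property>; an empty <value/> prints nothing.

```shell
# metastore_uris FILE (hypothetical helper): print the value of
# hive.metastore.uris from a hive-site.xml. Assumes <name> and <value> of one
# property appear in that order before its </property>; an empty <value/>
# prints nothing.
metastore_uris() {
  awk '
    /<name>hive\.metastore\.uris<\/name>/ { found = 1 }
    found && /<value>/ {
      sub(/.*<value>/, "")
      sub(/<\/value>.*/, "")
      print
      exit
    }
    found && /<\/property>/ { exit }
  ' "$1"
}
```

For example: metastore_uris /usr/local/Cellar/apache-spark/2.4.0/libexec/conf/hive-site.xml should print something like thrift://localhost:9083.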

Start the Hive Metastore Server

hive --service metastore
