AutoEncoder

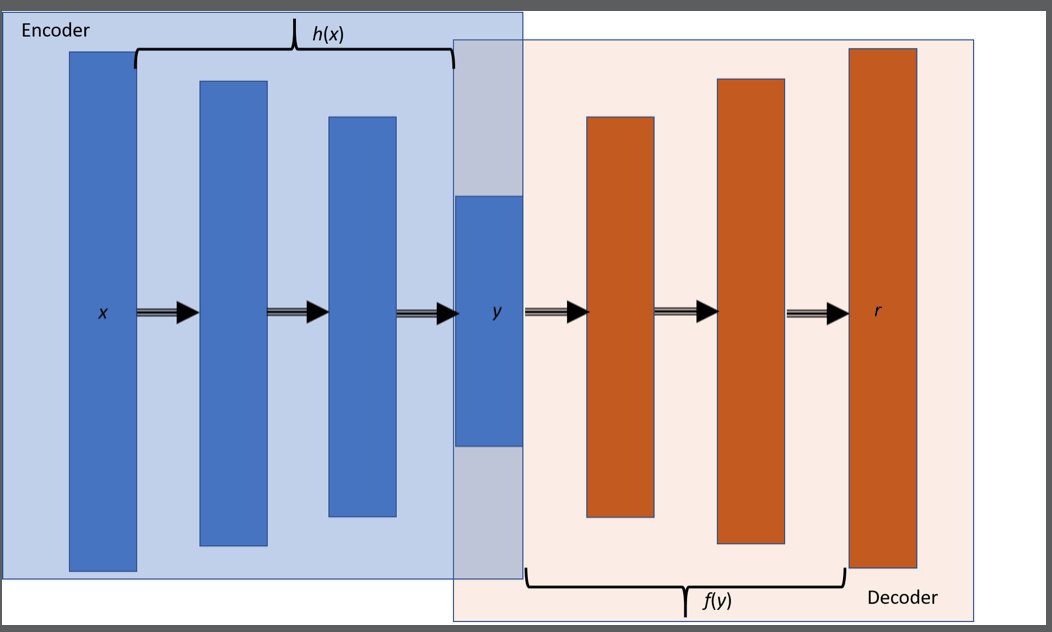

AE

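- encode: take the input x and map it through a transformation h to an encoded signal y = h(x)

- decode: map the code y back to a reconstruction x' that should be as close to x as possible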

The weights can be shared (tied): the decoder can simply reuse the encoder's weights in reverse, i.e. transposed.
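
A minimal sketch of a tied-weight autoencoder in plain Python, with no framework, so the sharing is explicit. The class name, the tanh encoder, the linear decoder and the mean-squared-error loss are illustrative assumptions. Only the forward pass and the loss are shown, not training.

```python
import math
import random


class TiedAutoEncoder:
    """Toy autoencoder whose decoder reuses the encoder's weight matrix, transposed.

    encode: y = tanh(W x + b)   with W of shape (n_hidden, n_in)
    decode: x' = W^T y + c      same W, transposed; only the biases b, c are separate
    """

    def __init__(self, n_in: int, n_hidden: int, seed: int = 0) -> None:
        rng = random.Random(seed)
        self.W = [[rng.uniform(-0.1, 0.1) for _ in range(n_in)] for _ in range(n_hidden)]
        self.b = [0.0] * n_hidden  # encoder bias
        self.c = [0.0] * n_in      # decoder bias

    def encode(self, x: list[float]) -> list[float]:
        return [math.tanh(sum(w * xj for w, xj in zip(row, x)) + bi)
                for row, bi in zip(self.W, self.b)]

    def decode(self, y: list[float]) -> list[float]:
        # Row j of W^T is column j of W: x'_j = sum_i W[i][j] * y_i + c_j
        return [sum(self.W[i][j] * y[i] for i in range(len(y))) + cj
                for j, cj in enumerate(self.c)]

    def loss(self, x: list[float]) -> float:
        """Mean squared reconstruction error between x and decode(encode(x))."""
        x_rec = self.decode(self.encode(x))
        return sum((xi - ri) ** 2 for xi, ri in zip(x, x_rec)) / len(x)


ae = TiedAutoEncoder(n_in=4, n_hidden=2)
x = [0.5, -1.0, 0.25, 0.0]
print(ae.loss(x))
```

Tying the weights stores one n_hidden × n_in matrix instead of two, halving the weight parameters.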

Different loss functions and different hidden layers give different kinds of AE.
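
The notes do not say which variants they mean. Here is a sketch of three common ones (basic, sparse, denoising), assuming the encoder and decoder are given as plain functions. Only the loss changes between them. The L1 sparsity penalty and the Gaussian corruption are typical choices, not the only ones.

```python
import random
from typing import Callable

Vec = list[float]
Coder = Callable[[Vec], Vec]


def mse(x: Vec, x_rec: Vec) -> float:
    return sum((a - b) ** 2 for a, b in zip(x, x_rec)) / len(x)


def plain_loss(x: Vec, encode: Coder, decode: Coder) -> float:
    """Basic AE: reconstruct x from its own code y."""
    return mse(x, decode(encode(x)))


def sparse_loss(x: Vec, encode: Coder, decode: Coder, lam: float = 0.1) -> float:
    """Sparse AE (L1 form): also penalize the code so most hidden units stay near 0."""
    y = encode(x)
    return mse(x, decode(y)) + lam * sum(abs(v) for v in y)


def denoising_loss(x: Vec, encode: Coder, decode: Coder,
                   noise_std: float = 0.1, seed: int = 0) -> float:
    """Denoising AE: feed a corrupted x, but compare the output with the clean x."""
    rng = random.Random(seed)
    x_noisy = [v + rng.gauss(0.0, noise_std) for v in x]
    return mse(x, decode(encode(x_noisy)))


def identity(v: Vec) -> Vec:
    """Stand-in for a trained encoder/decoder pair."""
    return list(v)


x = [1.0, -2.0, 0.5]
print(plain_loss(x, identity, identity))      # perfect reconstruction
print(sparse_loss(x, identity, identity))     # adds 0.1 * (1 + 2 + 0.5)
print(denoising_loss(x, identity, identity))  # the identity cannot remove the noise
```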

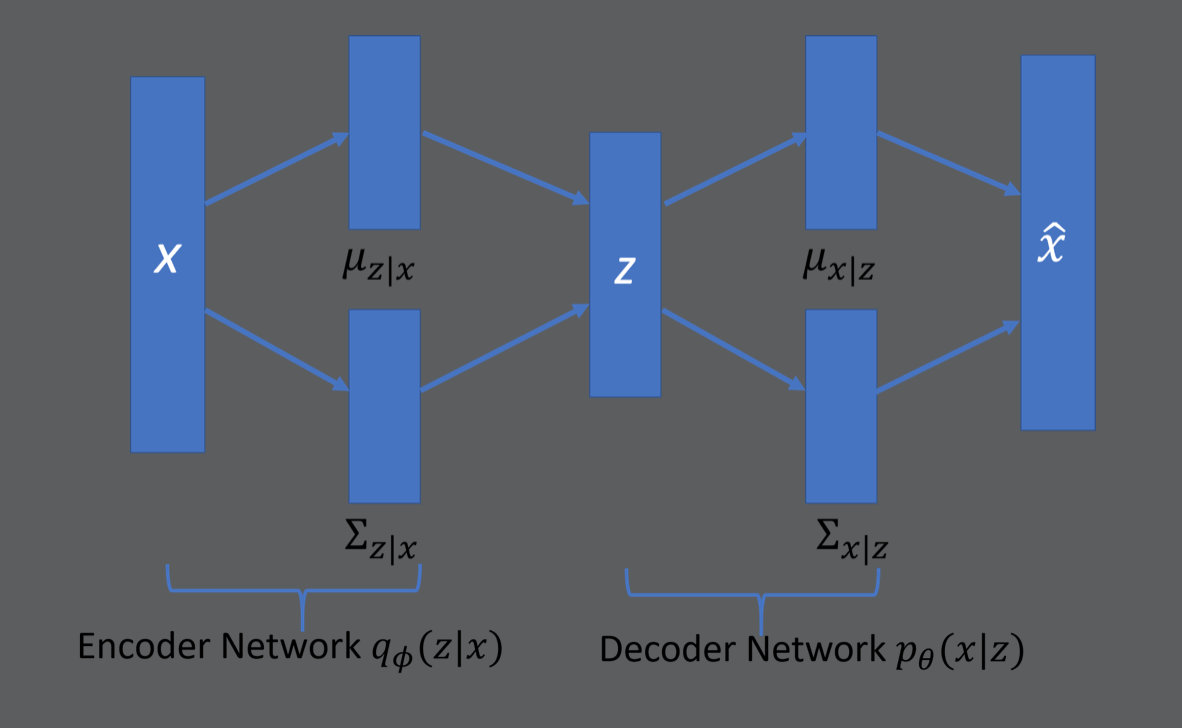

Variational Autoencoder (VAE)

Goal: learn from many samples how to generate new samples.

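Learn the distribution P(x) of the data x. Once the distribution is known, new samples are easy to draw from it. Estimating a data distribution is hard, however, especially when there is not much data.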
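
A minimal sketch of "learn P(x), then sample". It assumes the data is one-dimensional and that a Gaussian family is a good enough model. The synthetic data and its parameters are made up for illustration. For data such as images no simple family like this fits, which is why estimating P(x) is hard.

```python
import random
from statistics import NormalDist

# Made-up 1-D "training data"; its true distribution happens to be N(2, 0.5^2).
rng = random.Random(0)
data = [rng.gauss(2.0, 0.5) for _ in range(1000)]

# Step 1: estimate P(x), assuming a Gaussian family (fit mean and standard deviation).
p_x = NormalDist.from_samples(data)

# Step 2: with P(x) in hand, generating new samples is just drawing from it.
new_samples = p_x.samples(5, seed=1)
print(f"fitted P(x): mean={p_x.mean:.3f}, stdev={p_x.stdev:.3f}")
print(new_samples)
```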

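The VAE rests on this idea: any d-dimensional random variable, whatever distribution it actually follows, can be approximated by taking d standard Gaussian random variables and passing them through a sufficiently complex function.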
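
In one dimension the claim can be checked directly. If z ~ N(0, 1), then Φ(z) is uniform on (0, 1). Applying the inverse CDF of a target distribution then turns it into a sample from that target. This sketch uses an exponential target Exp(1), chosen only for illustration. In a VAE the "sufficiently complex function" is the decoder network, learned from data rather than derived by hand.

```python
import math
import random


def gaussian_to_exponential(z: float) -> float:
    """Map z ~ N(0, 1) to a sample from Exp(1) via x = F^-1(Phi(z)).

    For Exp(1), F^-1(p) = -log(1 - p), and 1 - Phi(z) = Phi(-z) = 0.5 * erfc(z / sqrt(2)).
    """
    u = 0.5 * math.erfc(z / math.sqrt(2.0))
    return -math.log(u)


rng = random.Random(0)
xs = [gaussian_to_exponential(rng.gauss(0.0, 1.0)) for _ in range(100_000)]
mean = sum(xs) / len(xs)
print(f"sample mean: {mean:.3f} (Exp(1) has mean 1)")
```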

- An AE reconstructs x

- A VAE generates new x

After the VAE is trained, use the decoder to generate new x.
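
Generation needs only the decoder: draw z from the prior N(0, I) and decode it, since the encoder is used during training only. In this sketch, `decode` is a hypothetical fixed function standing in for a trained decoder network. The 2-D latent and 3-D output sizes are arbitrary.

```python
import math
import random

LATENT_DIM = 2  # arbitrary choice for this sketch


def decode(z: list[float]) -> list[float]:
    """Hypothetical stand-in for a trained decoder: maps a 2-D latent z to a 3-D sample.

    A real VAE decoder is a neural network whose weights were learned during training.
    """
    z1, z2 = z
    return [math.tanh(z1 + 0.5 * z2), math.tanh(z2), math.tanh(z1 - z2)]


def generate(n: int, seed: int = 0) -> list[list[float]]:
    """Sample z ~ N(0, I) from the prior and decode it; no encoder is involved."""
    rng = random.Random(seed)
    return [decode([rng.gauss(0.0, 1.0) for _ in range(LATENT_DIM)]) for _ in range(n)]


new_x = generate(5)
for x in new_x:
    print([round(v, 3) for v in x])
```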

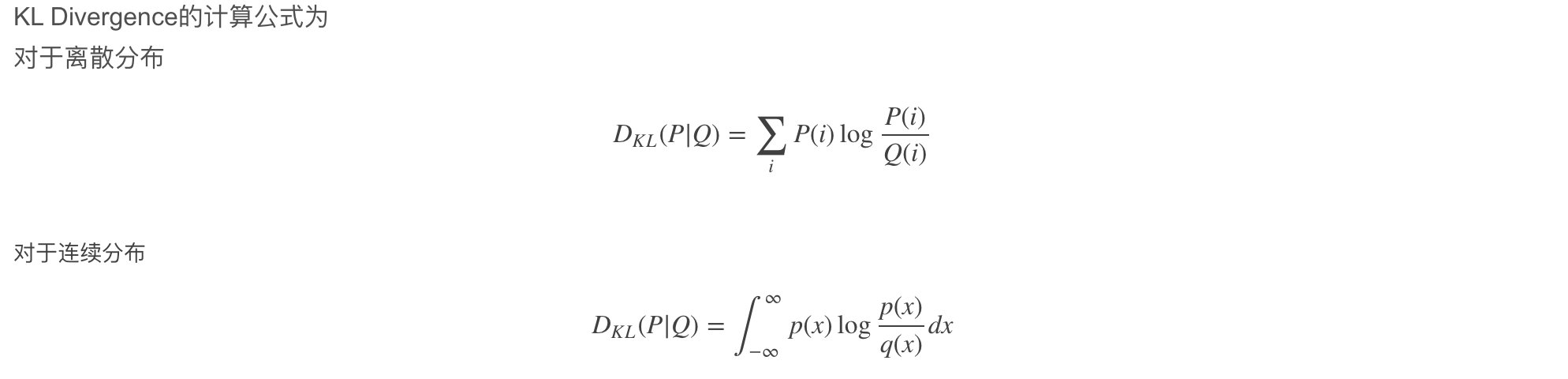

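The KL divergence (Kullback–Leibler divergence) measures the difference, or "distance", between two distributions. It is not symmetric, so it is not a true distance metric.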

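For discrete distributions the definition is D_KL(P‖Q) = Σ_x P(x) log(P(x)/Q(x)). A VAE typically has an encoder that outputs a diagonal Gaussian N(μ, σ²) and a prior of N(0, I). Assuming that setup, the KL term in the loss has a closed form. The plain-Python sketch below implements both. The function names are illustrative, and σ² is passed as log σ² because that is the common parametrisation.

```python
import math


def kl_discrete(p: list[float], q: list[float]) -> float:
    """D_KL(P || Q) = sum_i p_i * log(p_i / q_i).

    Terms with p_i = 0 contribute 0; q_i must be > 0 wherever p_i > 0.
    """
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def kl_gaussian_to_standard(mu: list[float], log_var: list[float]) -> float:
    """Closed-form D_KL(N(mu, diag(sigma^2)) || N(0, I)).

    Equals -1/2 * sum(1 + log sigma^2 - mu^2 - sigma^2) over the latent dimensions.
    """
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var))


p, q = [0.5, 0.5], [0.9, 0.1]
print(kl_discrete(p, q), kl_discrete(q, p))  # two different values: KL is not symmetric
print(kl_gaussian_to_standard([1.0], [0.0]))
```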

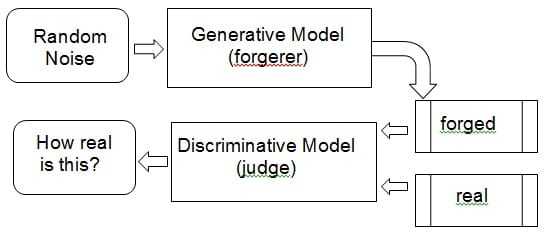

Generative Adversarial Network (GAN)

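Two neural networks are trained together:

- Generator, denoted G: after learning from a large number of samples, it takes random noise as input and produces samples realistic enough to pass for real ones

- Discriminator, denoted D: receives both real samples and samples produced by G, and tries to tell the two apart

- G and D play a game against each other: as training proceeds, G gets better at generating and D gets better at discriminating, until both converge. They are trained alternately so that neither side becomes too strong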

Core code

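This is a minimal, framework-free sketch of the two players on 1-D toy data. Both parametrisations are hypothetical stand-ins for real networks, which in practice would be FC networks or CNNs. G(z) = a*z + b turns Gaussian noise into a sample, and D(x) = sigmoid(w*x + c) returns the probability that x is real.

```python
import math
import random


def sigmoid(t: float) -> float:
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


class Generator:
    """Hypothetical toy G: turns noise z ~ N(0, 1) into a sample a*z + b."""

    def __init__(self, a: float = 1.0, b: float = 0.0) -> None:
        self.a, self.b = a, b

    def __call__(self, z: float) -> float:
        return self.a * z + self.b


class Discriminator:
    """Hypothetical toy D: probability that x is a real sample."""

    def __init__(self, w: float = 0.0, c: float = 0.0) -> None:
        self.w, self.c = w, c

    def __call__(self, x: float) -> float:
        return sigmoid(self.w * x + self.c)


rng = random.Random(0)
G, D = Generator(), Discriminator()
fake = [G(rng.gauss(0.0, 1.0)) for _ in range(5)]
scores = [D(x) for x in fake]  # untrained D (w = c = 0) outputs 0.5 for every input
print(fake, scores)
```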

Core loss

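The original GAN objective is min_G max_D E[log D(x)] + E[log(1 - D(G(z)))]. This sketch assumes binary cross-entropy on D's output probabilities, the common choice (e.g. `BCELoss` in PyTorch). For G it assumes the non-saturating loss -log D(G(z)), which is normally used instead of minimizing log(1 - D(G(z))). The notes do not say which variant was used.

```python
import math

EPS = 1e-12  # keeps log() away from 0


def bce(p: float, target: float) -> float:
    """Binary cross-entropy of one predicted probability p against a 0/1 target."""
    p = min(max(p, EPS), 1.0 - EPS)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def d_loss(d_real: list[float], d_fake: list[float]) -> float:
    """D is trained to label real samples 1 and generated samples 0."""
    real = sum(bce(p, 1.0) for p in d_real) / len(d_real)
    fake = sum(bce(p, 0.0) for p in d_fake) / len(d_fake)
    return real + fake


def g_loss(d_fake: list[float]) -> float:
    """Non-saturating G loss: G wants D to label its samples 1."""
    return sum(bce(p, 1.0) for p in d_fake) / len(d_fake)


print(d_loss([0.5], [0.5]), g_loss([0.5]))  # a D that cannot tell real from fake
```

When D outputs 0.5 everywhere, d_loss is 2 ln 2 and g_loss is ln 2.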

Training (both networks together)

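This sketches the alternating training loop on a 1-D toy problem in plain Python, with hand-derived gradients. Everything here is an assumption for illustration:

- real data drawn from N(4, 1.25²)
- G(z) = a*z + b and D(x) = sigmoid(w*x + c)
- one D step, then one G step per iteration
- the non-saturating G loss

```python
import math
import random


def sigmoid(t: float) -> float:
    """Numerically stable logistic function."""
    if t >= 0:
        return 1.0 / (1.0 + math.exp(-t))
    e = math.exp(t)
    return e / (1.0 + e)


def train_gan(steps: int = 3000, batch: int = 64, lr: float = 0.1, seed: int = 0):
    """Alternately update D and G; returns the final parameters (a, b, w, c)."""
    rng = random.Random(seed)
    a, b = 1.0, 0.0  # G(z) = a*z + b
    w, c = 0.0, 0.0  # D(x) = sigmoid(w*x + c)
    for _ in range(steps):
        # D step: gradient ascent on mean log D(real) + mean log(1 - D(fake)).
        real = [rng.gauss(4.0, 1.25) for _ in range(batch)]
        fake = [a * rng.gauss(0.0, 1.0) + b for _ in range(batch)]
        gw = gc = 0.0
        for x in real:
            s = sigmoid(w * x + c)
            gw += (1.0 - s) * x  # d/dw of log sigmoid(w*x + c)
            gc += 1.0 - s
        for x in fake:
            s = sigmoid(w * x + c)
            gw -= s * x  # d/dw of log(1 - sigmoid(w*x + c))
            gc -= s
        w += lr * gw / batch
        c += lr * gc / batch

        # G step (D held fixed): gradient descent on mean -log D(G(z)).
        ga = gb = 0.0
        for _ in range(batch):
            z = rng.gauss(0.0, 1.0)
            s = sigmoid(w * (a * z + b) + c)
            ga -= (1.0 - s) * w * z
            gb -= (1.0 - s) * w
        a -= lr * ga / batch
        b -= lr * gb / batch
    return a, b, w, c


a, b, w, c = train_gan()
sample_rng = random.Random(1)
samples = [a * sample_rng.gauss(0.0, 1.0) + b for _ in range(10_000)]
gen_mean = sum(samples) / len(samples)
print(f"generated mean: {gen_mean:.2f} (real mean: 4.0)")
```

This D is linear in x, so it can only compare means, and nothing pushes G to match the spread of the real data. A multilayer D (an FC network or a CNN, as in these notes) can detect more than the mean. With a framework, the same loop is usually written with automatic differentiation instead of hand-written gradients.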

If you use a CNN, don't bother without GPU acceleration: on a CPU it is slow, extremely slow. Pay very close attention to tensor shapes.

Results generated by G when trained with a plain fully connected (FC) network
